Re: Imbalanced workload between workers

Posted by Pieter Hameete on

URL: http://deprecated-apache-flink-user-mailing-list-archive.369.s1.nabble.com/Imbalanced-workload-between-workers-tp4511p4542.html

URL: http://deprecated-apache-flink-user-mailing-list-archive.369.s1.nabble.com/Imbalanced-workload-between-workers-tp4511p4542.html

Hi Stephen,

it was the first CoGroup :-)

- Pieter

2016-01-28 11:24 GMT+01:00 Stephan Ewen <[hidden email]>:

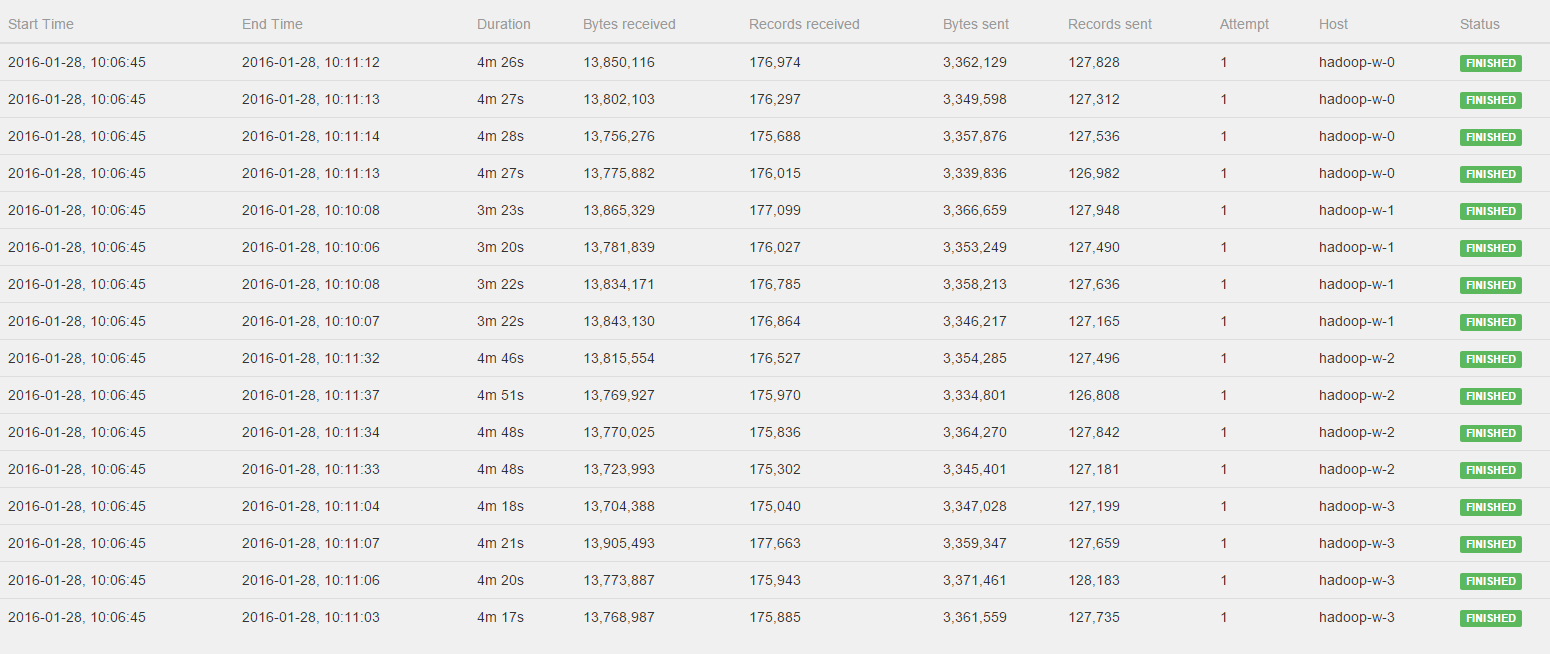

Hey!Which CoGroup in the plan are the statistics for? For the first or the second one?StephanOn Thu, Jan 28, 2016 at 10:58 AM, Till Rohrmann <[hidden email]> wrote:Hi Pieter,you can see in the log that the operators are all started at the same time. However, you're right that they don't finish at the same time. The sub tasks which run on the same node exhibit a similar runtime. However, all nodes (not only hadoop-w-0 compared to the others) show different runtimes. I would guess that this is due to some other load on the GCloud machines or some other kind of asymmetry between the hosts.Cheers,TillOn Thu, Jan 28, 2016 at 10:17 AM, Pieter Hameete <[hidden email]> wrote:Hi Stephen, Till,I've watched the Job again and please see the log of the CoGroup operator:All workers get to process a fairly distributed amount of bytes and records, BUT hadoop-w-0, hadoop-w-2 and hadoop-w-3 don't start working until hadoop-w-1 is finished. Is this behavior to be expected with a CoGroup or could there still be something wrong in the distrubtion of the data?Kind regards,Pieter2016-01-27 21:48 GMT+01:00 Stephan Ewen <[hidden email]>:Hi Pieter!Interesting, but good :-)I don't think we did much on the hash functions since 0.9.1. I am a bit surprised that it made such a difference. Well, as long as it improves with the newer version :-)Greetings,StephanOn Wed, Jan 27, 2016 at 9:42 PM, Pieter Hameete <[hidden email]> wrote:Hi Till,i've upgraded to Flink 0.10.1 and ran the job again without any changes to the code to see the bytes input and output of the operators and for the different workers.To my surprise it is very well balanced between all workers and because of this the job completed much faster.Are there any changes/fixes between Flink 0.9.1 and 0.10.1 that could cause this to be better for me now?Thanks,Pieter2016-01-27 14:10 GMT+01:00 Pieter Hameete <[hidden email]>:Cheers for the quick reply Till.That would be very useful information to have! I'll upgrade my project to Flink 0.10.1 tongiht and let you know if I can find out if theres a skew in the data :-)- Pieter2016-01-27 13:49 GMT+01:00 Till Rohrmann <[hidden email]>:Could it be that your data is skewed? This could lead to different loads on different task managers.With the latest Flink version, the web interface should show you how many bytes each operator has written and received. There you could see if one operator receives more elements than the others.Cheers,TillOn Wed, Jan 27, 2016 at 1:35 PM, Pieter Hameete <[hidden email]> wrote:Hi guys,Currently I am running a job in the GCloud in a configuration with 4 task managers that each have 4 CPUs (for a total parallelism of 16).However, I noticed my job is running much slower than expected and after some more investigation I found that one of the workers is doing a majority of the work (its CPU load was at 100% while the others were almost idle).My job execution plan can be found here: http://i.imgur.com/fHKhVFf.pngThe input is split into multiple files so loading the data is properly distributed over the workers.I am wondering if you can provide me with some tips on how to figure out what is going wrong here:

- Could this imbalance in workload be the result of an imbalance in the hash paritioning?

- Is there a convenient way to see how many elements each worker gets to process? Would it work to write the output of the CoGroup to disk because each worker writes to its own output file and investigate the differences?

- Is there something strange about the execution plan that could cause this?

Thanks and kind regards,Pieter

| Free forum by Nabble | Edit this page |