Does Flink support YARN/HDFS HA?

Posted by 邓俊华 on

URL: http://deprecated-apache-flink-user-mailing-list-archive.369.s1.nabble.com/does-flink-support-yarn-hdfs-HA-tp16448.html

Hi,

I currently run Spark Streaming on YARN with HA enabled, and I want to migrate the Spark Streaming jobs to Flink. However, the Flink job does not work: it cannot connect to the HDFS logical NameNode URL.

It always throws the exception below. I tried to trace through the Flink code, and I suspect something goes wrong when the Hadoop configuration is read.

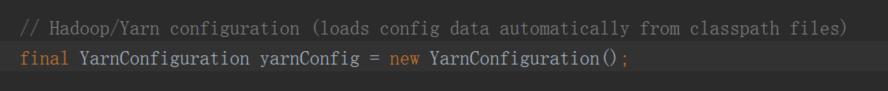

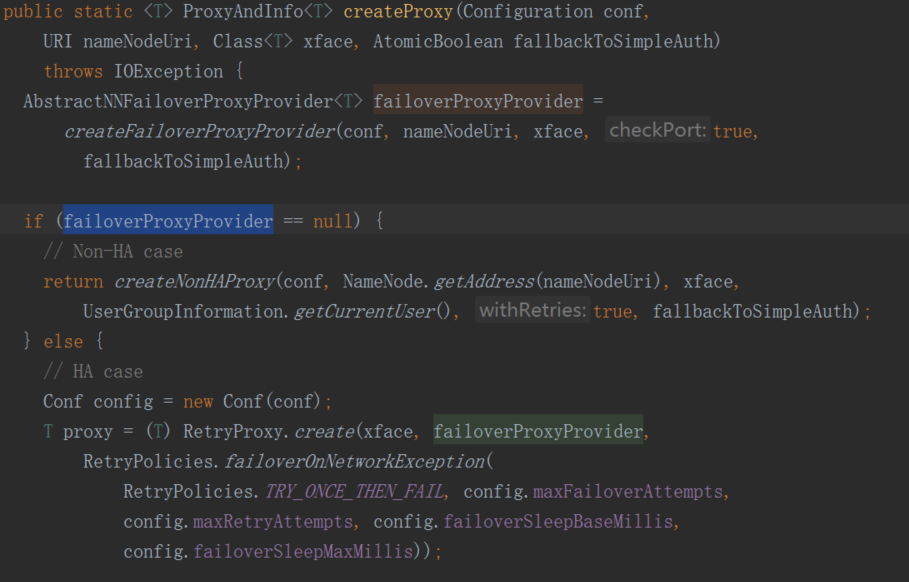

As the code above shows, `failoverProxyProvider` is null, so the client treats this as a non-HA case. The `YarnConfiguration` does not read `hdfs-site.xml` to obtain the value of `"dfs.client.failover.proxy.provider.startdt"`; only `yarn-site.xml` and `core-site.xml` are read.
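Roughly, the non-HA decision described above comes down to one key lookup: the HDFS client takes the authority of the `hdfs://` URI (here `startdt`) and looks for `dfs.client.failover.proxy.provider.<authority>`. If that key is absent from the loaded configuration, it falls back to treating the authority as a plain hostname, which is consistent with the `UnknownHostException: startdt` in the log. The following is a minimal JDK-only sketch of that check, assuming the standard Hadoop `<configuration><property><name>/<value>` file layout. `HaConfigCheck`, `readSiteXml` and `isHaNameservice` are hypothetical names; this is not Hadoop's actual `NameNodeProxies` code.

```java
import java.io.File;
import java.util.HashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical JDK-only sketch of the HA lookup; does not use Hadoop's own classes.
final class HaConfigCheck {
    // Per-nameservice key prefix mentioned in the post.
    static final String PROVIDER_PREFIX = "dfs.client.failover.proxy.provider.";

    // Reads <property><name>..</name><value>..</value></property> entries from a Hadoop-style *-site.xml.
    static Map<String, String> readSiteXml(File file) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(file);
        Map<String, String> props = new HashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element p = (Element) properties.item(i);
            NodeList names = p.getElementsByTagName("name");
            NodeList values = p.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0) {
                props.put(names.item(0).getTextContent().trim(), values.item(0).getTextContent().trim());
            }
        }
        return props;
    }

    // True if a failover proxy provider is configured for the URI authority
    // (e.g. "startdt" in hdfs://startdt/...). False means the authority would be
    // handled as an ordinary hostname, i.e. the non-HA path.
    static boolean isHaNameservice(Map<String, String> props, String authority) {
        String provider = props.get(PROVIDER_PREFIX + authority);
        return provider != null && !provider.isEmpty();
    }

    public static void main(String[] args) throws Exception {
        if (args.length < 1) {
            System.out.println("usage: java HaConfigCheck.java <hdfs-site.xml> [nameservice]");
            return;
        }
        Map<String, String> props = readSiteXml(new File(args[0]));
        String authority = args.length > 1 ? args[1] : "startdt";
        if (isHaNameservice(props, authority)) {
            System.out.println(authority + " -> HA, provider " + props.get(PROVIDER_PREFIX + authority));
        } else {
            System.out.println(authority + " -> no failover provider; would be treated as a hostname");
        }
    }
}
```

With Java 11 or later it can be run directly as `java HaConfigCheck.java /etc/hadoop/conf/hdfs-site.xml startdt`, where the path is only an assumed example location.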

I have configured and exported `YARN_CONF_DIR` and `HADOOP_CONF_DIR` in `/etc/profile`, and I am sure the `*-site.xml` files are in this directory. I can also see the values in the environment.
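To confirm what a given process actually sees, a small diagnostic can read `HADOOP_CONF_DIR` and `YARN_CONF_DIR` from its own environment and check each directory for the three site files. This is a hypothetical helper (`ConfDirCheck`, `missingSiteFiles`), not part of Flink or Hadoop. Note that `System.getenv` only reflects the environment of the JVM running it, and `/etc/profile` is sourced by login shells, so a result obtained from an interactive shell does not by itself show what a YARN container sees.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical diagnostic: checks that a Hadoop configuration directory contains the expected site files.
final class ConfDirCheck {
    static final List<String> SITE_FILES = Arrays.asList("core-site.xml", "hdfs-site.xml", "yarn-site.xml");

    // Returns the site files missing from confDir (all of them if the directory does not exist).
    static List<String> missingSiteFiles(Path confDir) {
        List<String> missing = new ArrayList<>();
        for (String name : SITE_FILES) {
            if (!Files.isRegularFile(confDir.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Inspects only the environment of this JVM process.
        for (String var : Arrays.asList("HADOOP_CONF_DIR", "YARN_CONF_DIR")) {
            String dir = System.getenv(var);
            if (dir == null || dir.isEmpty()) {
                System.out.println(var + " is not set in this process");
                continue;
            }
            List<String> missing = missingSiteFiles(Paths.get(dir));
            System.out.println(var + "=" + dir
                    + (missing.isEmpty() ? " (all site files present)" : " missing " + missing));
        }
    }
}
```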

Can anybody provide me some advice?

```
2017-10-25 21:02:14,721 DEBUG org.apache.hadoop.hdfs.BlockReaderLocal - dfs.domain.socket.path =
2017-10-25 21:02:14,755 ERROR org.apache.flink.yarn.YarnApplicationMasterRunner - YARN Application Master initialization failed
java.lang.IllegalArgumentException: java.net.UnknownHostException: startdt
    at org.apache.hadoop.security.SecurityUtil.buildTokenService(SecurityUtil.java:378)
    at org.apache.hadoop.hdfs.NameNodeProxies.createNonHAProxy(NameNodeProxies.java:310)
    at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:176)
    at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:678)
    at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:619)
    at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:149)
    at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2669)
    at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:94)
    at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2703)
    at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2685)
    at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:373)
    at org.apache.hadoop.fs.Path.getFileSystem(Path.java:295)
    at org.apache.flink.yarn.Utils.createTaskExecutorContext(Utils.java:385)
    at org.apache.flink.yarn.YarnApplicationMasterRunner.runApplicationMaster(YarnApplicationMasterRunner.java:324)
    at org.apache.flink.yarn.YarnApplicationMasterRunner$1.call(YarnApplicationMasterRunner.java:195)
    at org.apache.flink.yarn.YarnApplicationMasterRunner$1.call(YarnApplicationMasterRunner.java:192)
    at org.apache.flink.runtime.security.HadoopSecurityContext$1.run(HadoopSecurityContext.java:43)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:415)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)
    at org.apache.flink.runtime.security.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:40)
    at org.apache.flink.yarn.YarnApplicationMasterRunner.run(YarnApplicationMasterRunner.java:192)
    at org.apache.flink.yarn.YarnApplicationMasterRunner.main(YarnApplicationMasterRunner.java:116)
Caused by: java.net.UnknownHostException: startdt
    ... 23 more
```