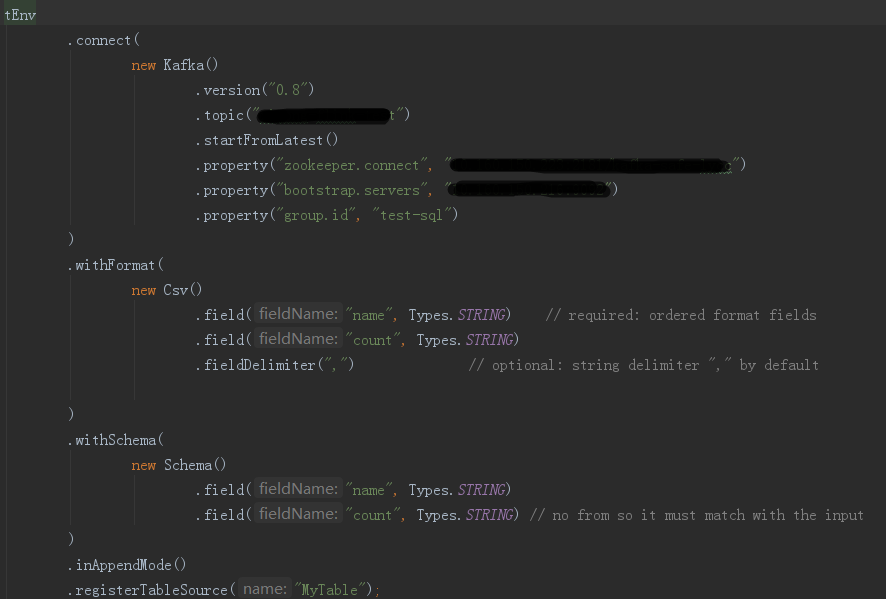

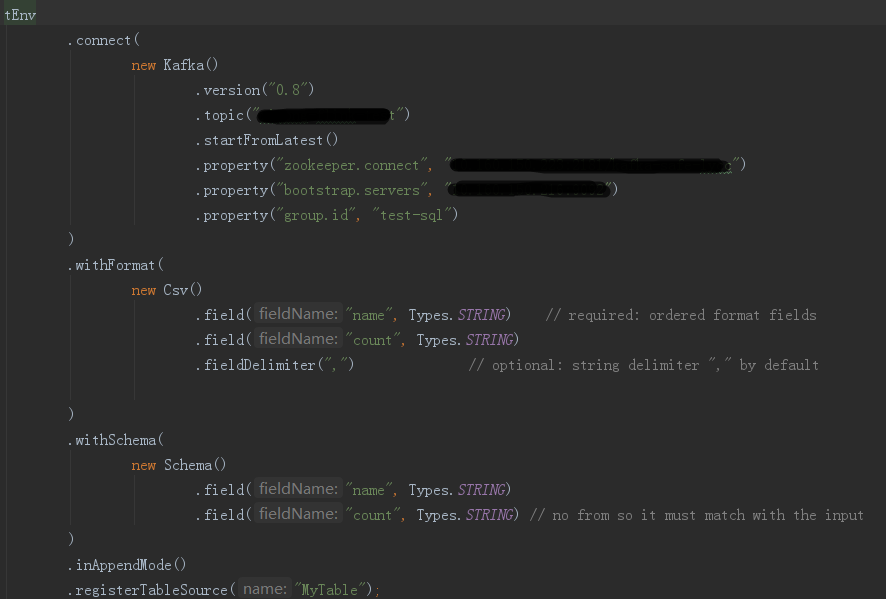

SQL program throws an exception when registering a Kafka source with CSV format


I registered a Kafka message source with the CSV format and got the following error:

BTW, the Flink version is 1.6.2. Thanks, Marvin

Re: SQL program throws an exception when registering a Kafka source with CSV format


Hi Marvin,

I took a look at the Flink code, and it seems we can't use the CSV format for Kafka; you can use JSON instead. As the exception shows, Flink can't find a suitable DeserializationSchemaFactory. Currently, only the JSON and Avro formats provide a DeserializationSchemaFactory.

Best,

Hequn

On Tue, Dec 11, 2018 at 5:48 PM Marvin777 <[hidden email]> wrote:
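To illustrate why the lookup fails, here is a deliberately simplified model of factory selection. It assumes that a format factory is chosen by matching its required context properties (such as `format.type`) against the properties of the table descriptor. The class `FormatFactoryLookup`, its fields, and the exception message are hypothetical and are not Flink's actual factory-discovery code. Only JSON and Avro are registered, mirroring the situation described above.

```java
import java.util.List;
import java.util.Map;

/**
 * Hypothetical, simplified model of format-factory selection. A factory is picked
 * only if every property in its required context matches the descriptor properties.
 * This is NOT Flink's implementation; it only illustrates the failure mode.
 */
class FormatFactoryLookup {

    /** Hypothetical stand-in for a format factory and the context it requires. */
    static final class FormatFactory {
        final String name;
        final Map<String, String> requiredContext;

        FormatFactory(String name, Map<String, String> requiredContext) {
            this.name = name;
            this.requiredContext = requiredContext;
        }
    }

    // Only JSON and Avro are registered, so a CSV request has nothing to match.
    static final List<FormatFactory> REGISTERED = List.of(
            new FormatFactory("json", Map.of("format.type", "json")),
            new FormatFactory("avro", Map.of("format.type", "avro")));

    /** Returns the first registered factory whose required context is satisfied. */
    static FormatFactory find(Map<String, String> properties) {
        for (FormatFactory factory : REGISTERED) {
            boolean matches = factory.requiredContext.entrySet().stream()
                    .allMatch(entry -> entry.getValue().equals(properties.get(entry.getKey())));
            if (matches) {
                return factory;
            }
        }
        throw new IllegalStateException(
                "Could not find a suitable deserialization schema factory for " + properties);
    }

    public static void main(String[] args) {
        System.out.println(find(Map.of("format.type", "json")).name); // prints json
        try {
            find(Map.of("format.type", "csv"));
        } catch (IllegalStateException ex) {
            // The CSV request falls through every registered factory.
            System.out.println("csv lookup failed: " + ex.getMessage());
        }
    }
}
```

In this model, supporting CSV simply means registering one more factory whose required context matches `format.type=csv`.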


Re: SQL program throws an exception when registering a Kafka source with CSV format


In reply to this post by Marvin777

Hi Marvin,

the CSV format is not supported for Kafka so far. Only formats that are tagged with `DeserializationSchema` in the docs are supported.

Right now you have to implement your own DeserializationSchemaFactory or use JSON or Avro.
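A custom DeserializationSchemaFactory ultimately hands Flink a `DeserializationSchema`, whose `deserialize(byte[] message)` method turns each Kafka record into a value. The sketch below shows only that per-record step for CSV, as plain Java with no Flink dependency. `CsvLineDeserializer` and `FieldType` are hypothetical names, the output is an `Object[]` rather than a Flink row type, and quoted fields or escaped delimiters are deliberately not handled.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

/**
 * Hypothetical sketch of the per-record work a custom CSV deserialization schema
 * would do: raw Kafka message bytes -> typed field values. It only mirrors the
 * shape of deserialize(byte[]); it does not implement Flink's interface.
 */
class CsvLineDeserializer {

    /** Hypothetical set of supported column types. */
    enum FieldType { STRING, INT, DOUBLE }

    private final List<FieldType> fieldTypes;
    private final String delimiter;

    CsvLineDeserializer(List<FieldType> fieldTypes, String delimiter) {
        this.fieldTypes = fieldTypes;
        this.delimiter = delimiter;
    }

    /** Decodes one message (one CSV line, no quoting) into typed field values. */
    Object[] deserialize(byte[] message) {
        // trim() also drops a trailing newline if the producer appended one
        String line = new String(message, StandardCharsets.UTF_8).trim();
        // limit -1 keeps trailing empty fields so the arity check is meaningful
        String[] parts = line.split(Pattern.quote(delimiter), -1);
        if (parts.length != fieldTypes.size()) {
            throw new IllegalArgumentException(
                    "Expected " + fieldTypes.size() + " fields but got " + parts.length + ": " + line);
        }
        Object[] row = new Object[parts.length];
        for (int i = 0; i < parts.length; i++) {
            switch (fieldTypes.get(i)) {
                case INT:    row[i] = Integer.parseInt(parts[i].trim()); break;
                case DOUBLE: row[i] = Double.parseDouble(parts[i].trim()); break;
                default:     row[i] = parts[i]; break;
            }
        }
        return row;
    }

    public static void main(String[] args) {
        CsvLineDeserializer deserializer = new CsvLineDeserializer(
                List.of(FieldType.STRING, FieldType.INT, FieldType.DOUBLE), ",");
        Object[] row = deserializer.deserialize("alice,42,3.5".getBytes(StandardCharsets.UTF_8));
        System.out.println(Arrays.toString(row)); // prints [alice, 42, 3.5]
    }
}
```

Using this from Flink would still require wrapping it in a real `DeserializationSchema` implementation and exposing it through a factory that Flink can discover; those details depend on the Flink version and are not shown here.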

You can follow [1] to get informed once the CSV format is supported. I'm sure it will be merged for Flink 1.8.

Regards,

Timo

On 11.12.18 at 10:41, Marvin777 wrote:


Return to (DEPRECATED) Apache Flink User Mailing List archive.
