savepoint failed for finished tasks


Hi,

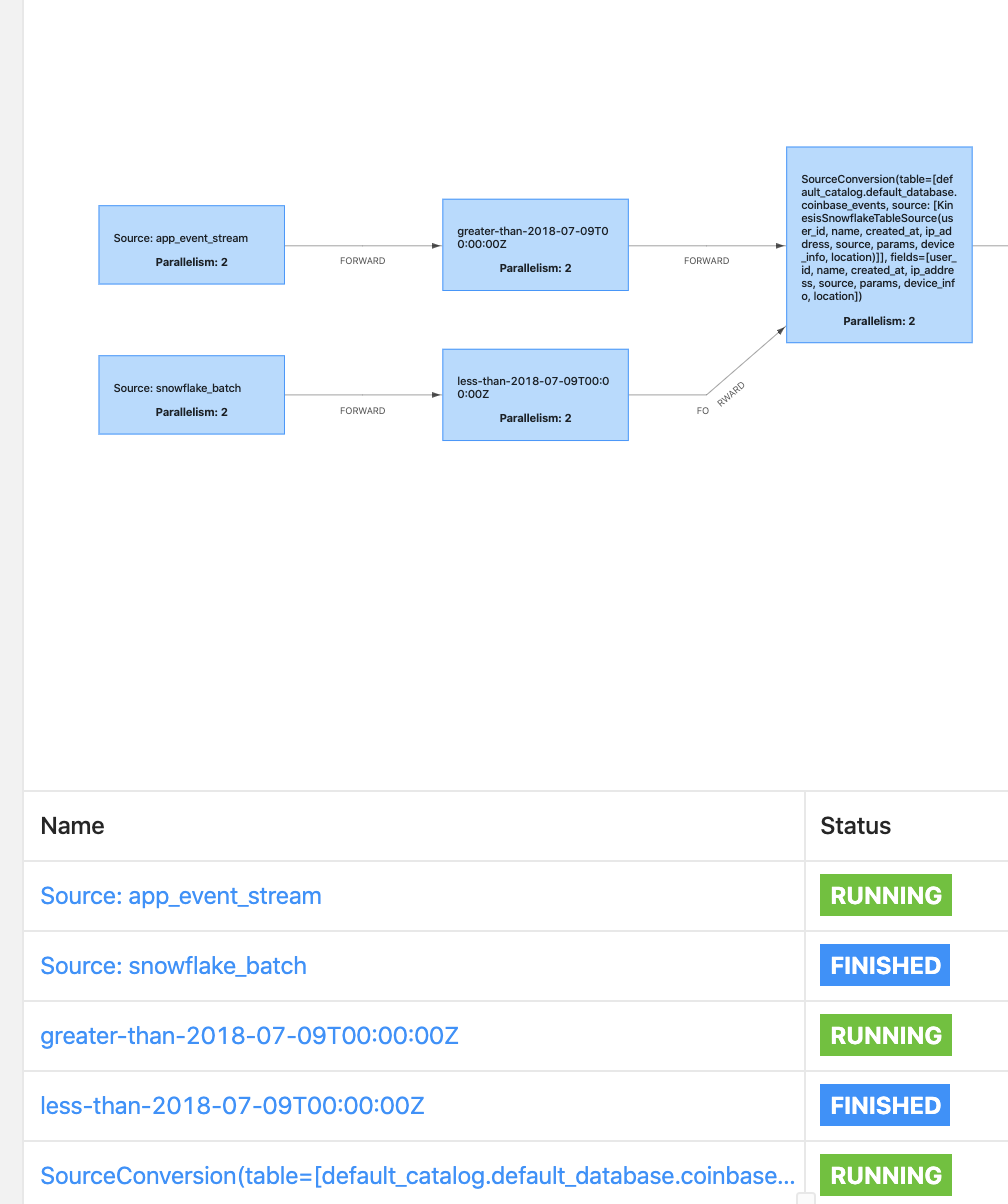

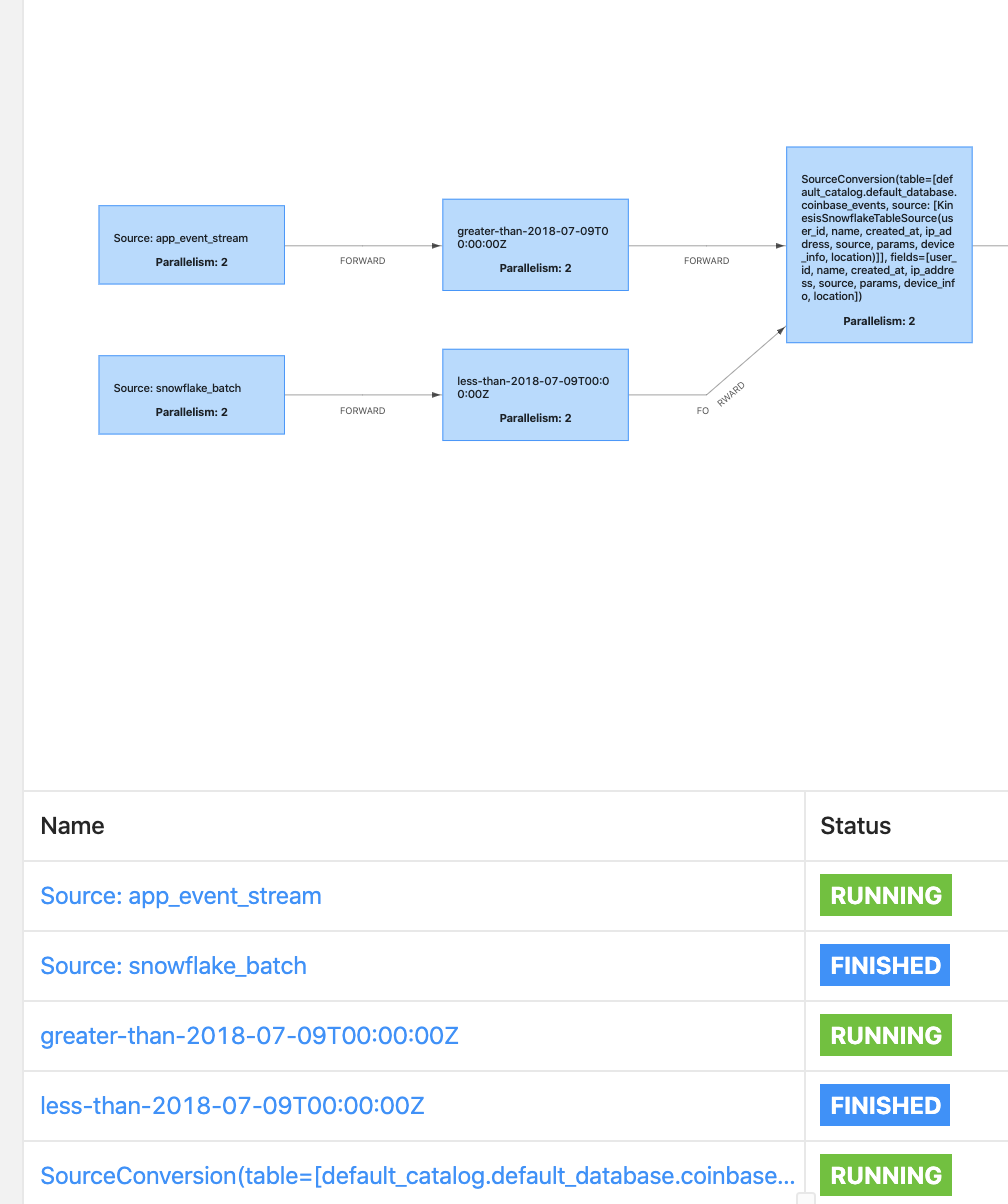

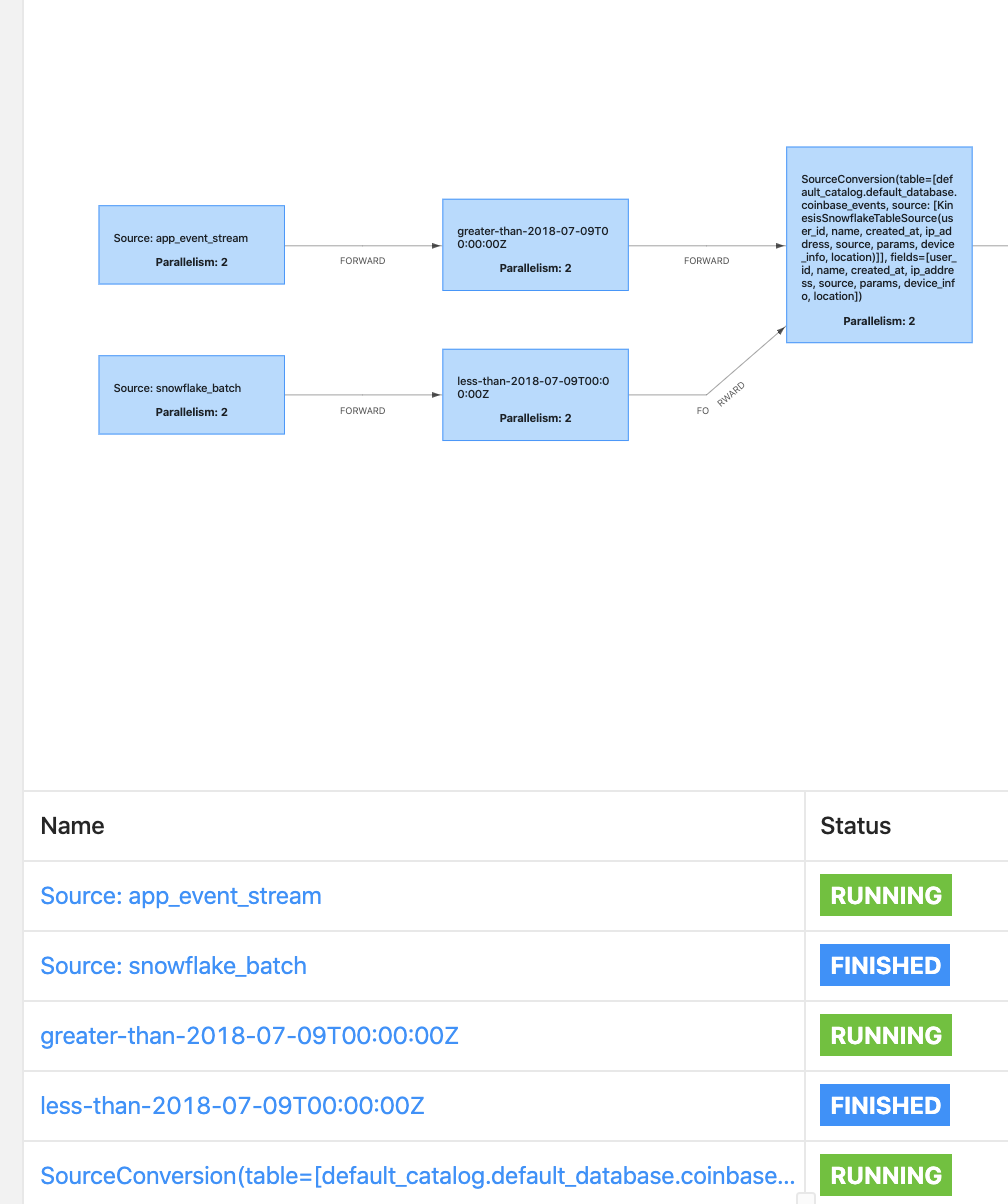

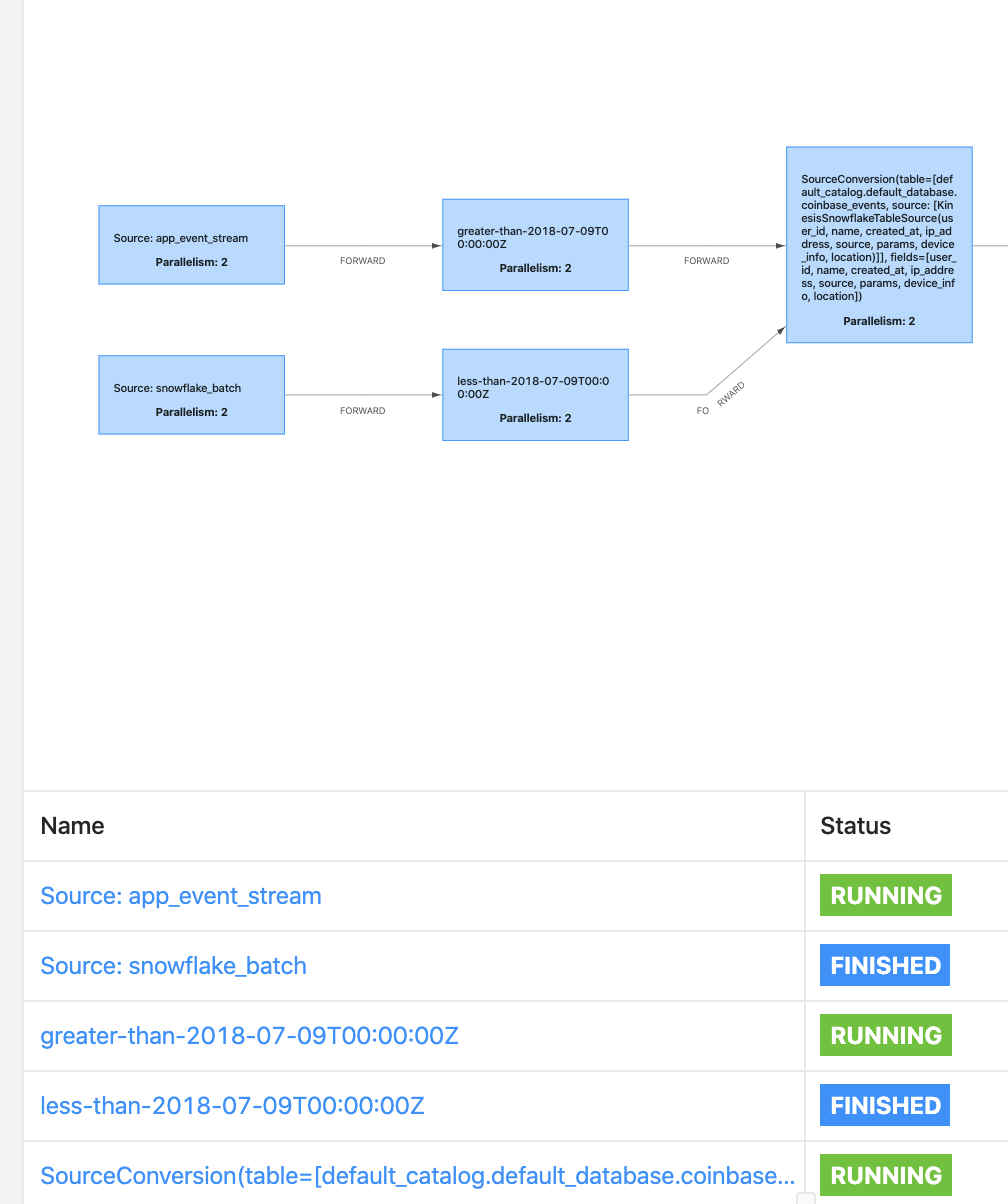

I couldn't make a savepoint for the following graph (see the attached images). It fails with this stack trace:

    Caused by: java.util.concurrent.CompletionException: java.util.concurrent.CompletionException: org.apache.flink.runtime.checkpoint.CheckpointException: Failed to trigger savepoint. Failure reason: Not all required tasks are currently running.

Here is my Snowflake source definition:

    val batch = env.createInput(JDBCInputFormat.buildJDBCInputFormat
      .setDrivername(options.driverName)
      .setDBUrl(options.dbUrl)
      .setUsername(options.username)
      .setPassword(options.password)
      .setQuery(query)
      .setRowTypeInfo(getInputRowTypeInfo)
      .setFetchSize(fetchSize)
      .setParametersProvider(new GenericParameterValuesProvider(buildQueryParams(parallelism)))
      .finish, getReturnType)

where query is something like

    select * from events where timestamp > t0 and timestamp < t1

My theory is that the snowflake_batch_source task changes to FINISHED once it has read all the data, and the savepoint then fails.

Is there any way to make a savepoint in such cases?

Thanks,
Fanbin
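The theory above lines up with the failure reason in the stack trace ("Not all required tasks are currently running"). As a rough illustration only, the rule can be modelled in plain Scala. Everything in this sketch is hypothetical: `TaskState`, `Task`, `triggerSavepoint`, and the `downstream_operator` task name are made up for the example and are not Flink classes or Flink's actual implementation.

```scala
// Toy model only: these types are hypothetical and are NOT Flink classes.
object SavepointPrecondition {
  sealed trait TaskState
  case object Running extends TaskState
  case object Finished extends TaskState

  final case class Task(name: String, state: TaskState)

  // Models the rule described in the error message: a savepoint can be
  // triggered only while every task of the job is still running.
  def triggerSavepoint(tasks: Seq[Task]): Either[String, String] = {
    val notRunning = tasks.filter(_.state != Running)
    if (notRunning.isEmpty) Right("savepoint triggered")
    else Left(
      "Not all required tasks are currently running: " +
        notRunning.map(_.name).mkString(", "))
  }

  def main(args: Array[String]): Unit = {
    // The bounded source has read all its data and is FINISHED,
    // while a (hypothetical) downstream operator is still running.
    val job = Seq(
      Task("snowflake_batch_source", Finished),
      Task("downstream_operator", Running))
    println(triggerSavepoint(job))
  }
}
```

In this model, a single finished source is enough to reject the savepoint, even though the rest of the job is still running.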

Re: savepoint failed for finished tasks


Hi,

Currently, checkpoints and savepoints only work if all operators/tasks are still running. There is an issue [1] tracking this.

Best,
Congxian

Fanbin Bu <[hidden email]> wrote on Fri, Jan 17, 2020 at 6:49 AM:


Hi Fanbin,

Congxian is right. Flink does not currently support checkpoints or savepoints for finite stream jobs.

Thanks,
Biao /'bɪ.aʊ/

On Fri, 17 Jan 2020 at 16:26, Congxian Qiu <[hidden email]> wrote:


Do you have a rough ETA for when this issue will be resolved?

Thanks,
Fanbin

On Fri, Jan 17, 2020 at 12:32 AM Biao Liu <[hidden email]> wrote:
