multiple flink applications on yarn are shown in one application.



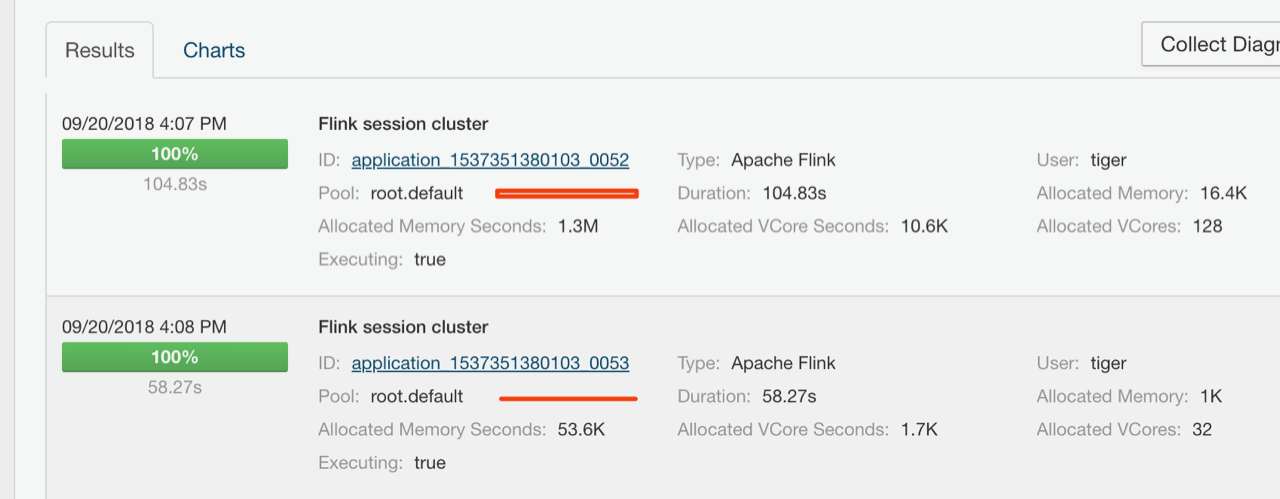

I am new to Flink. I am running Flink on YARN in per-job mode and submitted two applications:

> /data/apps/opt/flink/bin/flink run -m yarn-cluster /data/apps/opt/fluxion/fluxion-flink.jar /data/apps/conf/fluxion//job_submit_rpt_company_user_s.conf

> /data/apps/opt/flink/bin/flink run -m yarn-cluster /data/apps/opt/fluxion/fluxion-flink.jar /data/apps/conf/fluxion//job_submit_rpt_company_performan_.conf

I can see these two applications on YARN, but the application name is "Flink session cluster" rather than "Flink per-job". Is that expected? In addition, each YARN application shows both Flink jobs. Is that expected too?

Finally, I wanted to stop one job, so I killed one YARN application. That application was killed, but both Flink jobs restarted in the other YARN application. I want to kill one job and keep the other running. In my understanding, each YARN application should contain exactly one job, and that job should be killed when its YARN application is killed.

I think the problem is that these two applications should be "Flink per-job" rather than "Flink session cluster", but I don't know why they became "Flink session cluster". Can anybody help? Thanks.

Re: multiple flink applications on yarn are shown in one application.


Hi,

Currently, Flink still uses session mode under the hood if you submit the job in attached mode. The reason is that the job could consist of multiple parts that have to run one after the other. This will change in the future, and it should not happen if you submit the job in detached mode.

Best,
Stefan
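As a minimal sketch of the detached submission described above, the hypothetical helper below wraps the same `flink run -m yarn-cluster` command from the question and adds `-d`, so that each conf file should end up in its own per-job YARN application. The `submit_detached` function name and the `DRY_RUN` switch are illustrative assumptions, not part of Flink; `FLINK_HOME` and `JOB_JAR` default to the paths from the question.

```shell
#!/bin/sh
# Hypothetical helper (not part of Flink): submit one conf-driven job
# in detached per-job mode. Defaults are the paths used in the question.
FLINK_HOME="${FLINK_HOME:-/data/apps/opt/flink}"
JOB_JAR="${JOB_JAR:-/data/apps/opt/fluxion/fluxion-flink.jar}"

submit_detached() {
  # -m yarn-cluster asks for a dedicated YARN application for this job;
  # -d detaches the client, avoiding the session-mode fallback of attached mode.
  # "$1" (the conf file) is expanded before `set --` replaces the arguments.
  set -- "$FLINK_HOME/bin/flink" run -m yarn-cluster -d "$JOB_JAR" "$1"
  if [ -n "${DRY_RUN:-}" ]; then
    # Only print the command, e.g. to double-check which conf each job gets.
    printf '%s\n' "$*"
  else
    "$@"
  fi
}
```

Usage would be one call per conf file, e.g. `submit_detached /data/apps/conf/fluxion//job_submit_rpt_company_user_s.conf`. To stop only that job afterwards, `yarn application -kill <applicationId>` with the id YARN reports for it should leave the other application untouched, assuming each job really runs in its own per-job cluster.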


Re: multiple flink applications on yarn are shown in one application.


Thank you for your help.

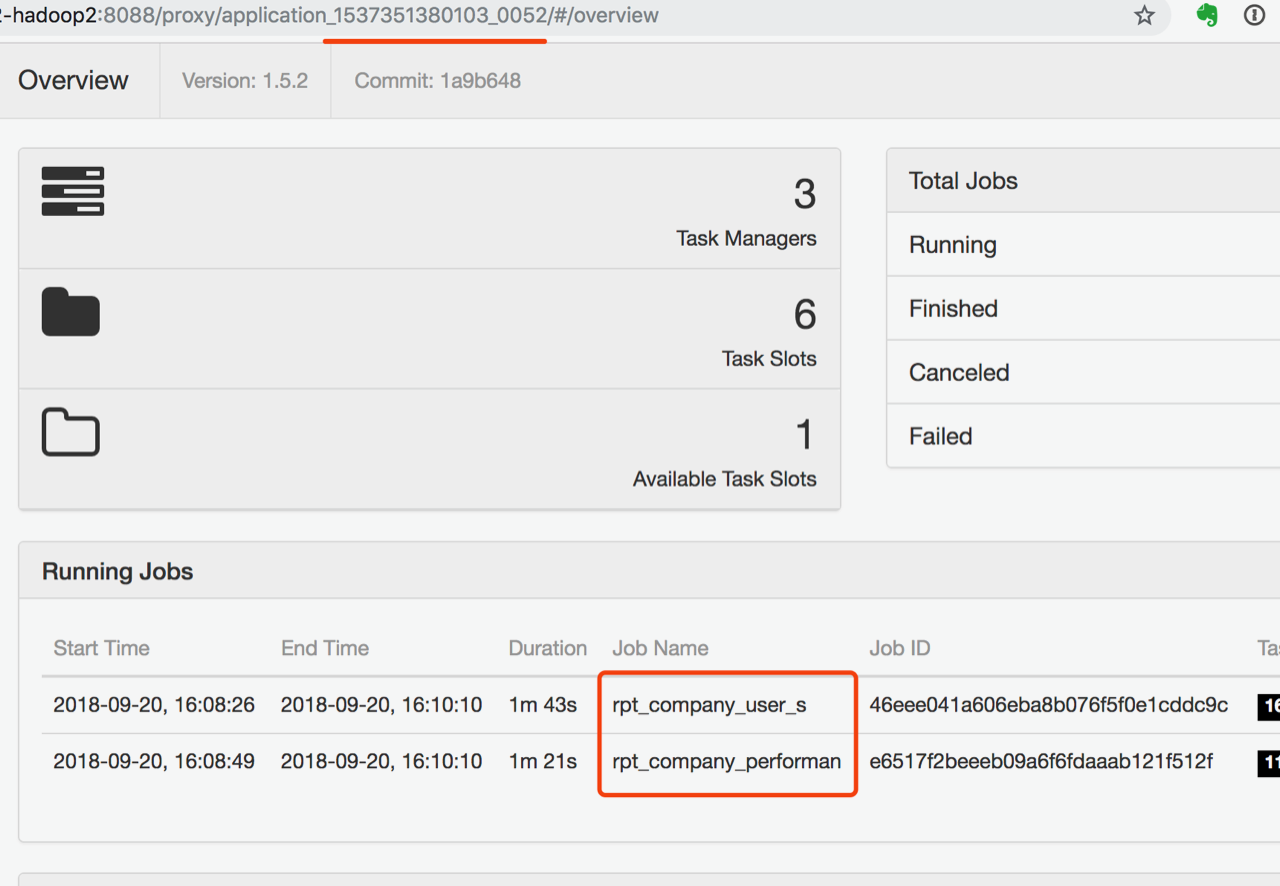

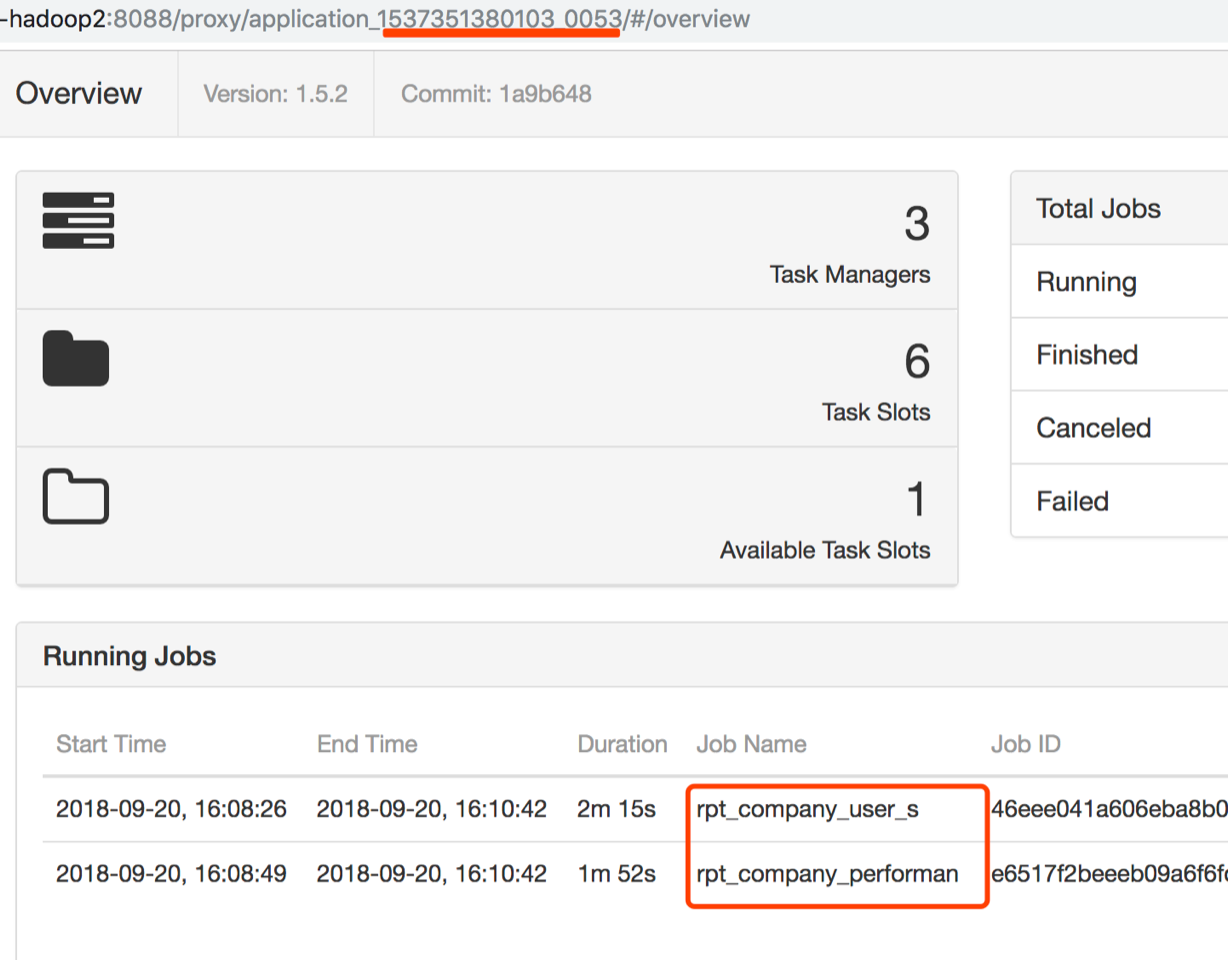

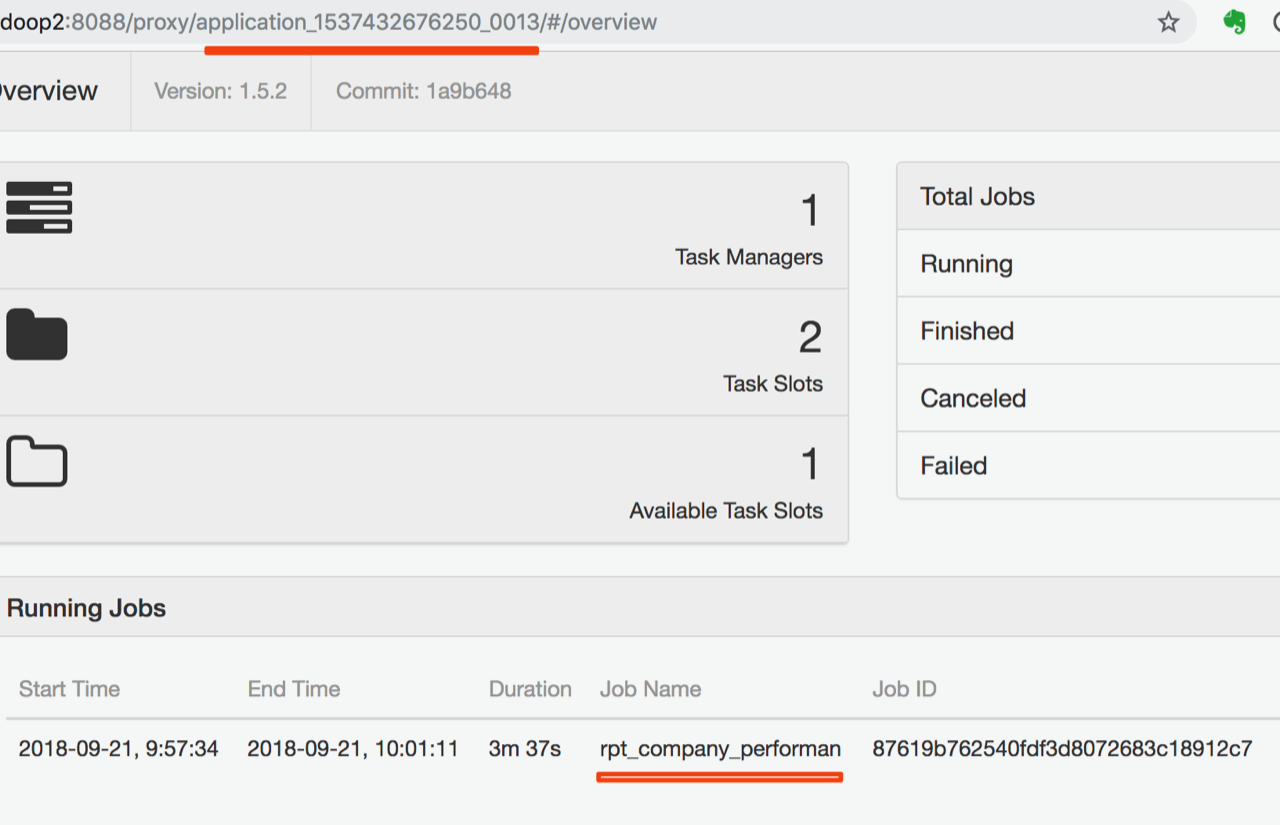

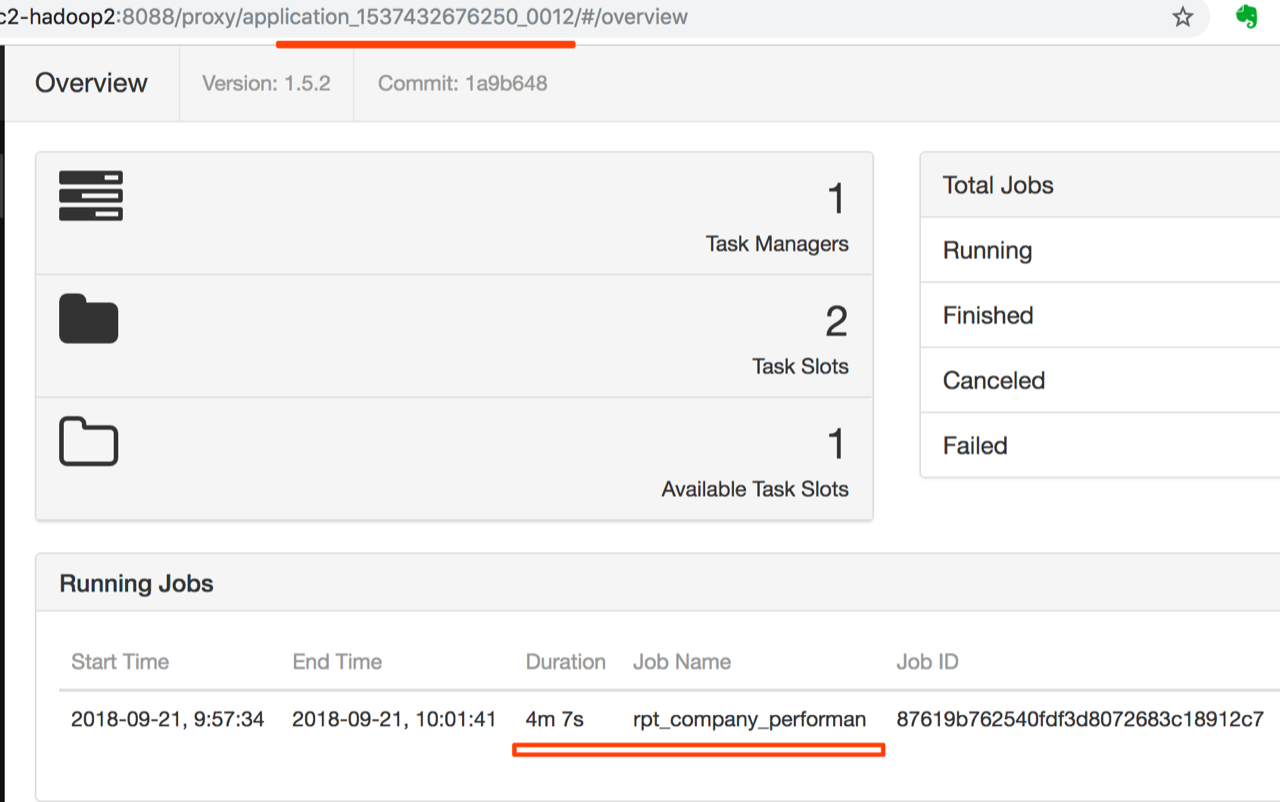

It is per-job mode if I submit the jobs detached. However, I submitted these two jobs using the commands below:

> /data/apps/opt/flink/bin/flink run -m yarn-cluster -d /data/apps/opt/fluxion/fluxion-flink.jar /data/apps/conf/fluxion//job_submit_rpt_company_performan.conf

> /data/apps/opt/flink/bin/flink run -m yarn-cluster -d /data/apps/opt/fluxion/fluxion-flink.jar /data/apps/conf/fluxion//job_submit_rpt_company_user_s.conf

When I browse the YARN applications, as the pictures show, I get two applications (0013 and 0012), but both applications run the same job. I can't find the job I submitted second. The job in application_XXX_0013 should be rpt_company_user_s. This does not happen in session mode.

Best,
LX
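To check which Flink job actually runs inside each YARN application (for example the 0012 and 0013 applications above), one option is to list the running applications with the YARN CLI and ask Flink about each one. This sketch assumes that `yarn application -list` prints one row per application with the application id in the first column, and that `flink list -yid <applicationId>` can attach to a per-job cluster in your Flink version; the function names are hypothetical.

```shell
#!/bin/sh
FLINK_HOME="${FLINK_HOME:-/data/apps/opt/flink}"

# Read `yarn application -list` output on stdin and print only the application ids.
# Header lines ("Total number of applications ...", "Application-Id ...") are
# skipped because their first field does not start with "application_".
extract_app_ids() {
  awk '$1 ~ /^application_/ { print $1 }'
}

# Hypothetical helper: for every RUNNING YARN application, ask Flink which
# jobs it hosts. On a shared cluster this also queries non-Flink applications,
# so further filtering may be needed.
list_jobs_per_app() {
  yarn application -list -appStates RUNNING 2>/dev/null | extract_app_ids |
    while read -r app; do
      echo "== $app"
      # </dev/null keeps the flink client from consuming the loop's stdin.
      "$FLINK_HOME/bin/flink" list -yid "$app" </dev/null
    done
}
```

If both applications report the same job name, the problem is likely in how the second submission picks its job rather than in YARN itself.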


Re: multiple flink applications on yarn are shown in one application.


Hi,

I see from your commands that you are using the same jar file twice, so first I want to double-check: how do you determine which job should be started? I am also adding Till (in CC); depending on your answer to my first question, he might have some additional thoughts.

Best,
Stefan


Re: multiple flink applications on yarn are shown in one application.


Thanks for your help.

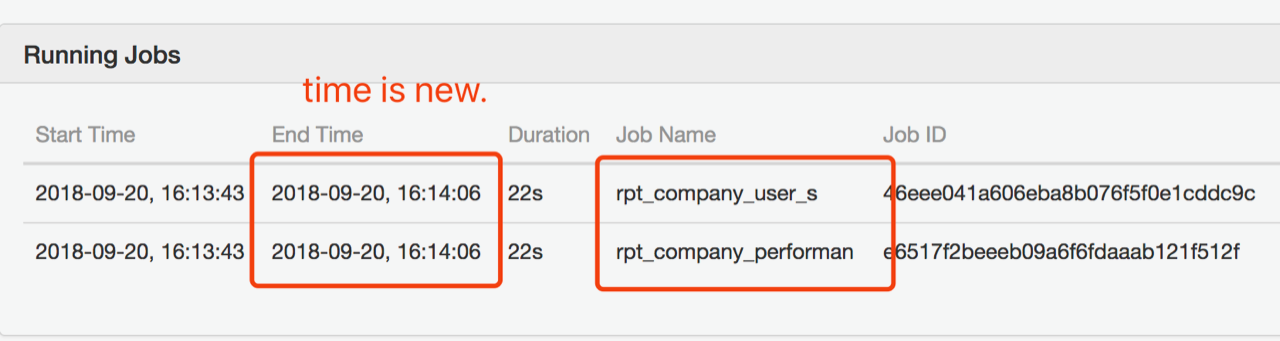

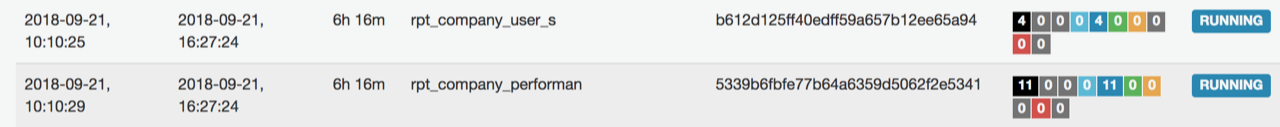

All jobs are defined in the conf file. The jar reads the conf file, constructs the job, and executes it. The pictures show the jobs in my standalone cluster, where everything works fine. I am now moving from the standalone cluster to YARN.


Source: (DEPRECATED) Apache Flink User Mailing List archive.
