low performance in running queries

low performance in running queries

|

Hi all,

I am running Flink on a standalone cluster and getting very long execution time for the streaming queries like WordCount for a fixed text file. My VM runs on a Debian 10 with 16 cpu cores and 32GB of RAM. I have a text file with size of 2GB. When I run the Flink on a standalone cluster, i.e., one JobManager and one taskManager with 25GB of heapsize, it took around two hours to finish counting this file while a simple python script can do it in around 7 minutes. Just wondering what is wrong with my setup. I ran the experiments on a cluster with six taskManagers, but I still get very long execution time like 25 minutes or so. I tried to increase the JVM heap size to have lower execution time but it did not help. I attached the log file and the Flink configuration file to this email. Best, Habib |

Re: low performance in running queries

|

The reason might be the parallelism of your task is only 1, that's too low. See [1] to specify proper parallelism for your job, and the execution time should be reduced significantly. Best Regards, Zhenghua Gao On Tue, Oct 29, 2019 at 9:27 PM Habib Mostafaei <[hidden email]> wrote: Hi all, |

Re: low performance in running queries

|

Thanks Gao for the reply. I used the parallelism parameter with

different values like 6 and 8 but still the execution time is not

comparable with a single threaded python script. What would be the

reasonable value for the parallelism? Best, Habib On 10/30/2019 1:17 PM, Zhenghua Gao

wrote:

-- Habib Mostafaei, Ph.D. Postdoctoral researcher TU Berlin, FG INET, MAR 4.003 Marchstraße 23, 10587 Berlin |

Re: low performance in running queries

|

I haven't run any benchmarks with Flink or even used it enough to directly help with your question, however I suspect that the following article might be relevant: Given the computation you're performing is trivial, it's possible that the additional overhead of serialisation, interprocess communication, state management etc that distributed systems like Flink require are dominating the runtime here. 2 hours (or even 25 minutes) still seems too long to me however, so hopefully it really is just a configuration issue of some sort. Either way, if you do figure this out or anyone with good knowledge of the article above in relation to Flink is able to give their thoughts, I'd be very interested in hearing more. Regards, Chris ------ Original Message ------

From: "Habib Mostafaei" <[hidden email]>

To: "Zhenghua Gao" <[hidden email]>

Cc: "user" <[hidden email]>; "Georgios Smaragdakis" <[hidden email]>; "Niklas Semmler" <[hidden email]>

Sent: 30/10/2019 12:25:28

Subject: Re: low performance in running queries

|

Re: low performance in running queries

|

Hi,

I would also suggest to just attach a code profiler to the process during those 2 hours and gather some results. It might answer some questions what is taking so long time. Piotrek

|

Re: low performance in running queries

|

In reply to this post by Habib Mostafaei

I think more runtime information would help figure out where the problem is. 1) how many parallelisms actually working 2) the metrics for each operator 3) the jvm profiling information, etc Best Regards, Zhenghua Gao On Wed, Oct 30, 2019 at 8:25 PM Habib Mostafaei <[hidden email]> wrote:

|

Re: low performance in running queries

|

I enclosed all logs from the run and for this run I used

parallelism one. However, for other runs I checked and found that

all parallel workers were working properly. Is there a simple way

to get profiling information in Flink? Best, Habib On 10/31/2019 2:54 AM, Zhenghua Gao

wrote:

|

Re: low performance in running queries

2019-10-30 15:59:52,122 INFO org.apache.flink.runtime.taskmanager.Task - Split Reader: Custom File Source -> Flat Map (1/1) (6a17c410c3e36f524bb774d2dffed4a4) switched from DEPLOYING to RUNNING. 2019-10-30 17:45:10,943 INFO org.apache.flink.runtime.taskmanager.Task - Split Reader: Custom File Source -> Flat Map (1/1) (6a17c410c3e36f524bb774d2dffed4a4) switched from RUNNING to FINISHED.It's surprise that the source task uses 95 mins to read a 2G file. Could you give me your code snippets and some sample lines of the 2G file? I will try to reproduce your scenario and dig the root causes. Best Regards, Zhenghua Gao On Thu, Oct 31, 2019 at 9:05 PM Habib Mostafaei <[hidden email]> wrote:

|

Re: low performance in running queries

|

I used streaming WordCount provided by Flink and the file contains

text like "This is some text...". I just copied several times.

Best, Habib On 11/1/2019 6:03 AM, Zhenghua Gao

wrote:

|

Re: low performance in running queries

|

In reply to this post by Habib Mostafaei

Hi,

> Is there a simple way to get profiling information in Flink?

Flink doesn’t provide any special tooling for that. Just use your chosen profiler, for example: Oracle’s Mission Control (free on non production clusters, no need to install anything if already using Oracle’s JVM), VisualVM (I think free), YourKit (paid). For each one of them there is a plenty of online support how to use them both for local and remote profiling. Piotrek

|

Re: low performance in running queries

|

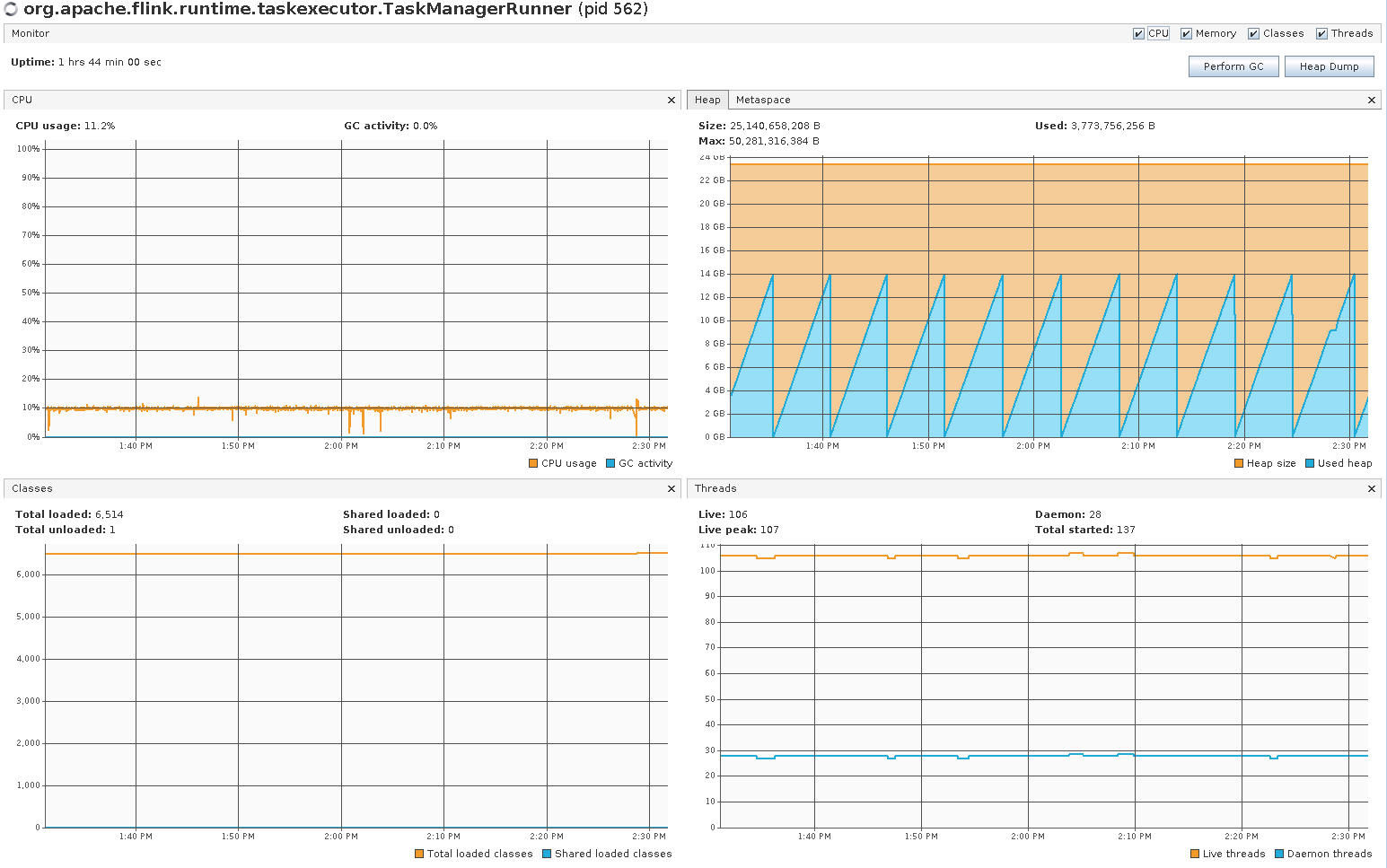

Hi Piotrek, Thanks for the list of profilers. I used VisualVM and here is the

resource usage for taskManager.

Habib

On 11/1/2019 9:48 AM, Piotr Nowojski

wrote:

Hi, -- Habib Mostafaei, Ph.D. Postdoctoral researcher TU Berlin, FG INET, MAR 4.003 Marchstraße 23, 10587 Berlin |

Re: low performance in running queries

|

Hi,

More important would be the code profiling output. I think VisualVM allows to share the code profiling result as “snapshots”? If you could analyse or share this, it would be helpful. From the attached screenshot the only thing that is visible is that there are no GC issues, and secondly the application is running only on one (out of 10?) CPU cores. Which hints one obvious way how to improve the performance - scale out. However the WordCount example might not be the best for this, as I’m pretty sure its source is fundamentally not parallel. Piotrek

|

Re: low performance in running queries

|

In reply to this post by Habib Mostafaei

Hi, I ran the streaming WordCount with a 2GB text file(copied /usr/share/dict/words 400 times) last weekend and didn't reproduce your result(16 minutes in my case). But i find some clues may help you: The streaming WordCount job would output all intermedia result in your output file(if specified) or taskmanager.out. It's large (about 4GB in my case) and causes the disk writes high. Best Regards, Zhenghua Gao On Fri, Nov 1, 2019 at 4:40 PM Habib Mostafaei <[hidden email]> wrote:

|

Re: low performance in running queries

|

In reply to this post by Piotr Nowojski-3

Hi, On 11/1/2019 4:40 PM, Piotr Nowojski

wrote:

Hi,Enclosed is a snapshot of VisualVM.

Yes, your are right that the source is not parallel. Checking the timeline of execution shows that the source operation is done in less than a second while Map and Reduce operations take long running time. Habib

|

Re: low performance in running queries

|

Hi,

Unfortunately your VisualVM snapshot doesn’t contain the profiler output. It should look like this [1]. > Checking the timeline of execution shows that the source operation is done in less than a second while Map and Reduce operations take long running time. It could well be that the overhead comes for example from the state accesses, especially if you are using RocksDB. Still would be interesting to see the call stack that’s using the most CPU time. Piotrek

|

| Free forum by Nabble | Edit this page |