jdbc.JDBCInputFormat

`import org.apache.flink.api.scala.io.jdbc.JDBCInputFormat`

Then, I can't use:

I tried to download the code from git and recompile, also


As the exception says, the class `org.apache.flink.api.scala.io.jdbc.JDBCInputFormat` does not exist. There is no Scala implementation of this class, but you can use Java classes in Scala. You have to do:

`import org.apache.flink.api.java.io.jdbc.JDBCInputFormat`

2016-10-07 21:38 GMT+02:00 Alberto Ramón <[hidden email]>:


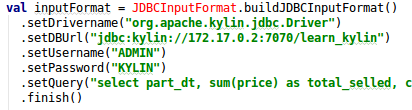

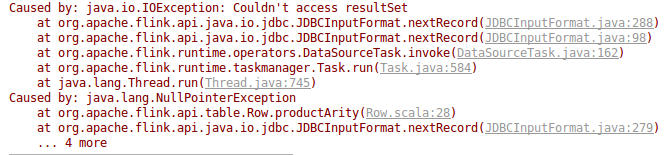

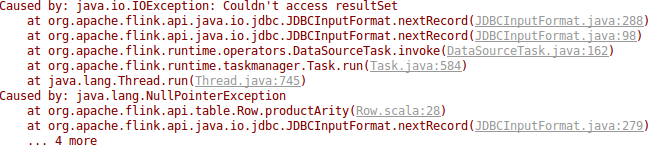

`import org.apache.flink.api.scala._`

`var stringColum: TypeInformation[Int] = createTypeInformation[Int]`

The error is:

(It isn't a connection problem, because if I turn off the database the error is different: "Reused Connection".)

Can you see a problem in my code? (I found the unresolved issue FLINK-4108; I don't know if it is related.)

BR, Alberto

2016-10-07 21:46 GMT+02:00 Fabian Hueske <[hidden email]>:


I think you already found the correct issue describing your problem (FLINK-4108). This should get higher priority.

Timo

On 09/10/16 at 13:27, Alberto Ramón wrote:

--
Kind Regards
Timo Walther
Follow me: @twalthr
https://www.linkedin.com/in/twalthr

It's from June and unassigned :( Is there a workaround?

2016-10-10 11:04 GMT+02:00 Timo Walther <[hidden email]>:


I could reproduce the error locally. I will prepare a fix for it.

Timo

On 10/10/16 at 11:54, Alberto Ramón wrote:


I have opened a PR (https://github.com/apache/flink/pull/2619). It would be great if you could try it and comment on whether it solves your problem.

Timo

On 10/10/16 at 17:48, Timo Walther wrote:


I will check it tonight. Thanks.

2016-10-11 11:24 GMT+02:00 Timo Walther <[hidden email]>:


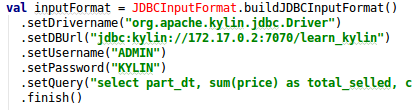

Hello,

I downloaded and compiled your branch:

`Scala: String = version 2.11.8`

And the code is attached:

2016-10-11 12:01 GMT+02:00 Alberto Ramón <[hidden email]>:


Hi guys,

I am facing the following error message in a Flink Scala JDBC word count. Could you please advise me on this?

Information:12/10/2016, 10:43 - Compilation completed with 2 errors and 0 warnings in 1s 903ms
/Users/janaidu/faid/src/main/scala/fgid/JDBC.scala
Error:(17, 67) can't expand macros compiled by previous versions of Scala
`val stringColum: TypeInformation[Int] = createTypeInformation[Int]`
Error:(29, 33) can't expand macros compiled by previous versions of Scala
`val dataset =env.createInput(inputFormat)`

------------ code

`package DataSources`

---------pom.xml

`<?xml version="1.0" encoding="UTF-8"?>`

Cheers
S


Hi Alberto,

You need to check out the branch and run `mvn clean install` to put this version in your local Maven repo; the `pom.xml` of your project should then point to Flink version `1.2-SNAPSHOT`.

Timo

On 11/10/16 at 19:47, Alberto Ramón wrote:


Hi Sunny,

You are using different versions of Flink. `flink-parent` is set to `1.2-SNAPSHOT` but the property `flink.version` is still `1.1.2`. Hope that helps.

Timo

On 12/10/16 at 11:49, sunny patel wrote:


Thanks, Timo. I have updated `flink-parent` and the Flink version to `1.2-SNAPSHOT`, but I am still facing the version errors. Could you please advise me on this?

Information:12/10/2016, 11:34 - Compilation completed with 2 errors and 0 warnings in 7s 284ms
/Users/janaidu/faid/src/main/scala/fgid/JDBC.scala
Error:(17, 67) can't expand macros compiled by previous versions of Scala
`val stringColum: TypeInformation[Int] = createTypeInformation[Int]`
Error:(29, 33) can't expand macros compiled by previous versions of Scala
`val dataset =env.createInput(inputFormat)`

========= POM.XML FILE

`<?xml version="1.0" encoding="UTF-8"?>`

==========

Thanks
S

On Wed, Oct 12, 2016 at 11:19 AM, Timo Walther <[hidden email]> wrote:


Doesn't this just mean that there is a Scala version mismatch? I.e., Flink was compiled with 2.10 but you run it with 2.11?
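
For reference, the two mismatches raised in this thread (Flink version and Scala version) can both be pinned in one place in the project's `pom.xml`. This is only a sketch, not the poster's actual file, which is not shown in the thread. The property name `scala.binary.version` and the choice of `flink-scala` and `flink-clients` as dependencies are assumptions made for illustration, and `2.11` is just an example value. Flink publishes its Scala-dependent artifacts with a Scala suffix (`_2.10`, `_2.11`), so the suffix has to match the Scala version the project is compiled with.

```xml
<!-- Sketch only: property names and the dependency list are assumptions -->
<properties>
  <!-- One Flink version for every Flink artifact in the project -->
  <flink.version>1.2-SNAPSHOT</flink.version>
  <!-- Must match the Scala version used to compile the project -->
  <scala.binary.version>2.11</scala.binary.version>
</properties>

<dependencies>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-scala_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
  </dependency>
  <dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-clients_${scala.binary.version}</artifactId>
    <version>${flink.version}</version>
  </dependency>
</dependencies>
```

Whatever value is chosen, the locally installed `1.2-SNAPSHOT` artifacts need to have been built for that same Scala version; otherwise the "can't expand macros" error is likely to persist.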

On 12.10.2016 12:39, sunny patel wrote:


Are you sure that the same Scala version is used everywhere? Maybe it helps to clean your local Maven repo and build the version again.

On 12/10/16 at 12:39, sunny patel wrote:


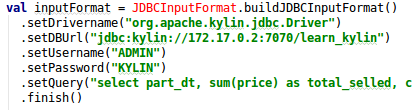

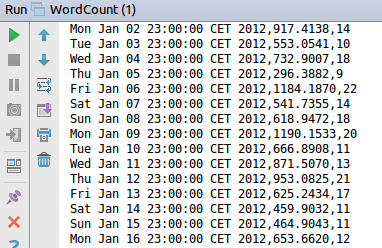

I tested with Long, BigDecimal and Date types:

Have a nice day, Alb

2016-10-12 13:37 GMT+02:00 Timo Walther <[hidden email]>:
