Sensor's Data Aggregation Out of order with EventTime.


Hi all,

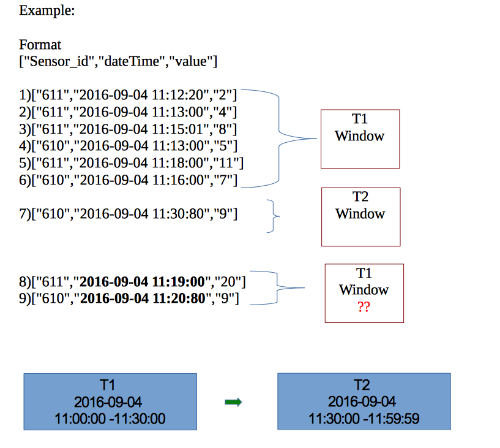

We have some sensors that send data into Kafka. Each Kafka partition has a set of different sensors writing data into it. We consume the data from Flink. We want to sum up the values in half-hour intervals in event time (extracted from the data). The result is a stream keyed by sensor_id with a timeWindow of 30 minutes.

Situation: Usually, when you deal with sensor data, you accept a priori that your data will be ordered by timestamp for each sensor ID. A characteristic example of arriving data is shown in the attached image.

Problem: A generated watermark closes the windows before all nodes have finished (record 7).

Question: Is this scenario possible with EventTime, or do we need to choose another technique? We thought that it could be done by using one Kafka partition per sensor, but this would result in thousands of partitions in Kafka, which may be inefficient. Could you propose a possible solution for this kind of arriving data?
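
To make the intended aggregation concrete, here is a minimal sketch in plain Python (not Flink code) of how records map to 30-minute tumbling event-time windows keyed by sensor_id. The function names and the sample records are hypothetical and chosen only for illustration; watermarks are ignored here.

```python
from collections import defaultdict

WINDOW_MS = 30 * 60 * 1000  # 30-minute tumbling windows
MIN = 60 * 1000             # one minute in milliseconds


def window_start(event_ts_ms):
    """Start of the tumbling window that contains the given event timestamp."""
    return event_ts_ms - event_ts_ms % WINDOW_MS


def sum_per_sensor_window(records):
    """Sum values per (sensor_id, window_start).

    records: iterable of (sensor_id, event_ts_ms, value) tuples.
    """
    totals = defaultdict(int)
    for sensor_id, event_ts_ms, value in records:
        totals[(sensor_id, window_start(event_ts_ms))] += value
    return dict(totals)


# Hypothetical sample: two sensors sharing one partition.
records = [
    ("s1", 5 * MIN, 2),
    ("s2", 10 * MIN, 7),
    ("s1", 29 * MIN, 3),
    ("s1", 30 * MIN, 4),  # first record of the next window for s1
]
totals = sum_per_sensor_window(records)
```

Each window covers the half-open interval from its start up to, but not including, start plus 30 minutes, so the record at exactly 30 minutes belongs to the second window.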

Re: Sensor's Data Aggregation Out of order with EventTime.


This is a common problem in event time, referred to as late data. You can 1) change the watermark generation code, or 2) allow elements to be late and re-trigger the window execution. For 2), see https://ci.apache.org/projects/flink/flink-docs-master/dev/windows.html#dealing-with-late-data

-Max

On Thu, Oct 6, 2016 at 10:53 AM, Steve <[hidden email]> wrote:

> Hi all,
>
> We have some sensors that send data into Kafka. Each Kafka partition has a set of different sensors writing data into it. We consume the data from Flink. We want to sum up the values in half-hour intervals in event time (extracted from the data). The result is a stream keyed by sensor_id with a timeWindow of 30 minutes.
>
> Situation: Usually, when you deal with sensor data, you accept a priori that your data will be ordered by timestamp for each sensor ID. A characteristic example of arriving data is described below.
>
> Problem: A generated watermark closes the windows before all nodes have finished (record 7).
>
> <http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/file/n9361/flinkExample.png>
>
> Question: Is this scenario possible with EventTime, or do we need to choose another technique? We thought that it could be done by using one Kafka partition per sensor, but this would result in thousands of partitions in Kafka, which may be inefficient. Could you propose a possible solution for this kind of arriving data?
>
> --
> View this message in context: http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/Sensor-s-Data-Aggregation-Out-of-order-with-EventTime-tp9361.html
> Sent from the Apache Flink User Mailing List archive at Nabble.com.
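
The two options from the reply can be compared with a small plain-Python simulation. This is a simplified model, not Flink API: it assumes the watermark trails the highest timestamp seen so far by a configurable bound and is updated after every record (Flink emits watermarks periodically), and it treats a record as dropped once the watermark has passed the end of its window plus the allowed lateness. The function name `simulate`, its parameters, and the sample arrivals are hypothetical.

```python
from collections import defaultdict

WINDOW_MS = 30 * 60 * 1000  # 30-minute tumbling windows
MIN = 60 * 1000             # one minute in milliseconds


def simulate(records, out_of_orderness_ms=0, allowed_lateness_ms=0):
    """Sum values per (sensor_id, window_start); return (sums, dropped).

    records: (sensor_id, event_ts_ms, value) tuples in arrival order.
    """
    sums = defaultdict(int)
    dropped = []
    max_ts = None  # highest event timestamp seen so far, across all sensors
    for sensor_id, ts, value in records:
        # Watermark derived from the records that arrived before this one.
        if max_ts is None:
            watermark = float("-inf")
        else:
            watermark = max_ts - out_of_orderness_ms - 1
        start = ts - ts % WINDOW_MS
        last_ts_in_window = start + WINDOW_MS - 1
        if last_ts_in_window + allowed_lateness_ms <= watermark:
            dropped.append((sensor_id, ts, value))  # window already gone
        else:
            sums[(sensor_id, start)] += value
        max_ts = ts if max_ts is None else max(max_ts, ts)
    return dict(sums), dropped


# Hypothetical arrivals: each sensor is ordered by its own timestamps,
# but sensor B lags behind sensor A within the same partition.
arrivals = [
    ("A", 5 * MIN, 1), ("A", 20 * MIN, 1), ("A", 35 * MIN, 1),
    ("B", 10 * MIN, 1), ("B", 25 * MIN, 1), ("B", 40 * MIN, 1),
]

strict_sums, strict_dropped = simulate(arrivals)
relaxed_sums, relaxed_dropped = simulate(arrivals, out_of_orderness_ms=30 * MIN)
late_sums, late_dropped = simulate(arrivals, allowed_lateness_ms=30 * MIN)
```

With no slack, A's record at minute 35 pushes the watermark past the end of the first window, so B's records at minutes 10 and 25 are dropped. Either a watermark that trails by 30 minutes (option 1) or 30 minutes of allowed lateness (option 2) keeps them. In Flink, option 1 delays every window result by the chosen bound, while option 2 emits a result on time and emits updated results as late elements arrive, so downstream consumers need to handle the repeated output.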
