Regarding json/xml/csv file splitting


Hi,

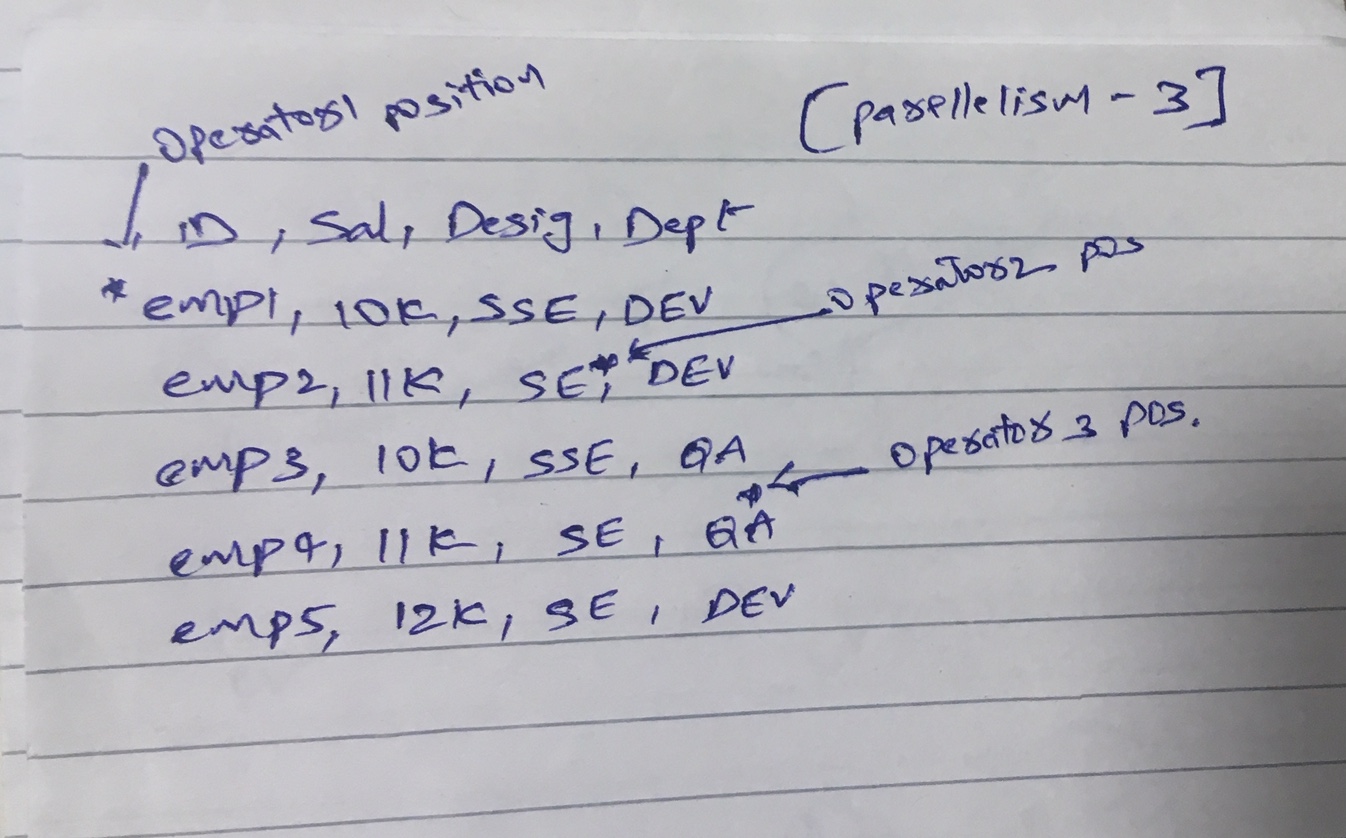

Can someone please tell me how to split a JSON/XML data file? Since these formats are structured (i.e., they have a parent/child hierarchy), is it possible to split such a file and process it in parallel with two or more instances of the source operator?

Also, please confirm whether my understanding of CSV splitting, described below, is correct. When parallelism is greater than 1, the file is split into roughly equal parts, and each operator instance gets the start position of its own file partition. A partition's start position may fall in the middle of a delimited line, as shown in the image. When reading starts, each operator instance ignores the initial partial record and reads the full records that come after it. That is:

1. Operator1 reads the emp1 and emp2 records (it reads emp2 because that record's first character falls within its reading range).
2. Operator2 ignores the partial emp2 record and reads emp3 and emp4.
3. Operator3 ignores the partial emp4 record and reads emp5.

The record delimiter is used to skip the partial record and to identify the start of the next one.

Thank you,
Madan.
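The CSV behaviour described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Flink's actual reader: it assumes `\n` as the record delimiter, and the names `compute_splits` and `read_split` are hypothetical. Each split owns exactly the records whose first byte falls in `[start, end)`. A reader whose split does not begin at offset 0 backs up one byte and discards everything through the next delimiter, which skips the partial record (or, if the split happens to start exactly on a record boundary, consumes only the preceding delimiter).

```python
import io
from typing import BinaryIO, Iterator, List, Tuple


def compute_splits(file_size: int, parallelism: int) -> List[Tuple[int, int]]:
    """Divide [0, file_size) into `parallelism` roughly equal byte ranges."""
    bounds = [i * file_size // parallelism for i in range(parallelism + 1)]
    return list(zip(bounds[:-1], bounds[1:]))


def read_split(f: BinaryIO, start: int, end: int) -> Iterator[bytes]:
    """Yield each newline-delimited record whose first byte lies in [start, end)."""
    if start > 0:
        # Back up one byte and discard through the next delimiter. If the byte
        # before `start` is a newline, only that byte is consumed; otherwise this
        # skips the partial record, which belongs to the previous split.
        f.seek(start - 1)
        f.readline()
    else:
        f.seek(0)
    # A record that starts before `end` is read in full, even if it runs past it.
    while f.tell() < end:
        line = f.readline()
        if not line:  # end of file
            break
        yield line.rstrip(b"\n")


data = b"emp1,a\nemp2,b\nemp3,c\nemp4,d\nemp5,e\n"
for start, end in compute_splits(len(data), 3):
    # Each parallel instance would open its own file handle; BytesIO stands in here.
    print(start, end, list(read_split(io.BytesIO(data), start, end)))
```

With three splits this reproduces the example: the first reader gets emp1 and emp2, the second emp3 and emp4, and the third emp5. Because every record is owned by exactly one split (the one containing its first byte), no record is lost or read twice.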

Re: Regarding json/xml/csv file splitting


Normally, parallel processing of text input files is handled via Hadoop's TextInputFormat, which supports splitting files on line boundaries at (roughly) HDFS block boundaries.
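As a rough illustration of how the byte ranges themselves are chosen, here is a Python sketch modelled on my understanding of Hadoop's `FileInputFormat` split computation: the split size is `max(minSize, min(maxSize, blockSize))`, and splits are cut while the remaining bytes exceed 1.1 times the split size (the `SPLIT_SLOP` factor), so a small tail is merged into the last split instead of becoming a tiny one. It ignores host locality and compressed files, and the function names are hypothetical. A line reader then resynchronises on line boundaries within each range.

```python
from typing import List, Tuple

SPLIT_SLOP = 1.1  # allow the last split to be up to 10% larger than split_size


def compute_split_size(block_size: int, min_size: int, max_size: int) -> int:
    return max(min_size, min(max_size, block_size))


def block_splits(file_length: int, block_size: int,
                 min_size: int = 1, max_size: int = 2**63 - 1) -> List[Tuple[int, int]]:
    """Return (start, end) byte ranges covering [0, file_length)."""
    split_size = compute_split_size(block_size, min_size, max_size)
    splits = []
    remaining = file_length
    while remaining / split_size > SPLIT_SLOP:
        start = file_length - remaining
        splits.append((start, start + split_size))
        remaining -= split_size
    if remaining > 0:
        # Whatever is left (at most SPLIT_SLOP * split_size) becomes the final split.
        start = file_length - remaining
        splits.append((start, file_length))
    return splits


MB = 1024 * 1024
print(block_splits(300 * MB, 128 * MB))  # three splits: 128 MB, 128 MB, 44 MB
print(block_splits(140 * MB, 128 * MB))  # one 140 MB split, since 140/128 < 1.1
```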

There are various XML Hadoop InputFormats available, which try to sync up with splittable locations. The one I’ve used in the past is part of the Mahout project.

If each JSON record is on its own line, then you can just use a regular source and parse it in a subsequent map function. Otherwise, you can still create a custom input format, as long as there’s some unique JSON that identifies the beginning/end of each record.

And failing that, you can always build a list of file paths as your input, and then in your map function explicitly open/read each file and process it as you would any JSON file. In a past project where we had a similar requirement, the only interesting challenge was building N lists of files (for N mappers) where the sum of file sizes was roughly equal for each parallel map parser, as there was significant skew in the file sizes.

-- Ken
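Ken's suggestion of a custom input format that syncs on a unique record marker could look roughly like the following Python sketch. It is not Mahout's implementation; the function name and the `<emp>`/`</emp>` markers are hypothetical, and for brevity it searches an in-memory byte string rather than streaming from a file. As with line-based splitting, a split owns every record whose start marker begins in `[start, end)` and reads that record through its end marker even if it runs past `end`. This only works if the start marker cannot occur inside a record (no nesting of the same element, no marker text inside values), which is the "unique" requirement Ken mentions.

```python
from typing import List


def read_marked_records(data: bytes, start: int, end: int,
                        start_marker: bytes, end_marker: bytes) -> List[bytes]:
    """Return the records whose start marker begins in [start, end)."""
    records = []
    # A marker straddling `start` began in the previous split, so searching
    # from `start` correctly skips it.
    pos = data.find(start_marker, start)
    while pos != -1 and pos < end:
        close = data.find(end_marker, pos + len(start_marker))
        if close == -1:
            break  # truncated final record: nothing more to emit
        stop = close + len(end_marker)
        records.append(data[pos:stop])
        pos = data.find(start_marker, stop)
    return records


doc = b"<root><emp>1</emp><emp>2</emp><emp>3</emp></root>"
half = len(doc) // 2
print(read_marked_records(doc, 0, half, b"<emp>", b"</emp>"))
print(read_marked_records(doc, half, len(doc), b"<emp>", b"</emp>"))
```

The same idea applies to JSON if each top-level record starts with some distinctive text that never appears elsewhere.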
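Building N lists of files with roughly equal total size is a balancing problem. Ken doesn't say how his project solved it; one common greedy heuristic (take files largest first, and always add the next file to the currently lightest list) is sketched below in Python. The function name and example sizes are hypothetical, and the heuristic is not guaranteed to find the optimal split. Each resulting list could then be handed to one parallel map instance, which opens and parses its files.

```python
import heapq
from typing import Dict, List


def balance_files(file_sizes: Dict[str, int], n: int) -> List[List[str]]:
    """Greedily assign files to n lists so that total sizes are roughly equal."""
    heap = [(0, i) for i in range(n)]  # (bytes assigned so far, list index)
    heapq.heapify(heap)
    lists: List[List[str]] = [[] for _ in range(n)]
    # Placing the largest files first keeps the final imbalance small.
    for path, size in sorted(file_sizes.items(), key=lambda kv: kv[1], reverse=True):
        total, i = heapq.heappop(heap)  # currently lightest list
        lists[i].append(path)
        heapq.heappush(heap, (total + size, i))
    return lists


sizes = {"a.json": 90, "b.json": 60, "c.json": 50, "d.json": 40, "e.json": 10}
print(balance_files(sizes, 2))
```

Here the two lists come out as `a.json, d.json` (130 bytes) and `b.json, c.json, e.json` (120 bytes).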

--------------------------
Ken Krugler
+1 530-210-6378
http://www.scaleunlimited.com
Custom big data solutions & training
Flink, Solr, Hadoop, Cascading & Cassandra


Return to (DEPRECATED) Apache Flink User Mailing List archive.


