Performance issue when writing to HDFS


Hi all,

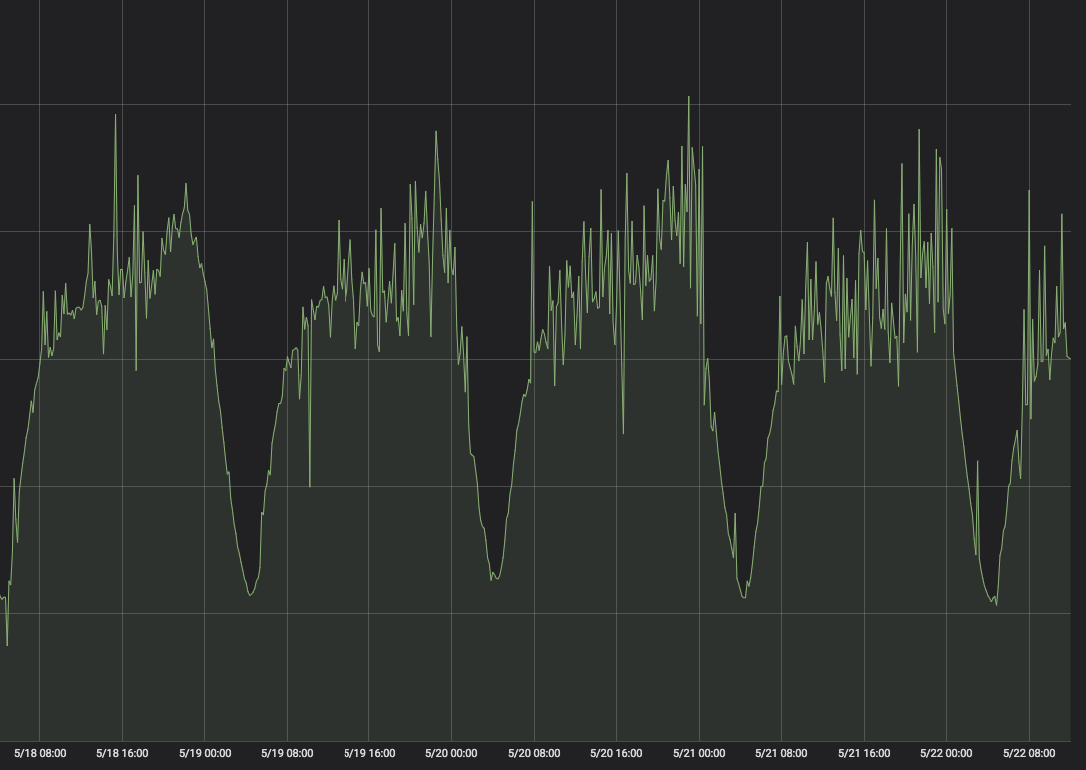

I have a Flink application that consumes from Kafka and writes the data to HDFS, bucketed by event time, using BucketingSink. Sometimes the traffic gets high, and the Prometheus metrics (flink_taskmanager_job_task_operator_numRecordsOutPerSecond) show that the write rate is not stable.

The output data on HDFS is also delayed: the records for a given hour bucket are written to HDFS 50 minutes after that hour.

I looked into the logs and found warnings regarding the datanode ack, which might be related.

DFSClient exception:

2020-05-21 10:43:10,432 INFO org.apache.hadoop.hdfs.DFSClient - Exception in createBlockOutputStream

Slow ReadProcessor read fields warning:

2020-05-21 10:42:30,509 WARN org.apache.hadoop.hdfs.DFSClient - Slow ReadProcessor read fields took 30230ms (threshold=30000ms); ack: seqno: 126 reply: SUCCESS reply: SUCCESS reply: SUCCESS downstreamAckTimeNanos: 372753456 flag: 0 flag: 0 flag: 0, targets: [DatanodeInfoWithStorage[<IP address here>:1004,DS-833b175e-9848-453d-a222-abf5c05d643e,DISK], DatanodeInfoWithStorage[<IP address here>:1004,DS-f998208a-df7b-4c63-9dde-26453ba69559,DISK], DatanodeInfoWithStorage[<IP address here>:1004,DS-4baa6ba6-3951-46f7-a843-62a13e3a62f7,DISK]]

We haven't done any tuning of the Flink job with regard to writing to HDFS. Is there any config or optimization we can try to avoid the delay and these warnings?

Thanks in advance!!

Best regards,
Mu


Hi Kong,

Sorry, I'm not an expert on Hadoop, but from the logs and a Google search, this seems more likely to be a problem on the HDFS side [1], such as long GC pauses in the DataNode. I also found a similar issue in the mailing list history [2], and the conclusion there appears to be similar.

Best,
Yun


Hi Gao,

Thanks for helping out. It turned out to be a networking issue on our HDFS datanode, but I was hoping to find a way to lessen the impact of such issues. One thing I considered was shortening the time a file waits before it is closed when there is no incoming data, so that there are fewer open files on HDFS. I'm not sure whether that would work, though.

Regards,
Mu

(In reply to Yun Gao's message of Fri, May 22, 2020 at 3:06 PM.)
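For reference, BucketingSink exposes two setters that govern this behavior, `setInactiveBucketThreshold(long)` and `setInactiveBucketCheckInterval(long)`, both in milliseconds. The following is only a toy model of the idea in plain Java, not Flink code: the class `InactiveBucketCloser` and its methods are hypothetical names made up for illustration, and the real sink's exact timing rules may differ. It shows how a shorter inactivity threshold reduces the number of buckets that stay open between periodic checks.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy model of inactivity-based bucket closing (hypothetical, not Flink code). */
final class InactiveBucketCloser {
    private final long inactiveThresholdMs;
    // Bucket path -> time of the last write; TreeMap keeps iteration order deterministic.
    private final Map<String, Long> lastWriteMs = new TreeMap<>();

    InactiveBucketCloser(long inactiveThresholdMs) {
        this.inactiveThresholdMs = inactiveThresholdMs;
    }

    /** Record a write to a bucket, opening the bucket if it is not open yet. */
    void write(String bucket, long nowMs) {
        lastWriteMs.put(bucket, nowMs);
    }

    /** Periodic check: close every bucket that has been idle longer than the threshold. */
    List<String> closeInactive(long nowMs) {
        List<String> closed = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastWriteMs.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (nowMs - entry.getValue() > inactiveThresholdMs) {
                closed.add(entry.getKey());
                it.remove();
            }
        }
        return closed;
    }

    /** Number of buckets that are still open. */
    int openCount() {
        return lastWriteMs.size();
    }
}
```

With a 60-second threshold, a bucket last written at time 0 is closed by a check at 70 seconds, while one written at 50 seconds stays open. Lowering the threshold would close idle hour buckets sooner and leave fewer open files, but it would not by itself remove the slow datanode acks caused by the network problem.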
