How do I see the output messages when I run a flink job on my local cluster?

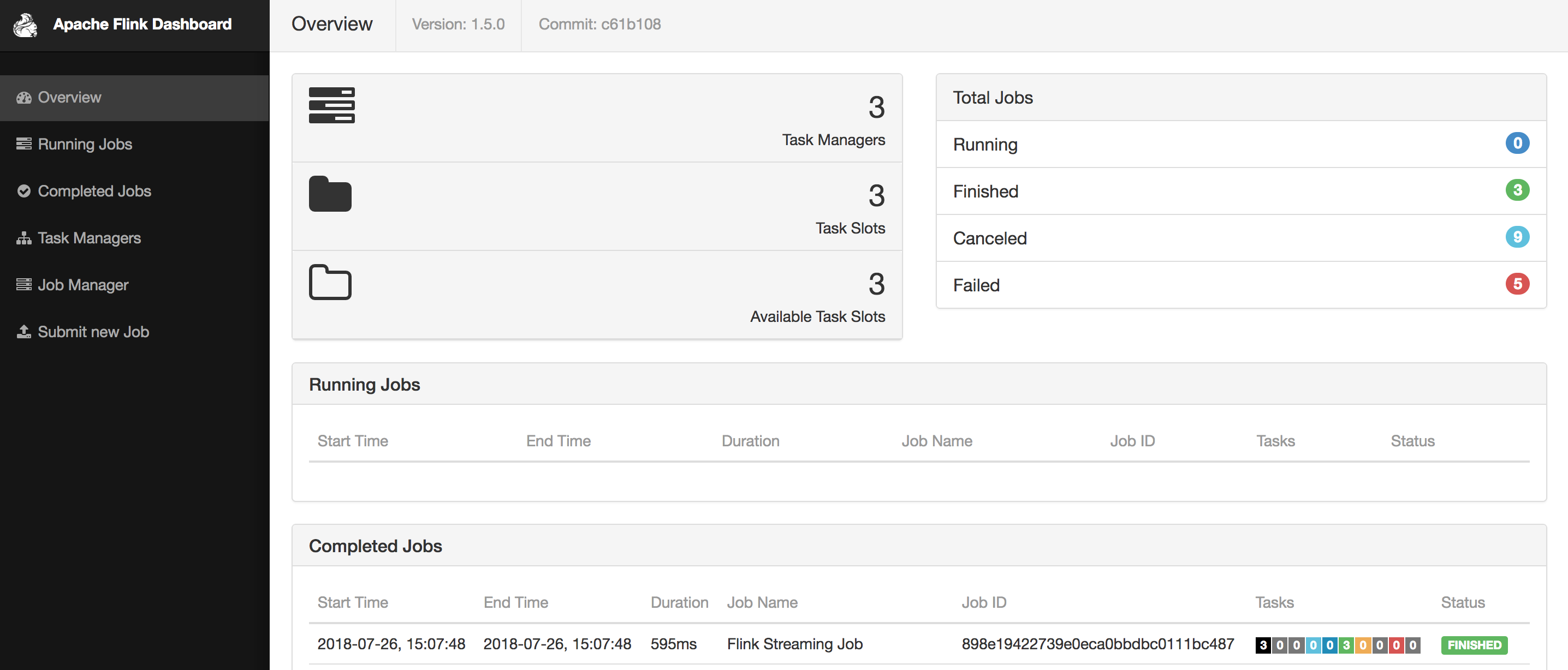

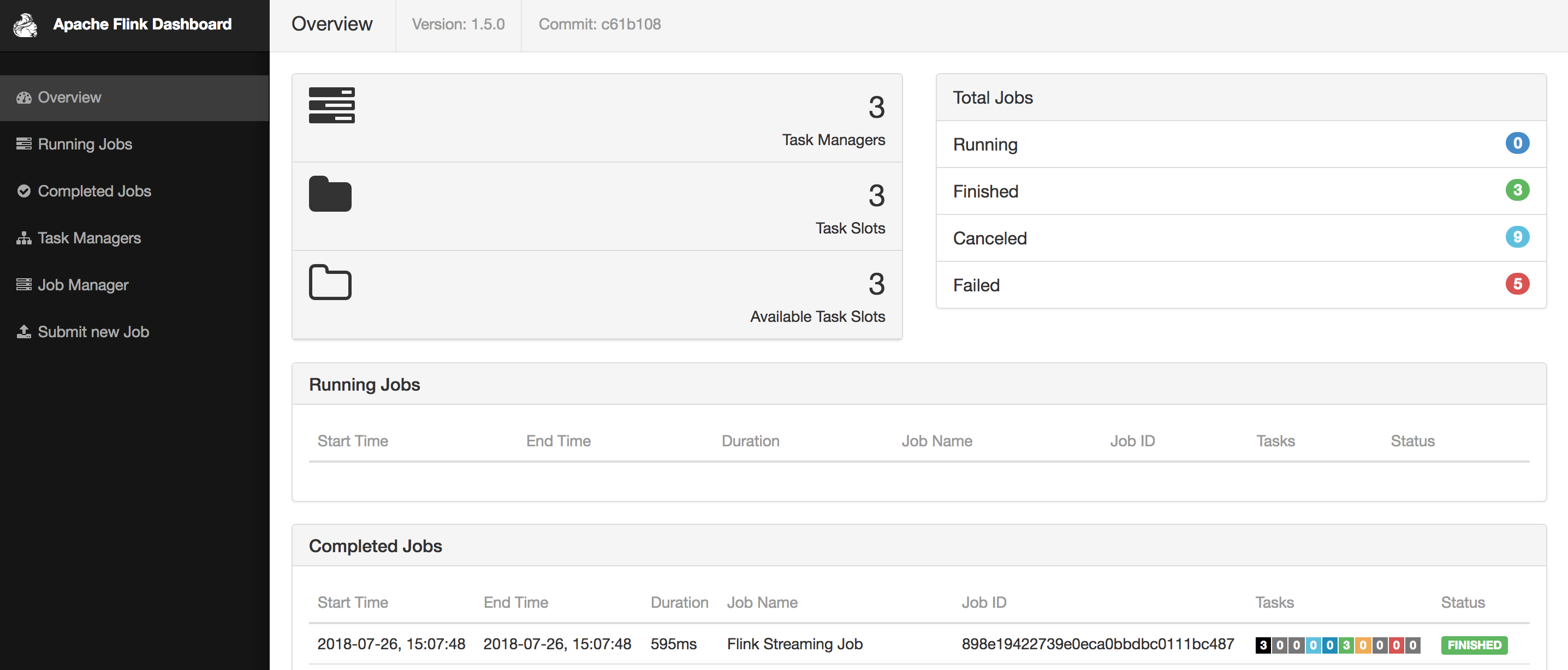

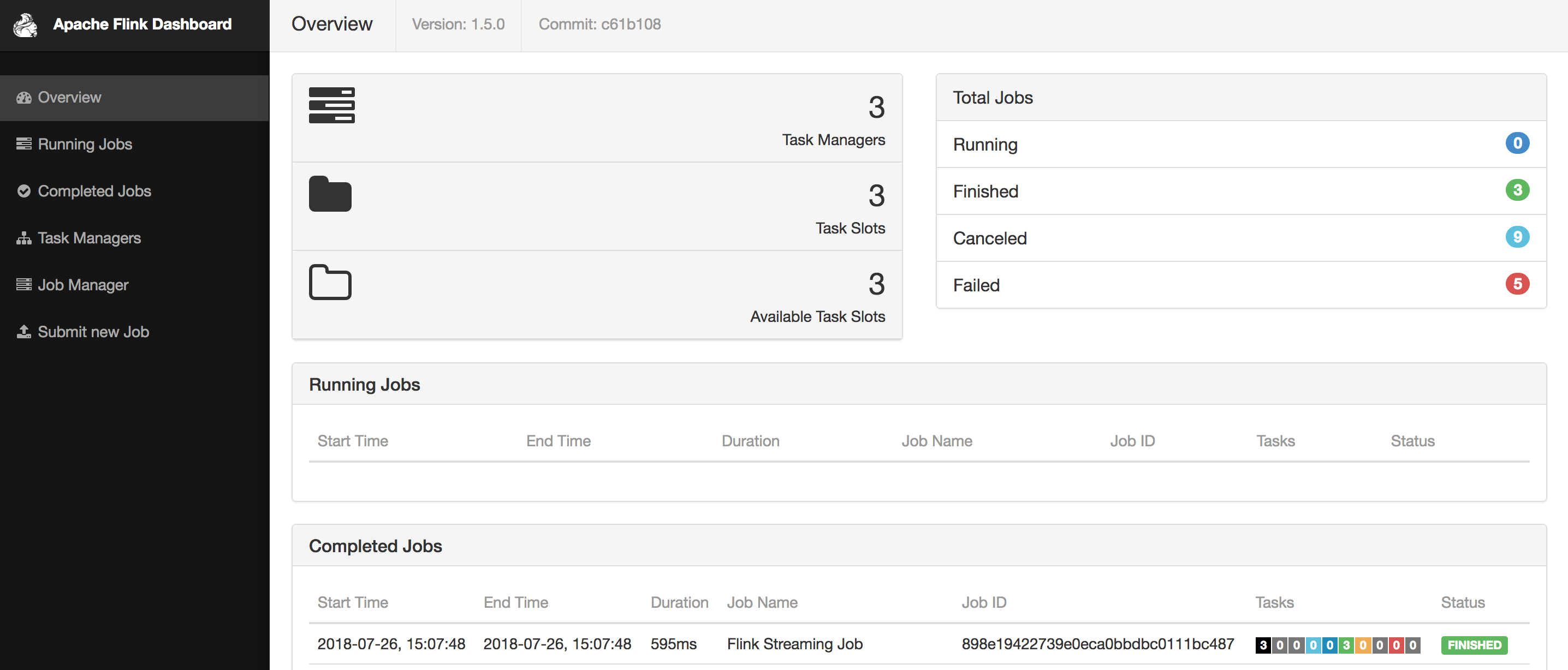

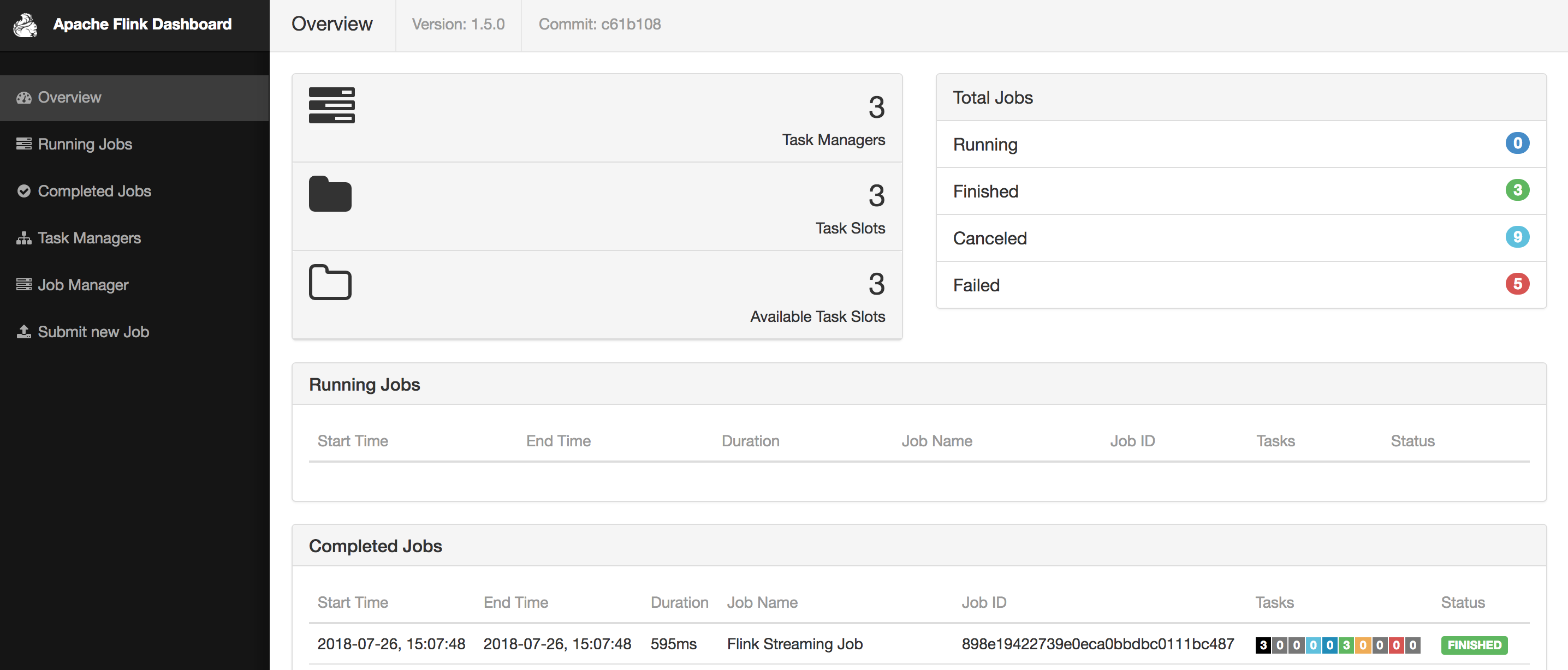

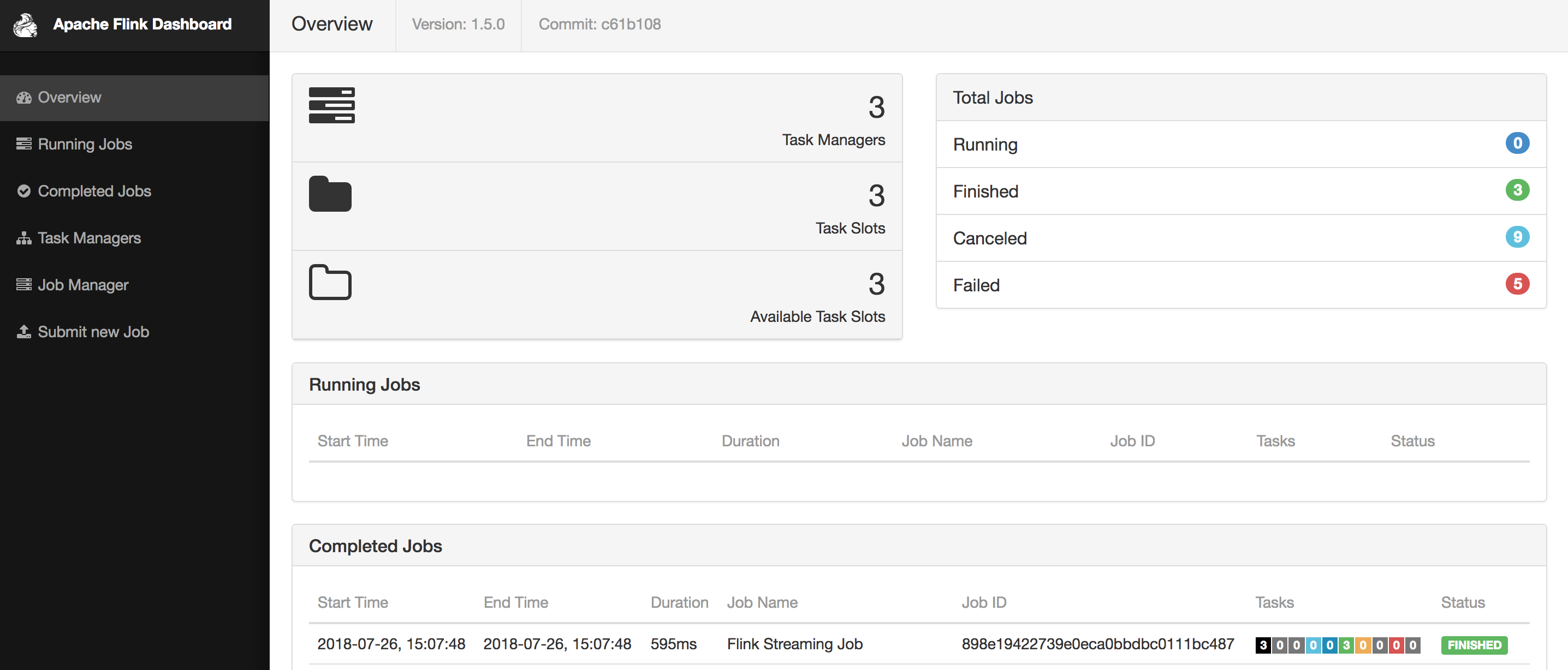

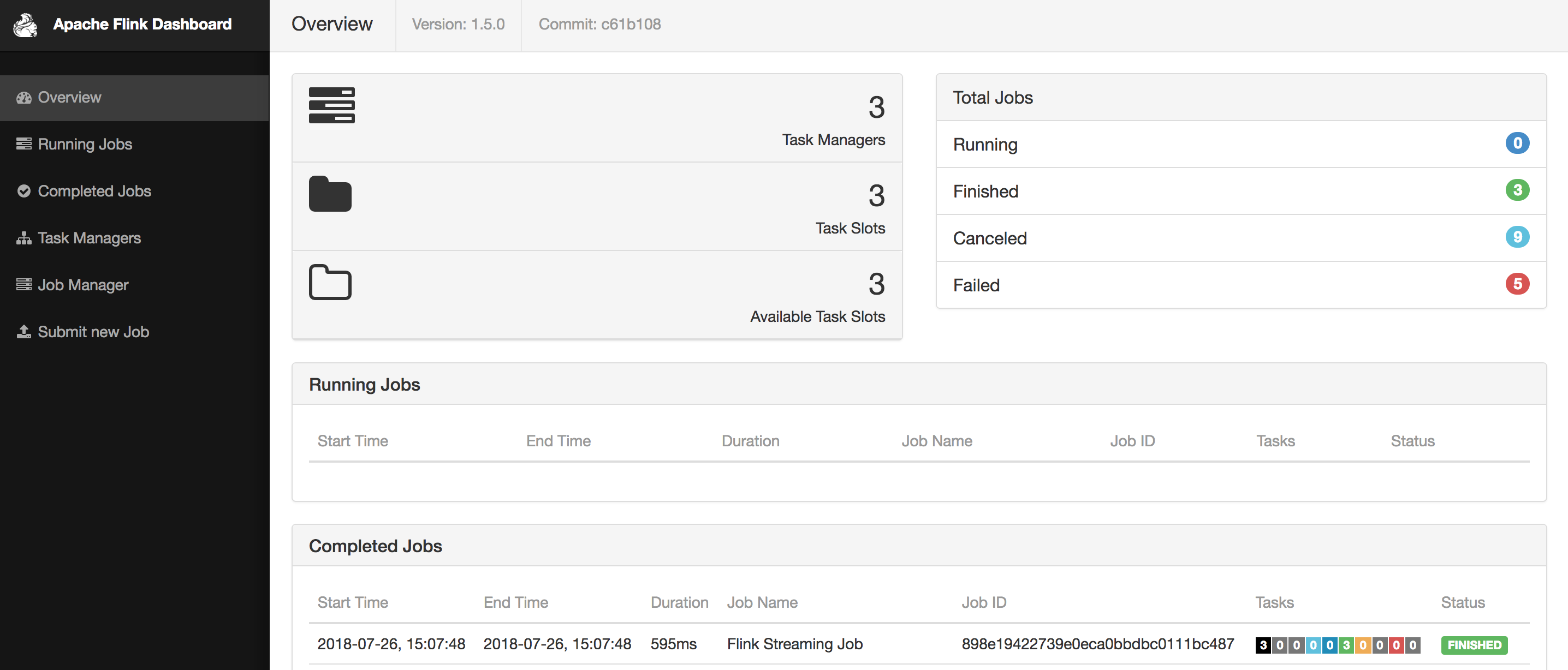

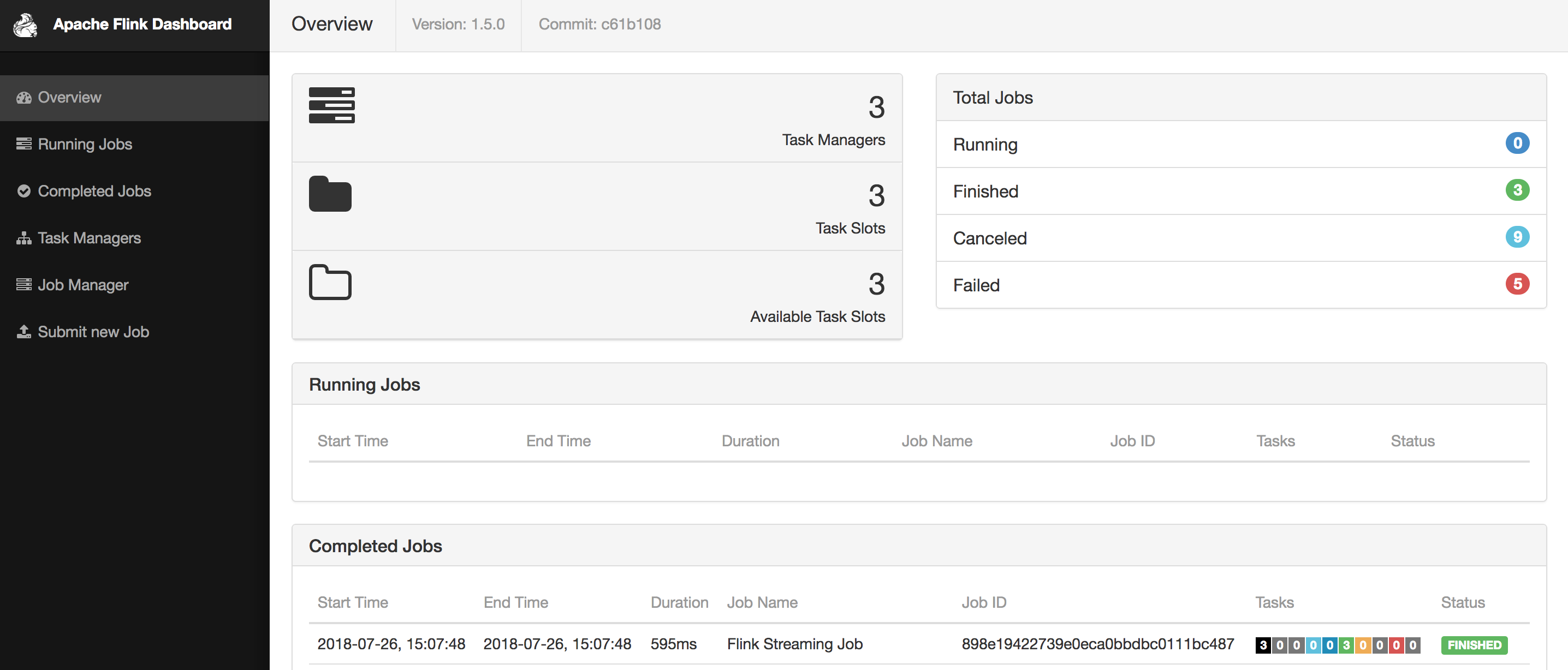

When I run the job from IntelliJ, I see the output in standard output. This is what I see on my local cluster:

Hi anna, did you check the CLI output or the client log file? Thanks, vino. 2018-07-27 6:25 GMT+08:00 anna stax <[hidden email]>:

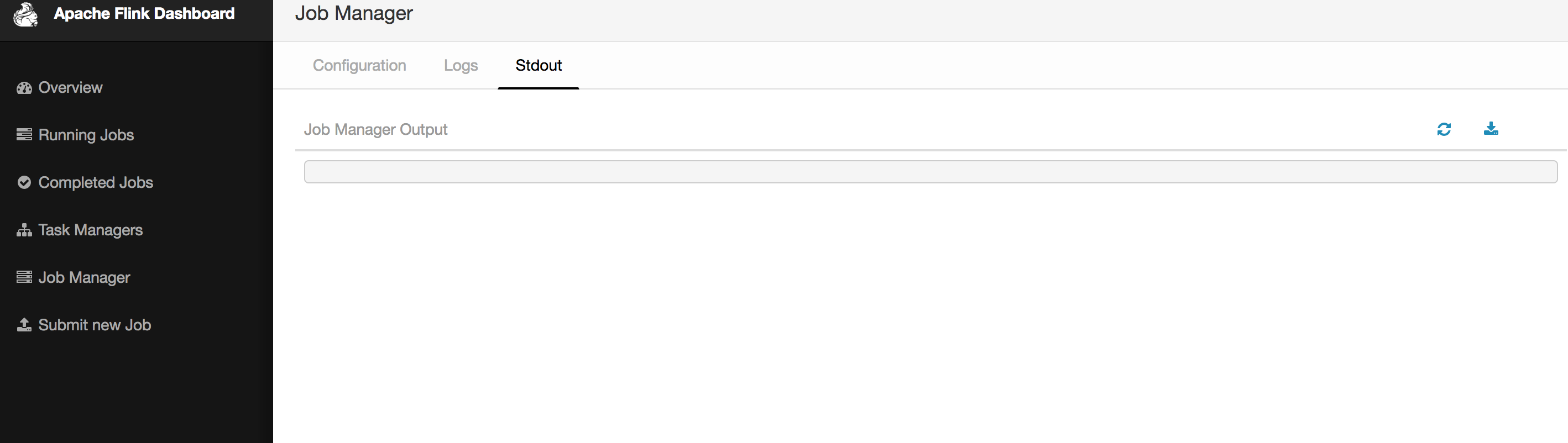

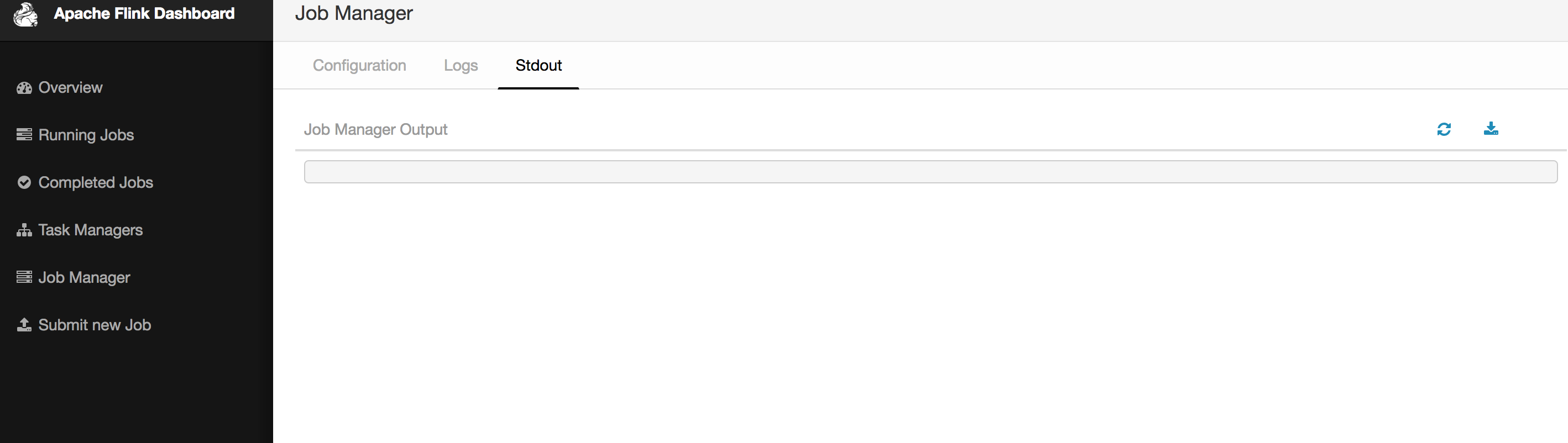

If you have a job that prints to stdout, you have to look at the TaskManager's .out file (also available in the UI).
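A minimal sketch of why the output seems to "disappear", assuming the Flink 1.x DataStream API (`flink-streaming-java` on the classpath); the class names `StdoutJob` and `Shout` and the upper-casing step are hypothetical. Both a `System.out.println` inside a function and the `print()` sink run inside the TaskManager. In the IDE, `getExecutionEnvironment()` starts a local environment in the IDE's own JVM, so both appear in the console; on a standalone cluster they land in the TaskManager's `.out` file instead.

```java
import java.util.Locale;

import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

public class StdoutJob {

    // Hypothetical example function. It executes inside the TaskManager:
    // in the IDE that is the IDE's JVM, on a standalone cluster it is the
    // TaskManager process, whose stdout is redirected to its .out file.
    public static class Shout implements MapFunction<String, String> {
        @Override
        public String map(String value) {
            String upper = value.toUpperCase(Locale.ROOT);
            System.out.println("Shout: " + upper); // TaskManager stdout, not the client console
            return upper;
        }
    }

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.fromElements("hello", "flink")
           .map(new Shout())
           .print(); // the print() sink also writes to the TaskManager's stdout
        env.execute("stdout-example");
    }
}
```

When this is submitted with `bin/flink run`, the client console does not show the `Shout:` lines or the `print()` output; look for them in the TaskManager's `.out` file (or its stdout view in the web UI).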

On 27.07.2018 13:41, vino yang wrote:

Thanks Vino and Chesnay. I see the log files being created, but I don't see the output. I am now writing to a Kafka sink, and that is not working either. It works from the IntelliJ IDE but not on my local cluster. How should I configure it to run on a local cluster, on a standalone cluster, and on YARN? Thanks. On Fri, Jul 27, 2018 at 5:26 AM, Chesnay Schepler <[hidden email]> wrote:

Hi anna, did you see any exception thrown by the Kafka sink? To test the output, I suggest:

1) Write a copy of the data you want to sink to Kafka to another sink as well (such as STDOUT), so that your data goes to two sinks. If you cannot see the data in the TaskManager's .out file, the problem may be in an upstream operator.
2) If the operator before the Kafka sink is implemented with a UDF, you can log the data there.
3) Share more details with us, such as the job program, the Flink version, and the JM and TM logs, to help the analysis.

Thanks, vino. 2018-07-28 1:29 GMT+08:00 anna stax <[hidden email]>:
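Suggestions 1) and 2) can be sketched as follows, assuming the Flink 1.x DataStream API and SLF4J (which Flink ships with). `DebugSinks`, `LogAndForward`, and `attachDebugSinks` are hypothetical names, and `realSink` stands in for whatever Kafka producer the job already uses; this sketch does not construct one. The identity map logs each record to the TaskManager's `.log` file, and the extra `print()` sink copies every record to its `.out` file. An empty `.out` file therefore points at the operators upstream of the sinks rather than at the Kafka sink itself.

```java
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.functions.sink.SinkFunction;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class DebugSinks {

    // Hypothetical identity map that logs every record and passes it through unchanged.
    // SLF4J output goes to the TaskManager's .log file, not the .out file.
    public static class LogAndForward implements MapFunction<String, String> {
        // static, so the non-serializable Logger is not shipped with the function
        private static final Logger LOG = LoggerFactory.getLogger(LogAndForward.class);
        private final String tag;

        public LogAndForward(String tag) {
            this.tag = tag;
        }

        @Override
        public String map(String value) {
            LOG.info("[{}] record: {}", tag, value);
            return value;
        }
    }

    // Hypothetical helper: sends the same stream to the real sink (e.g. an existing
    // Kafka producer) and to stdout, after logging each record.
    public static void attachDebugSinks(DataStream<String> stream, SinkFunction<String> realSink) {
        DataStream<String> logged = stream.map(new LogAndForward("before-sink"));
        logged.addSink(realSink).name("real-sink");
        logged.print(); // copy of every record to the TaskManager's stdout (.out file)
    }
}
```

On newer Flink versions where the Kafka connector is a `KafkaSink` attached with `sinkTo(...)` rather than a `SinkFunction`, the same idea applies: keep the extra `print()` sink next to the real one.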

Thanks vino. It is working now. I have no idea what was wrong or why it works now. I see the data in the Kafka sink as well as in the TaskExecutor's .out file. All I did was delete the data in the Kafka topics so I could do a clean test. I'm not sure how that fixed my problem. Thanks. On Fri, Jul 27, 2018 at 7:02 PM, vino yang <[hidden email]> wrote:

Hi anna, Glad to hear this. Thanks, vino. 2018-07-30 12:49 GMT+08:00 anna stax <[hidden email]>:

Return to (DEPRECATED) Apache Flink User Mailing List archive.
