Handling back pressure in Flink.


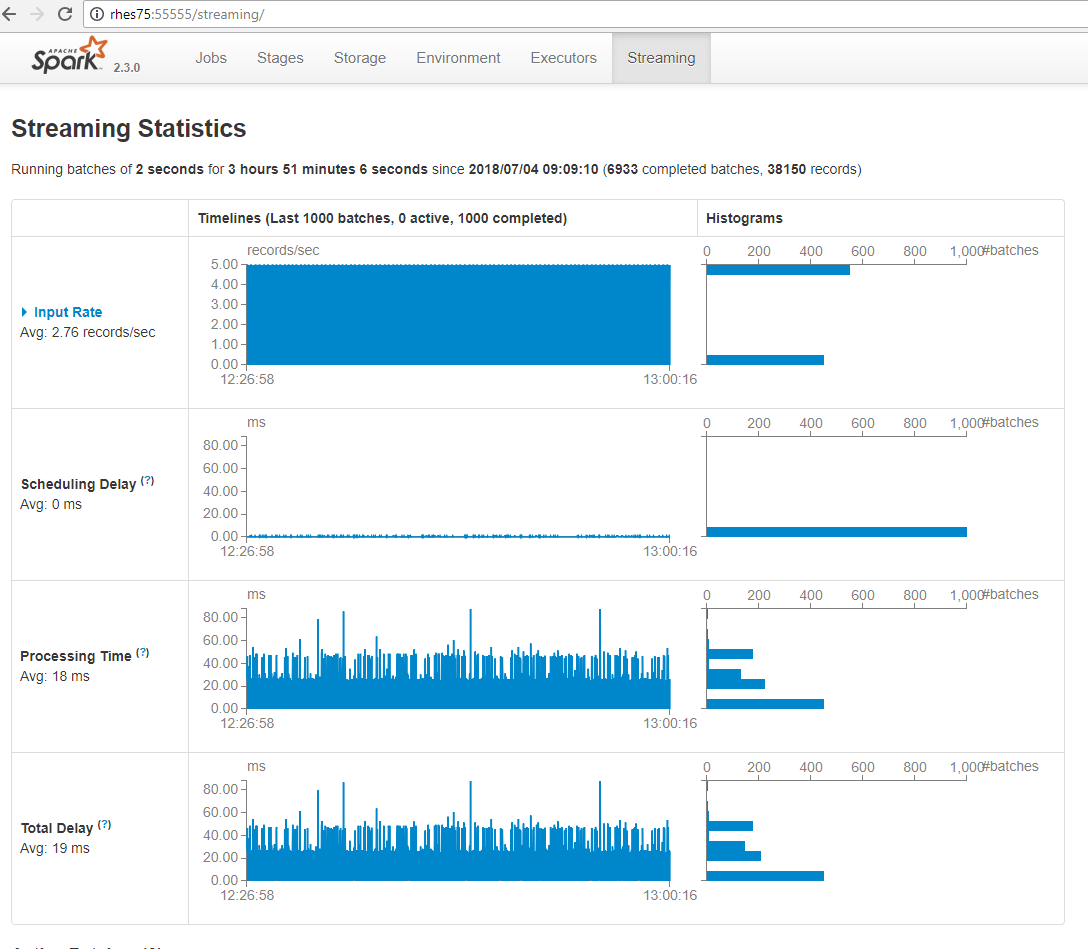

Hi,

In Spark one can handle back pressure by setting the Spark conf parameter:

sparkConf.set("spark.streaming.backpressure.enabled","true")

With back pressure enabled, a Spark Streaming application stays stable, i.e. it receives data only as fast as it can process it. In general one needs to ensure that the micro-batch processing time is less than the batch interval, i.e. the rate at which your producer sends data into Kafka. For example, this is shown in the Spark GUI below for batch interval = 2 seconds.

Is there such a procedure in Flink, please?

Thanks,

Dr Mich Talebzadeh

LinkedIn https://www.linkedin.com/profile/view?id=AAEAAAAWh2gBxianrbJd6zP6AcPCCdOABUrV8Pw

http://talebzadehmich.wordpress.com Disclaimer: Use it at your own risk. Any and all responsibility for any loss, damage or destruction of data or any other property which may arise from relying on this email's technical content is explicitly disclaimed. The author will in no case be liable for any monetary damages arising from such loss, damage or destruction.
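
To illustrate the stability condition described in the question (micro-batch processing time below the batch interval), here is a minimal sketch in plain Python. It does not use Spark at all; the function name `scheduling_delays` and the numbers are made up for illustration, and the model assumes batches are processed strictly one after another.

```python
def scheduling_delays(batch_interval, processing_time, num_batches):
    """Return the scheduling delay (seconds) seen by each successive micro-batch.

    Toy model only: batch i becomes ready at (i + 1) * batch_interval and
    batches are processed one at a time, in order.
    """
    delays = []
    free_at = 0.0  # time at which the previous batch finishes processing
    for i in range(num_batches):
        ready_at = (i + 1) * batch_interval
        start = max(ready_at, free_at)  # wait if the previous batch is still running
        delays.append(start - ready_at)
        free_at = start + processing_time
    return delays


# Batch interval of 2 seconds, as in the question.
stable = scheduling_delays(2.0, 1.5, 5)    # processing time below the interval
unstable = scheduling_delays(2.0, 3.0, 5)  # processing time above the interval
print(stable)
print(unstable)
```

In this toy model the delay stays at zero when processing is faster than the interval, and grows by one second per batch when each batch takes 3 seconds to process against a 2 second interval.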


Re: Handling back pressure in Flink.


Hi Mich,

From flink-1.5.0 the network flow control is improved by a credit-based mechanism which handles back pressure better than before. The producer sends data based on the number of available buffers (credits) on the consumer side. If processing on the consumer side is slower than production on the producer side, the data will be cached in the output queue on the producer side and the input queue on the consumer side; once these fill up, back pressure is triggered on the producer side.

You can increase the number of credits on the consumer side to relieve back pressure. Even when back pressure happens, the application is still stable (it will not cause OOM), so I think you should not worry about that. Normally it is better to consider the TPS of both sides and set a proper parallelism to avoid back pressure to some extent.

Zhijiang
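
The credit idea in the reply above can be sketched with a small toy model in plain Python. This is not Flink code and not how Flink implements it internally; `simulate` and all the numbers are hypothetical. The only assumption taken from the reply is that the producer may send a record only when it holds a credit, and that the consumer hands a credit back when it frees a buffer. In Flink itself the number of credits is, as far as I know, governed by the network buffer settings `taskmanager.network.memory.buffers-per-channel` and `taskmanager.network.memory.floating-buffers-per-gate`; please check the configuration documentation for your version.

```python
def simulate(credits, produce_per_tick, consume_per_tick, ticks):
    """Toy credit-based flow control.

    Returns (largest consumer-side queue observed, final producer-side backlog).
    """
    receiver_queue = 0    # records buffered on the consumer side
    sender_backlog = 0    # records held back on the producer side
    available = credits   # credits the producer currently holds
    max_queue = 0
    for _ in range(ticks):
        sender_backlog += produce_per_tick
        # The producer sends at most one record per credit it holds.
        sent = min(sender_backlog, available)
        sender_backlog -= sent
        available -= sent
        receiver_queue += sent
        max_queue = max(max_queue, receiver_queue)
        # The consumer processes records and returns one credit per freed buffer.
        done = min(consume_per_tick, receiver_queue)
        receiver_queue -= done
        available += done
    return max_queue, sender_backlog


# Producer is three times faster than the consumer.
few_credits = simulate(credits=4, produce_per_tick=3, consume_per_tick=1, ticks=10)
more_credits = simulate(credits=8, produce_per_tick=3, consume_per_tick=1, ticks=10)
print(few_credits)
print(more_credits)
```

In this model the consumer-side queue never exceeds the number of credits, which is the sense in which back pressure does not lead to OOM. More credits absorb a longer burst, but with a consumer that is permanently slower the producer-side backlog still grows, which is where the advice about matching TPS and parallelism comes in.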
