Flink behavior as a slow consumer - out of Heap MEM


Hi, I am trying to run some performance tests on my Flink deployment. I am implementing an extremely simple use case: I built a ZMQ source, and the topology is ZMQ Source -> Mapper -> DiscardingSink (a sink that does nothing). Data is pushed via ZMQ at a very high rate. When the incoming rate from ZMQ is higher than the rate Flink can keep up with, I can see the JVM heap filling up (using Flink's metrics). I was expecting Flink to handle this type of backpressure gracefully and not

Note: the mapper has no state to persist.

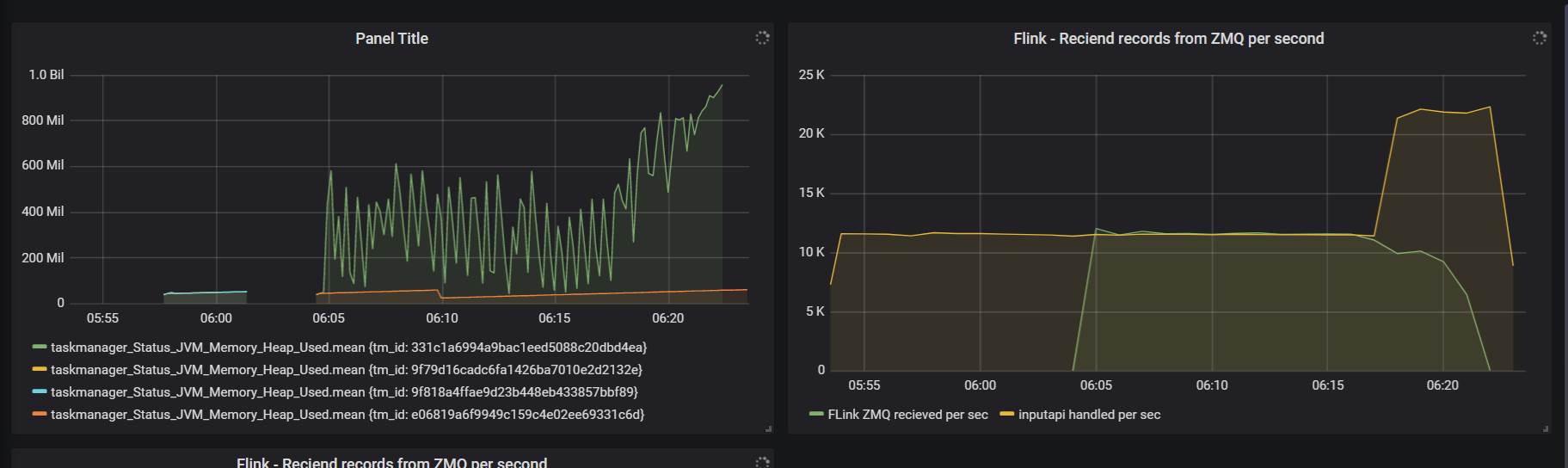

In the Grafana charts, the left panel shows the TM heap used.

The right panel shows the rate of the ZMQ sender outside of Flink (yellow) vs. the Flink consuming rate as reported by the ZMQ source. 1 GB is the configured heap size.


Hi Hanan, The behavior sometimes depends on your implementation. Since this is not a built-in connector, it would be better to share your custom source with the community so that we can help you figure out where the problem is. WDYT? Best, Vino

Hanan Yehudai <[hidden email]> wrote on Tue, Nov 26, 2019 at 12:27 PM:


Hi Hanan, Flink does handle backpressure gracefully. I guess your custom ZMQ source is receiving events in a separate thread? In a Flink source, the SourceContext.collect() method will not return while the downstream operators are unable to process incoming data fast enough. If my assumptions are right, I would suggest pulling data from ZMQ in small batches, forwarding them to collect(), and pausing the fetch while collect() is blocked. On Tue, Nov 26, 2019 at 6:59 AM vino yang <[hidden email]> wrote:
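
The suggestion above (pull a small batch, hand each element to collect(), and fetch nothing while collect() is blocked) could look roughly like the sketch below. It is plain Java with no Flink or ZMQ dependency, so every name in it is a hypothetical stand-in: `poll` plays the role of a non-blocking ZMQ receive that returns null when nothing is waiting, `collect` plays the role of SourceContext.collect(), and `pumpOnce` and `maxBatch` are invented for illustration. It is not the original poster's source, which was not shared in the thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;
import java.util.function.Supplier;

/** Sketch only: plain-Java stand-ins, not real Flink or ZMQ classes. */
final class BoundedPullSketch {

    /**
     * Pulls at most maxBatch messages, then forwards each one downstream.
     * Because fetching and forwarding happen on the same thread, a blocked
     * collect call also stops the fetch, so at most maxBatch messages are
     * ever buffered here. Returns the number of messages forwarded.
     */
    static <T> int pumpOnce(Supplier<T> poll, Consumer<T> collect, int maxBatch) {
        List<T> batch = new ArrayList<>(maxBatch);
        while (batch.size() < maxBatch) {
            T msg = poll.get();      // assumption: returns null when no message is waiting
            if (msg == null) {
                break;
            }
            batch.add(msg);
        }
        for (T msg : batch) {
            collect.accept(msg);     // stand-in for collect(); may block under backpressure
        }
        return batch.size();
    }

    public static void main(String[] args) {
        // An in-memory queue stands in for the ZMQ socket.
        Queue<String> socket = new ArrayDeque<>();
        for (int i = 0; i < 10; i++) {
            socket.add("msg-" + i);
        }
        List<String> sink = new ArrayList<>();
        int forwarded = pumpOnce(socket::poll, sink::add, 4);
        System.out.println(forwarded + " forwarded, " + socket.size() + " still waiting at the sender");
    }
}
```

In a real source, a loop like this would presumably run inside the source's run() method for as long as the job is running. The point of the design is that no second thread keeps receiving into an unbounded in-heap buffer while the downstream operators are slow.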
