Flink SQL on Yarn For Help


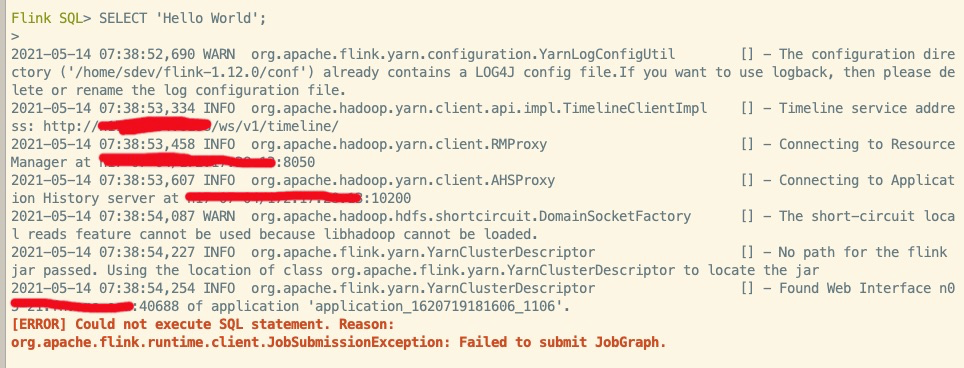

Hi all,

I ran the Flink SQL demo from the official website and got the error shown in the attached screenshot. Could anyone help with this?

Sincerely


Hi Yunhui,

Officially, we don't support YARN in the SQL Client yet, mostly because it has not been tested. However, it could work, because the SQL Client uses the regular Flink submission APIs under the hood.

Are you submitting to a job cluster or a session cluster? It would also help if you could share the complete log from the `/log` directory with us.

Regards,
Timo

On 14.05.21 09:49, Yunhui Han wrote:
> Hi, all
> I ran the flink sql demo from the official web. I got the error below.
> Could anyone help with this?
> Sincerely
> image.png


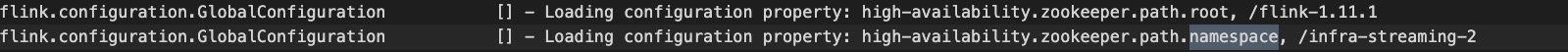

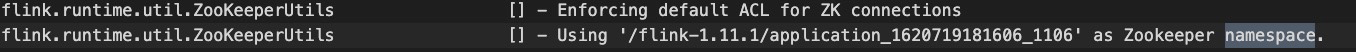

Hi Timo,

Thanks for your response. I submitted to a session cluster, and this is the full log from the /log directory.

I found a problem: the ZooKeeper (zk) namespace of the session cluster is different from the one the SQL Client specifies. The zk namespace of the cluster is shown in image 2 above, and the zk namespace that the SQL Client specifies is shown in image 3. However, I cannot find a way to configure the zk namespace from the SQL Client.

Regards,
Yunhui

2021-05-17 03:45:32,210 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: jobmanager.rpc.address, localhost

On Fri, May 14, 2021 at 9:01 PM Timo Walther <[hidden email]> wrote:
> Hi Yunhui,
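The namespace mismatch described in this message can be checked directly from the client log. The following is a minimal Python sketch and not part of the original thread: it assumes the session cluster was started with the ZooKeeper root and namespace from the loaded configuration, and the helper names (`loaded_properties`, `effective_namespace`, `configured_namespace`) are hypothetical. The sample entries are copied from the log quoted in this thread.

```python
import re

# Sample entries copied from the client log quoted in this thread.
LOG = """\
2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.zookeeper.path.root, /flink-1.11.1
2021-05-17 03:45:32,224 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.zookeeper.path.namespace, /infra-streaming-2
2021-05-17 03:47:40,466 INFO org.apache.flink.runtime.util.ZooKeeperUtils [] - Using '/flink-1.11.1/application_1620719181606_1106' as Zookeeper namespace.
"""

# "Loading configuration property: <key>, <value>"
PROPERTY = re.compile(r"Loading configuration property: (\S+), (.*)$")
# "Using '<path>' as Zookeeper namespace."
NAMESPACE = re.compile(r"Using '([^']+)' as Zookeeper namespace")


def loaded_properties(log_text):
    """Collect the key/value pairs that GlobalConfiguration reported loading."""
    props = {}
    for line in log_text.splitlines():
        match = PROPERTY.search(line)
        if match:
            props[match.group(1)] = match.group(2).strip()
    return props


def effective_namespace(log_text):
    """Return the ZooKeeper namespace the client reported using, or None."""
    match = NAMESPACE.search(log_text)
    return match.group(1) if match else None


def configured_namespace(props):
    """Join the configured ZooKeeper root and namespace into one path.

    Assumption: the session cluster uses exactly these two settings.
    """
    root = props.get("high-availability.zookeeper.path.root", "")
    namespace = props.get("high-availability.zookeeper.path.namespace", "")
    return root.rstrip("/") + "/" + namespace.strip("/")


props = loaded_properties(LOG)
configured = configured_namespace(props)
effective = effective_namespace(LOG)
print("configured:", configured)
print("effective: ", effective)
print("mismatch:  ", configured != effective)
```

With these three sample entries, the configured path is `/flink-1.11.1/infra-streaming-2`, while the client reports `/flink-1.11.1/application_1620719181606_1106`. The latter appears to come from the `high-availability.cluster-id` value in the executor config line of the same log.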


You can check whether a suitable configuration option is listed here:

https://ci.apache.org/projects/flink/flink-docs-release-1.13/docs/deployment/config/

If it is, you can add it to `conf/flink-conf.yaml`. Maybe others have other pointers. Otherwise, you will need to use the Table API instead of the SQL Client.

Regards,
Timo

On 17.05.21 08:38, Yunhui Han wrote:
> Hi Timo,
>
> Thanks for your response. I submitted to a session cluster.
> And this is the full log from /log directory. I found a problem that the
> *zk namespace* of the session cluster is different from which the
> sql-client specified.
> The zk namespace of the cluster is
> image.png
> And the zk_namespace of the sql client specified is
> image.png
> However, I cannot find a way to configure the zk namespace from sql client.
>
> Regards,
> Yunhui
>
> 2021-05-17 03:45:32,210 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: jobmanager.rpc.address, localhost
> 2021-05-17 03:45:32,213 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: jobmanager.rpc.port, 6123
> 2021-05-17 03:45:32,213 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: jobmanager.memory.process.size, 4096m
> 2021-05-17 03:45:32,213 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: taskmanager.memory.process.size, 4096m
> 2021-05-17 03:45:32,213 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: taskmanager.numberOfTaskSlots, 1
> 2021-05-17 03:45:32,213 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: parallelism.default, 1
> 2021-05-17 03:45:32,222 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: state.backend, filesystem
> 2021-05-17 03:45:32,222 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: state.checkpoints.dir,
> hdfs:///flink-1.11.0/checkpoints
> 2021-05-17 03:45:32,222 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: state.savepoints.dir, hdfs:///flink-1.11.0/savepoints
> 2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: jobmanager.execution.failover-strategy, region
> 2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability, zookeeper
> 2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.zookeeper.quorum, *******:2181,*******:2181,*******:2181
> 2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.storageDir, hdfs:///flink-1.11.0/recovery
> 2021-05-17 03:45:32,223 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.zookeeper.path.root, /flink-1.11.1
> 2021-05-17 03:45:32,224 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: high-availability.zookeeper.path.namespace, /infra-streaming-2
> 2021-05-17 03:45:32,224 INFO org.apache.flink.configuration.GlobalConfiguration [] - Loading configuration property: yarn.application-attempts, 10
> 2021-05-17 03:45:32,310 INFO org.apache.flink.yarn.cli.FlinkYarnSessionCli [] - Found Yarn properties file under /tmp/.yarn-properties-sdev.
> 2021-05-17 03:45:32,524 INFO org.apache.flink.table.client.gateway.local.LocalExecutor [] - Using default environment file: file:/home/sdev/flink-1.12.0/conf/sql-client-defaults.yaml
> 2021-05-17 03:45:32,771 INFO org.apache.flink.table.client.config.entries.ExecutionEntry [] - Property 'execution.restart-strategy.type' not specified. Using default value: fallback
> 2021-05-17 03:45:33,619 INFO org.apache.flink.table.client.gateway.local.ExecutionContext [] - Executor config: {execution.savepoint.ignore-unclaimed-state=false, execution.attached=true, yarn.application.id=application_1620719181606_1106, execution.shutdown-on-attached-exit=false, pipeline.jars=[file:/home/sdev/flink-1.12.0/opt/flink-sql-client_2.11-1.12.0.jar], high-availability.cluster-id=application_1620719181606_1106, pipeline.classpaths=[], execution.target=yarn-session, $internal.deployment.config-dir=/home/sdev/flink-1.12.0/conf}
> 2021-05-17 03:45:33,783 INFO org.apache.flink.table.client.cli.CliClient [] - Command history file path: /home/sdev/.flink-sql-history
> 2021-05-17 03:47:34,267 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Trying to connect to localhost/127.0.0.1:6123
> 2021-05-17 03:47:34,269 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address 'n-infra-hadoop-client/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:34,271 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:34,272 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:34,272 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:34,273 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:34,273 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:34,274 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:34,274 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:34,275 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:34,276 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:34,276 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Could not connect. Waiting for 1600 msecs before next attempt
> 2021-05-17 03:47:35,877 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Trying to connect to localhost/127.0.0.1:6123
> 2021-05-17 03:47:35,877 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address 'n-infra-hadoop-client/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:35,878 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:35,879 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:35,880 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:35,881 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:35,881 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:35,882 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:35,882 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:35,884 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:35,885 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:35,885 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Could not connect. Waiting for 1804 msecs before next attempt
> 2021-05-17 03:47:37,690 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Trying to connect to localhost/127.0.0.1:6123
> 2021-05-17 03:47:37,692 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address 'n-infra-hadoop-client/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:37,693 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:37,694 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:37,694 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:37,695 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:37,696 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:37,697 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/fe80:0:0:0:f816:3eff:fe81:be62%eth0': Network is unreachable (connect failed)
> 2021-05-17 03:47:37,697 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/*******': Connection refused (Connection refused)
> 2021-05-17 03:47:37,698 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/0:0:0:0:0:0:0:1%lo': Network is unreachable (connect failed)
> 2021-05-17 03:47:37,698 INFO org.apache.flink.runtime.net.ConnectionUtils [] - Failed to connect from address '/127.0.0.1': Connection refused (Connection refused)
> 2021-05-17 03:47:37,698 WARN org.apache.flink.runtime.net.ConnectionUtils [] - Could not connect to localhost/127.0.0.1:6123. Selecting a local address using heuristics.
> 2021-05-17 03:47:38,976 INFO org.apache.flink.table.client.gateway.local.ProgramDeployer [] - Submitting job org.apache.flink.streaming.api.graph.StreamGraph@4b476233 for query yarn-session: select 1`
> 2021-05-17 03:47:39,042 WARN org.apache.flink.yarn.configuration.YarnLogConfigUtil [] - The configuration directory ('/home/sdev/flink-1.12.0/conf') already contains a LOG4J config file.If you want to use logback, then please delete or rename the log configuration file.
> 2021-05-17 03:47:39,599 INFO org.apache.hadoop.yarn.client.api.impl.TimelineClientImpl [] - Timeline service address: http://******:8188/ws/v1/timeline/
> 2021-05-17 03:47:39,699 INFO org.apache.hadoop.yarn.client.RMProxy [] - Connecting to ResourceManager at ****/******:8050
> 2021-05-17 03:47:39,824 INFO org.apache.hadoop.yarn.client.AHSProxy [] - Connecting to Application History server at ******/******:10200
> 2021-05-17 03:47:40,266 WARN org.apache.hadoop.hdfs.shortcircuit.DomainSocketFactory [] - The short-circuit local reads feature cannot be used because libhadoop cannot be loaded.
> 2021-05-17 03:47:40,418 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - No path for the flink jar passed. Using the location of class org.apache.flink.yarn.YarnClusterDescriptor to locate the jar
> 2021-05-17 03:47:40,450 INFO org.apache.flink.yarn.YarnClusterDescriptor [] - Found Web Interface ********:40688 of application 'application_1620719181606_1106'.
> 2021-05-17 03:47:40,466 INFO org.apache.flink.runtime.util.ZooKeeperUtils [] - Enforcing default ACL for ZK connections
> 2021-05-17 03:47:40,466 INFO org.apache.flink.runtime.util.ZooKeeperUtils [] - Using '/flink-1.11.1/application_1620719181606_1106' as Zookeeper namespace.
> 2021-05-17 03:47:40,501 INFO org.apache.flink.shaded.curator4.org.apache.curator.utils.Compatibility [] - Running in ZooKeeper 3.4.x compatibility mode
> 2021-05-17 03:47:40,502 INFO org.apache.flink.shaded.curator4.org.apache.curator.utils.Compatibility [] - Using emulated InjectSessionExpiration
> 2021-05-17 03:47:40,527 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.imps.CuratorFrameworkImpl [] - Starting
> 2021-05-17 03:47:40,534 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT
> 2021-05-17 03:47:40,534 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:host.name=*******
> 2021-05-17 03:47:40,534 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:java.version=1.8.0_201
> 2021-05-17 03:47:40,534 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:java.vendor=Oracle Corporation
> 2021-05-17 03:47:40,535 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:java.home=/usr/lib/jvm/java-8-oracle/jre
> 2021-05-17 03:47:40,535 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client
environment:java.class.path=/home/sdev/flink-1.12.0/lib/clickhouse-jdbc-0.2.jar:/home/sdev/flink-1.12.0/lib/flink-csv-1.12.0.jar:/home/sdev/flink-1.12.0/lib/flink-json-1.12.0.jar:/home/sdev/flink-1.12.0/lib/flink-shaded-zookeeper-3.4.14.jar:/home/sdev/flink-1.12.0/lib/flink-table-blink_2.11-1.12.0.jar:/home/sdev/flink-1.12.0/lib/flink-table_2.11-1.12.0.jar:/home/sdev/flink-1.12.0/lib/log4j-1.2-api-2.12.1.jar:/home/sdev/flink-1.12.0/lib/log4j-api-2.12.1.jar:/home/sdev/flink-1.12.0/lib/log4j-core-2.12.1.jar:/home/sdev/flink-1.12.0/lib/log4j-slf4j-impl-2.12.1.jar:/home/sdev/flink-1.12.0/lib/flink-dist_2.11-1.12.0.jar:/home/sdev/flink-1.12.0/opt/flink-python_2.11-1.12.0.jar:/usr/hdp/2.5.6.0-40/hadoop/conf:/usr/hdp/2.5.6.0-40/hadoop/lib/netty-3.6.2.Final.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/junit-4.11.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-lang3-3.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-collections-3.2.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-codec-1.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/gson-2.2.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-digester-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/xz-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-annotations-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-beanutils-core-1.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/nimbus-jose-jwt-3.9.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/java-xmlbuilder-0.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-net-3.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/hamcrest-core-1.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-xc-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/stax-api-1.0-2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/api-util-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/ranger-yarn-plugin-shim-0.6.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/zookeeper-3.4.6.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/aws-java-sdk-s3-1.10.6.jar:/usr/hd
p/2.5.6.0-40/hadoop/lib/servlet-api-2.5.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-databind-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jcip-annotations-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-math3-3.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/httpcore-4.4.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/httpclient-4.5.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/aws-java-sdk-kms-1.10.6.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/slf4j-api-1.7.10.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/ranger-plugin-classloader-0.6.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jsp-api-2.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/ranger-hdfs-plugin-shim-0.6.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-configuration-1.6.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/guava-11.0.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jersey-core-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-beanutils-1.7.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/xmlenc-0.52.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/paranamer-2.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-cli-1.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jersey-json-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/azure-storage-4.2.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jersey-server-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jets3t-0.9.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jsr305-3.0.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/curator-framework-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/joda-time-2.8.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/ojdbc6.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/avro-1.7.4.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/activation-1
.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-core-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/asm-3.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/azure-keyvault-core-0.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/curator-recipes-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/mockito-all-1.8.5.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jettison-1.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/curator-client-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jsch-0.1.54.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-lang-2.6.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/log4j-1.2.17.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/json-smart-1.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/slf4j-log4j12-1.7.10.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/aws-java-sdk-core-1.10.6.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/jaxb-api-2.2.2.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop/lib/commons-compress-1.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-auth.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-aws-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-common.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-common-2.7.3.2.5.6.0-40-tests.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-azure-datalake-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-azure-datalake.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-common-tests.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-nfs-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-auth-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-aws.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-annotations.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-common-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-azure.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-azure-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-nfs.jar:/usr/hdp/2.5.6.0-40/hadoop/.//hadoop-annotations-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/./:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/netty-3.6.2.Final.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-codec-1.4.jar:/usr/hdp/
2.5.6.0-40/hadoop-hdfs/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/servlet-api-2.5.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/okhttp-2.4.0.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-daemon-1.0.13.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/guava-11.0.2.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jersey-core-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/xmlenc-0.52.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/okio-1.4.0.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-cli-1.2.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jersey-server-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/jsr305-3.0.0.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/xercesImpl-2.9.1.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-logging-1.1.3.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/asm-3.2.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/xml-apis-1.3.04.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/commons-lang-2.6.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/log4j-1.2.17.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/lib/netty-all-4.0.23.Final.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs-2.7.3.2.5.6.0-40-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs-nfs.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-hdfs/.//hadoop-hdfs-nfs-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/netty-3.6.2.Final.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-lang3-3.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-collections-3.2.2.jar:/usr/hdp/2.5
.6.0-40/hadoop-yarn/lib/commons-codec-1.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/gson-2.2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-digester-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/xz-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/zookeeper-3.4.6.2.5.6.0-40-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-annotations-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-beanutils-core-1.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/nimbus-jose-jwt-3.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/java-xmlbuilder-0.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-net-3.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/fst-2.24.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-xc-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/stax-api-1.0-2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/api-util-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/zookeeper-3.4.6.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/servlet-api-2.5.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-databind-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jcip-annotations-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jersey-client-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-math3-3.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/aopalliance-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jaxb-impl-2.2.3-1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/httpcore-4.4.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/javax.inject-1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/http
client-4.5.2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jsp-api-2.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/guice-servlet-3.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-configuration-1.6.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/guava-11.0.2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jersey-core-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-beanutils-1.7.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/xmlenc-0.52.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/paranamer-2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/metrics-core-3.0.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-cli-1.2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jersey-json-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/azure-storage-4.2.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jersey-server-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jets3t-0.9.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jersey-guice-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jsr305-3.0.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/curator-framework-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-logging-1.1.3.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/avro-1.7.4.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/activation-1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-core-2.2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/javassist-3.18.1-GA.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/guice-3.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jackson-jaxrs-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/asm-3.2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/azure-keyvault-core-0.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/curator-recipes-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jettison-1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/curator-client-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jsch-0.1.54.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-lang-2.6.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/log4j-1.2.17.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/l
ib/json-smart-1.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/objenesis-2.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/jaxb-api-2.2.2.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/lib/commons-compress-1.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-client.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-web-proxy.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-api.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-nodemanager-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-registry-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-resourcemanager.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-registry.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-resourcemanager-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-applications-distributedshell-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-common-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-timeline-pluginstorage.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-api-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-client-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-common.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-nodemanager.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-applications-distributedshell.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-common.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-applicationhistoryservice-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-tests-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-common-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-application
historyservice.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-web-proxy-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-sharedcachemanager-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-sharedcachemanager.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-server-timeline-pluginstorage-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-yarn/.//hadoop-yarn-applications-unmanaged-am-launcher.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/netty-3.6.2.Final.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/junit-4.11.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/xz-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/hamcrest-core-1.3.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/leveldbjni-all-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/aopalliance-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/jackson-core-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/javax.inject-1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/guice-servlet-3.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/jersey-core-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/paranamer-2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/jersey-server-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/jersey-guice-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/avro-1.7.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/guice-3.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/asm-3.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/log4j-1.2.17.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/lib/commons-compress-1.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-openstack.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//netty-3.6.2.Final.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//junit-4.11.jar:/usr/hdp/2.5.6.0
-40/hadoop-mapreduce/.//hadoop-streaming.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-auth.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-lang3-3.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-collections-3.2.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-gridmix.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-app-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-codec-1.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//gson-2.2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-digester-1.8.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//xz-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-beanutils-core-1.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//nimbus-jose-jwt-3.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//java-xmlbuilder-0.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-sls.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-net-3.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jackson-mapper-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hamcrest-core-1.3.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jackson-xc-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//stax-api-1.0-2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//api-util-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-extras.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-extras-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-sls-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//zookeeper-3.4.6.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-examples.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.
5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-examples-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//servlet-api-2.5.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jcip-annotations-1.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jetty-util-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-core-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-gridmix-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-2.7.3.2.5.6.0-40-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//okhttp-2.4.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-common.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-archives.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jetty-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-math3-3.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//azure-data-lake-store-sdk-2.1.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jackson-core-asl-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jaxb-impl-2.2.3-1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//httpcore-4.4.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-hs-plugins.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//apacheds-i18n-2.0.0-M15.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//httpclient-4.5.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jsp-api-2.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient-tests.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-configuration-1.6.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//guava-11.0.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-distcp.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-auth-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//htrace-core-3.1.0-incubating.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jersey
-core-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-streaming-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-shuffle-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//snappy-java-1.0.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-archives-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-beanutils-1.7.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//xmlenc-0.52.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-httpclient-3.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-openstack-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-hs.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//paranamer-2.3.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//okio-1.4.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//metrics-core-3.0.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-cli-1.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jersey-json-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-datajoin.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-distcp-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-common-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jersey-server-1.9.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jets3t-0.9.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-app.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jsr305-3.0.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//curator-framework-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-ant-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-logging-1.1.3.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-jobclient.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//avro-1.7.4.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//activation-1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jackson-jaxrs-1.9.13.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//asm-3.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//azure-ke
yvault-core-0.8.0.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//curator-recipes-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//mockito-all-1.8.5.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jettison-1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//curator-client-2.7.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jsch-0.1.54.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-lang-2.6.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//log4j-1.2.17.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//json-smart-1.1.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-rumen-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-mapreduce-client-core.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//jaxb-api-2.2.2.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//api-asn1-api-1.0.0-M20.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-ant.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-rumen.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//commons-compress-1.4.1.jar:/usr/hdp/2.5.6.0-40/hadoop-mapreduce/.//hadoop-datajoin-2.7.3.2.5.6.0-40.jar::jdbc-mysql.jar:mysql-connector-java-5.1.37-bin.jar:mysql-connector-java.jar:/usr/hdp/2.5.6.0-40/tez/tez-dag-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-runtime-library-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-common-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-yarn-timeline-cache-plugin-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-mapreduce-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-examples-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-tests-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-runtime-internals-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-api-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-yarn-timeline-history-with-acls-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-yarn-timeline-history-with-fs-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-yarn-timeline-history-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-job-analyzer-0.7.0.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/tez-history-parser-0.7.0.2.5
.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-collections-3.2.2.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-aws-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-codec-1.4.jar:/usr/hdp/2.5.6.0-40/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-io-2.4.jar:/usr/hdp/2.5.6.0-40/tez/lib/slf4j-api-1.7.5.jar:/usr/hdp/2.5.6.0-40/tez/lib/servlet-api-2.5.jar:/usr/hdp/2.5.6.0-40/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-mapreduce-client-core-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/jersey-client-1.9.jar:/usr/hdp/2.5.6.0-40/tez/lib/protobuf-java-2.5.0.jar:/usr/hdp/2.5.6.0-40/tez/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-math3-3.1.1.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-collections4-4.1.jar:/usr/hdp/2.5.6.0-40/tez/lib/jsr305-2.0.3.jar:/usr/hdp/2.5.6.0-40/tez/lib/guava-11.0.2.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-cli-1.2.jar:/usr/hdp/2.5.6.0-40/tez/lib/jersey-json-1.9.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-mapreduce-client-common-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-azure-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/commons-lang-2.6.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-yarn-server-web-proxy-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-annotations-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/hadoop-yarn-server-timeline-pluginstorage-2.7.3.2.5.6.0-40.jar:/usr/hdp/2.5.6.0-40/tez/lib/metrics-core-3.1.0.jar:/usr/hdp/2.5.6.0-40/tez/conf:/usr/hdp/current/hadoop-client/conf::/etc/hbase/conf:/home/sdev/flink-1.12.0/opt/flink-sql-client_2.11-1.12.0.jar > 2021-05-17 03:47:40,537 INFO > org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper > [] - Client > environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib > 2021-05-17 03:47:40,537 INFO > org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper > [] - Client environment:java.io.tmpdir=/tmp > 2021-05-17 03:47:40,537 INFO > 
org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:java.compiler=<NA>
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:os.name=Linux
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:os.arch=amd64
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:os.version=3.13.0-141-generic
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:user.name=sdev
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:user.home=/home/sdev
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Client environment:user.dir=/home/sdev/flink-1.12.0
> 2021-05-17 03:47:40,537 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Initiating client connection, connectString=******:2181,*******:2181,******:2181 sessionTimeout=60000 watcher=org.apache.flink.shaded.curator4.org.apache.curator.ConnectionState@10ad95cd
> 2021-05-17 03:47:40,558 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.imps.CuratorFrameworkImpl [] - Default schema
> 2021-05-17 03:47:40,568 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Opening socket connection to server *******/******:2181. Will not attempt to authenticate using SASL (unknown error)
> 2021-05-17 03:47:40,570 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Socket connection established to *******/*******:2181, initiating session
> 2021-05-17 03:47:40,581 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - Session establishment complete on server *******/*******:2181, sessionid = 0x277f856243c83ef, negotiated timeout = 40000
> 2021-05-17 03:47:40,585 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.state.ConnectionStateManager [] - State change: CONNECTED
> 2021-05-17 03:47:40,699 INFO org.apache.flink.runtime.leaderretrieval.DefaultLeaderRetrievalService [] - Starting DefaultLeaderRetrievalService with ZookeeperLeaderRetrievalDriver{retrievalPath='/leader/rest_server_lock'}.
> 2021-05-17 03:48:10,756 INFO org.apache.flink.runtime.leaderretrieval.DefaultLeaderRetrievalService [] - Stopping DefaultLeaderRetrievalService.
> 2021-05-17 03:48:10,756 INFO org.apache.flink.runtime.leaderretrieval.ZooKeeperLeaderRetrievalDriver [] - Closing ZookeeperLeaderRetrievalDriver{retrievalPath='/leader/rest_server_lock'}.
> 2021-05-17 03:48:10,757 INFO org.apache.flink.shaded.curator4.org.apache.curator.framework.imps.CuratorFrameworkImpl [] - backgroundOperationsLoop exiting
> 2021-05-17 03:48:10,768 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ZooKeeper [] - Session: 0x277f856243c83ef closed
> 2021-05-17 03:48:10,768 INFO org.apache.flink.shaded.zookeeper3.org.apache.zookeeper.ClientCnxn [] - EventThread shut down for session: 0x277f856243c83ef
> 2021-05-17 03:48:10,769 WARN org.apache.flink.table.client.cli.CliClient [] - Could not execute SQL statement.
> org.apache.flink.table.client.gateway.SqlExecutionException: Error while submitting job.
>     at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeQueryInternal$7(LocalExecutor.java:554) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.gateway.local.ExecutionContext.wrapClassLoader(ExecutionContext.java:257) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.gateway.local.LocalExecutor.executeQueryInternal(LocalExecutor.java:549) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.gateway.local.LocalExecutor.executeQuery(LocalExecutor.java:365) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.cli.CliClient.callSelect(CliClient.java:634) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.cli.CliClient.callCommand(CliClient.java:324) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at java.util.Optional.ifPresent(Optional.java:159) [?:1.8.0_201]
>     at org.apache.flink.table.client.cli.CliClient.open(CliClient.java:216) [flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.SqlClient.openCli(SqlClient.java:141) [flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.SqlClient.start(SqlClient.java:114) [flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.table.client.SqlClient.main(SqlClient.java:196) [flink-sql-client_2.11-1.12.0.jar:1.12.0]
> Caused by: java.util.concurrent.ExecutionException: org.apache.flink.runtime.client.JobSubmissionException: Failed to submit JobGraph.
>     at java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:357) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture.get(CompletableFuture.java:1895) ~[?:1.8.0_201]
>     at org.apache.flink.table.client.gateway.local.LocalExecutor.lambda$executeQueryInternal$7(LocalExecutor.java:552) ~[flink-sql-client_2.11-1.12.0.jar:1.12.0]
>     ... 10 more
> Caused by: org.apache.flink.runtime.client.JobSubmissionException: Failed to submit JobGraph.
>     at org.apache.flink.client.program.rest.RestClusterClient.lambda$submitJob$7(RestClusterClient.java:366) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at java.util.concurrent.CompletableFuture.uniExceptionally(CompletableFuture.java:870) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture$UniExceptionally.tryFire(CompletableFuture.java:852) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1977) ~[?:1.8.0_201]
>     at org.apache.flink.runtime.concurrent.FutureUtils.lambda$retryOperationWithDelay$9(FutureUtils.java:361) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at java.util.concurrent.CompletableFuture.uniWhenComplete(CompletableFuture.java:760) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture$UniWhenComplete.tryFire(CompletableFuture.java:736) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture.postComplete(CompletableFuture.java:474) ~[?:1.8.0_201]
>     at java.util.concurrent.CompletableFuture.completeExceptionally(CompletableFuture.java:1977) ~[?:1.8.0_201]
>     at org.apache.flink.runtime.concurrent.FutureUtils$Timeout.run(FutureUtils.java:1168) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.runtime.concurrent.DirectExecutorService.execute(DirectExecutorService.java:211) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.runtime.concurrent.FutureUtils.lambda$orTimeout$15(FutureUtils.java:549) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_201]
>     at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_201]
>     at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_201]
>     at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_201]
>     at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_201]
>     at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_201]
>     at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_201]
> Caused by: java.util.concurrent.TimeoutException
>     at org.apache.flink.runtime.concurrent.FutureUtils$Timeout.run(FutureUtils.java:1168) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.runtime.concurrent.DirectExecutorService.execute(DirectExecutorService.java:211) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at org.apache.flink.runtime.concurrent.FutureUtils.lambda$orTimeout$15(FutureUtils.java:549) ~[flink-dist_2.11-1.12.0.jar:1.12.0]
>     at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[?:1.8.0_201]
>     at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_201]
>     at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180) ~[?:1.8.0_201]
>     at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293) ~[?:1.8.0_201]
>     at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_201]
>     at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_201]
>     at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_201]
>
> On Fri, May 14, 2021 at 9:01 PM Timo Walther <[hidden email]> wrote:
>
>     Hi Yunhui,
>
>     officially we don't support YARN in the SQL Client yet. This is mostly because it is not tested. However, it could work due to the fact that we are using regular Flink submission APIs under the hood. Are you submitting to a job or session cluster?
>
>     Maybe you can also share the complete log from the `/log` directory with us.
>
>     Regards,
>     Timo
>
>     On 14.05.21 09:49, Yunhui Han wrote:
>     > Hi, all
>     > I ran the flink sql demo from the official web. I got the error below.
>     > Could anyone help with this?
>     > Sincerely
>     > image.png
|


Return to (DEPRECATED) Apache Flink User Mailing List archive.
