Flink Batch job: All slots for groupReduce task scheduled on same machine

Flink Batch job: All slots for groupReduce task scheduled on same machine

|

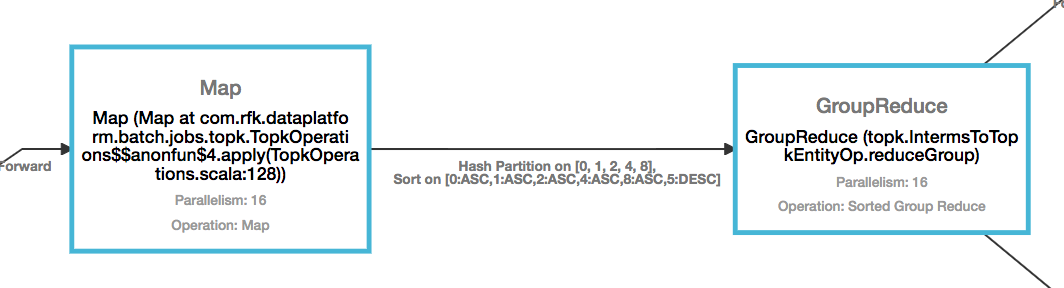

Hello, I have a fink batch job, where I am grouping dataset on some keys, and then using group reduce. Parallelism is set to 16. The slots for the Map task is distributed across all the machines, but for GroupReduce all the slots are being assigned to the same machine. Can you help me understand why/when this can happen? Code looks something like: dataset.map(MapFunction())

Thanks in advance Aneesha |

Re: Flink Batch job: All slots for groupReduce task scheduled on same machine

|

Hi,

Could you please also post where/how you see which tasks are mapped to which slots/TaskManagers? Best, Aljoscha

|

Re: Flink Batch job: All slots for groupReduce task scheduled on same machine

|

Hello Aljoscha

I looked into the Subtasks session on Flink Dashboard, for the about two tasks. Thanks Aneesha

|

Re: Flink Batch job: All slots for groupReduce task scheduled on same machine

|

Could you please send a screenshot?

|

Re: Flink Batch job: All slots for groupReduce task scheduled on same machine

|

2018-02-20, 14:31:58 2018-02-20, 14:52:28 20m 30s Map (Map at com.rfk.dataplatform.batch.jobs.topk.TopkOperations$$anonfun$4.apply(TopkOperations.scala:128)) 10.8 GB 130,639,359 10.8 GB 130,639,359 16 00016000 FINISHED Start Time End Time Duration Bytes received Records received Bytes sent Records sent Attempt Host Status 2018-02-20, 14:43:05 2018-02-20, 14:52:28 9m 22s 693 MB 8,169,369 693 MB 8,169,369 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:32:35 37s 692 MB 8,164,898 692 MB 8,164,898 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:45:52 2018-02-20, 14:52:25 6m 32s 692 MB 8,160,648 692 MB 8,160,648 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:32:53 2018-02-20, 14:33:30 36s 692 MB 8,164,117 692 MB 8,164,117 1 ip-10-17-11-156:53921 FINISHED 2018-02-20, 14:39:05 2018-02-20, 14:39:43 37s 692 MB 8,168,042 692 MB 8,168,042 1 ip-10-17-11-156:53921 FINISHED 2018-02-20, 14:42:12 2018-02-20, 14:46:57 4m 45s 692 MB 8,161,923 692 MB 8,161,923 1 ip-10-17-11-156:53921 FINISHED 2018-02-20, 14:38:13 2018-02-20, 14:38:47 34s 692 MB 8,163,351 692 MB 8,163,351 1 ip-10-17-8-168:54366 FINISHED 2018-02-20, 14:39:34 2018-02-20, 14:40:08 33s 692 MB 8,163,694 692 MB 8,163,694 1 ip-10-17-8-168:54366 FINISHED 2018-02-20, 14:32:09 2018-02-20, 14:32:42 33s 692 MB 8,165,675 692 MB 8,165,675 1 ip-10-17-8-168:54366 FINISHED 2018-02-20, 14:41:34 2018-02-20, 14:46:52 5m 17s 692 MB 8,165,679 692 MB 8,165,679 1 ip-10-17-8-193:33639 FINISHED 2018-02-20, 14:44:03 2018-02-20, 14:47:10 3m 6s 692 MB 8,165,245 692 MB 8,165,245 1 ip-10-17-8-193:33639 FINISHED 2018-02-20, 14:41:20 2018-02-20, 14:41:54 34s 692 MB 8,168,041 692 MB 8,168,041 1 ip-10-17-8-193:33639 FINISHED 2018-02-20, 14:40:55 2018-02-20, 14:41:32 36s 692 MB 8,167,142 692 MB 8,167,142 1 ip-10-17-9-52:36094 FINISHED 2018-02-20, 14:41:35 2018-02-20, 14:46:54 5m 18s 692 MB 8,161,355 692 MB 8,161,355 1 ip-10-17-9-52:36094 FINISHED 2018-02-20, 14:40:08 2018-02-20, 14:40:52 44s 692 MB 8,166,737 692 MB 8,166,737 1 ip-10-17-9-52:36094 FINISHED 2018-02-20, 14:44:23 2018-02-20, 14:47:12 2m 48s 692 MB 8,163,443 692 MB 8,163,443 1 ip-10-17-9-52:36094 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:18 27m 19s GroupReduce (topk.IntermsToTopkEntityOp.reduceGroup) 10.8 GB 130,639,359 3.53 GB 5,163,805 16 00016000 FINISHED Start Time End Time Duration Bytes received Records received Bytes sent Records sent Attempt Host Status 2018-02-20, 14:31:58 2018-02-20, 14:58:49 26m 51s 684 MB 8,098,138 226 MB 323,203 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:01 27m 3s 690 MB 8,210,429 226 MB 322,178 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:06 27m 8s 714 MB 8,483,239 226 MB 322,797 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:57 26m 58s 694 MB 8,176,076 226 MB 322,600 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:02 27m 4s 680 MB 8,005,934 226 MB 323,506 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:15 27m 16s 739 MB 8,708,468 227 MB 323,087 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:39 26m 41s 682 MB 8,015,473 225 MB 322,401 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:51 26m 53s 674 MB 7,994,360 226 MB 323,354 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:18 27m 19s 715 MB 8,581,459 226 MB 322,303 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:44 26m 45s 682 MB 7,912,704 228 MB 322,915 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:07 27m 8s 706 MB 8,288,227 226 MB 322,480 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:59 27m 1s 698 MB 8,152,011 225 MB 322,836 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:04 26m 5s 646 MB 7,598,798 226 MB 322,270 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:22 26m 24s 656 MB 7,769,116 225 MB 321,911 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:59:12 27m 14s 719 MB 8,440,687 226 MB 322,699 1 ip-10-17-10-20:46079 FINISHED 2018-02-20, 14:31:58 2018-02-20, 14:58:48 26m 50s 693 MB 8,204,240 227 MB 323,265 1 ip-10-17-10-20:46079 FINISHED

|

Re: Flink Batch job: All slots for groupReduce task scheduled on same machine

|

Hmm, that seems weird. Could you please also post the code of the complete program? Only the parts that build the program graph should be enough. And maybe a screenshot of the complete graph from the dashboard.

-- Aljoscha

|

«

Return to (DEPRECATED) Apache Flink User Mailing List archive.

|

1 view|%1 views

| Free forum by Nabble | Edit this page |