Flink 1.11 TaskManagerLogFileHandler -Exception


I am trying to switch to Flink 1.11 with the new EMR release 6.1. I have created a 3-node EMR cluster with Flink 1.11. My job runs fine; the only issue is that I cannot see any logs for the job manager or the task manager. I see the exception below in the stdout of the job manager:

    09:21:36.761 [flink-akka.actor.default-dispatcher-17] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Failed to transfer file from TaskExecutor container_1599202660950_0009_01_000003.
    java.util.concurrent.CompletionException: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604) ~[?:1.8.0_252]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_252]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_252]
        at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_252]
    Caused by: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        ... 5 more
    09:21:36.773 [flink-akka.actor.default-dispatcher-17] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Unhandled exception.
    org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1604) ~[?:1.8.0_252]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_252]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_252]
        at java.lang.Thread.run(Thread.java:748) ~[?:1.8.0_252]
    09:21:57.399 [flink-akka.actor.default-dispatcher-15] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Failed to transfer file from TaskExecutor container_1599202660950_0009_01_000003.
    TaskManagerLogFileHandler - Unhandled exception. org.apache.flink.util.FlinkException

Is there any new setting I have to configure in Flink 1.11? The same job works fine on the previous version of EMR with Flink 1.10.


I have also checked the ports, and all ports from 0-65535 are open. I also do not see any taskmanager.log being generated under my container logs on the task machine.

On Fri, Sep 4, 2020 at 2:58 PM aj <[hidden email]> wrote:


Hi

Could you check the log4j.properties or the related configuration file used to generate logs to see whether it contains anything unexpected?

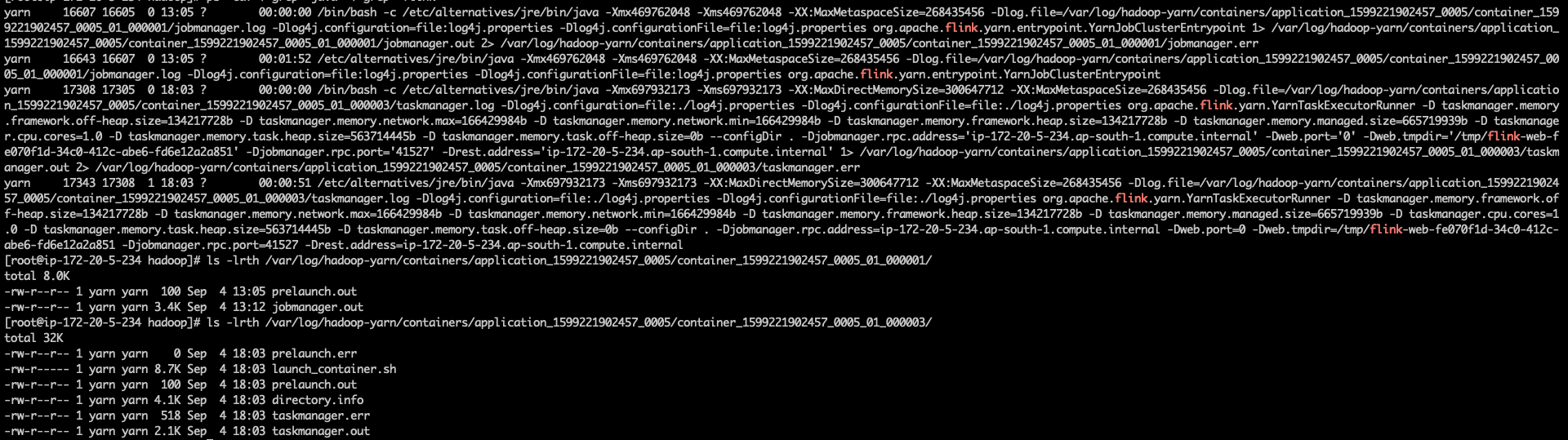

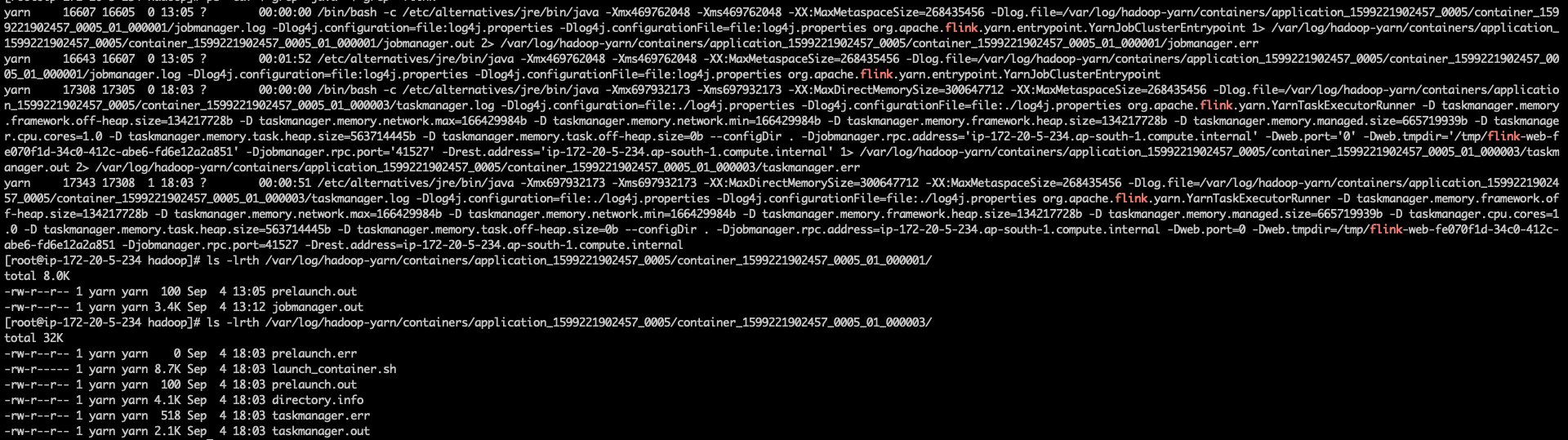

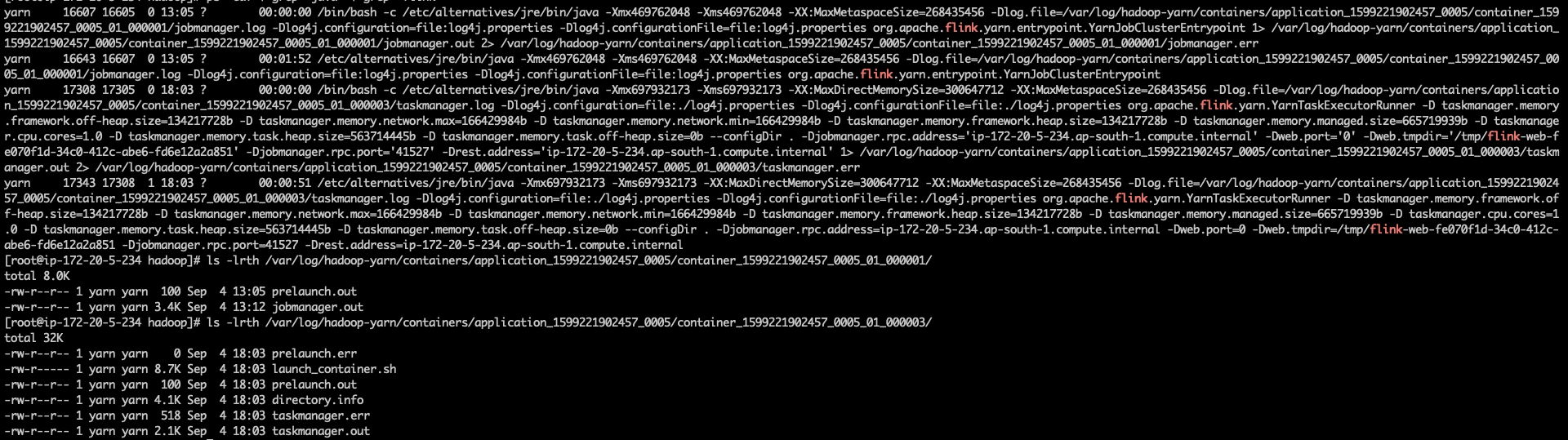

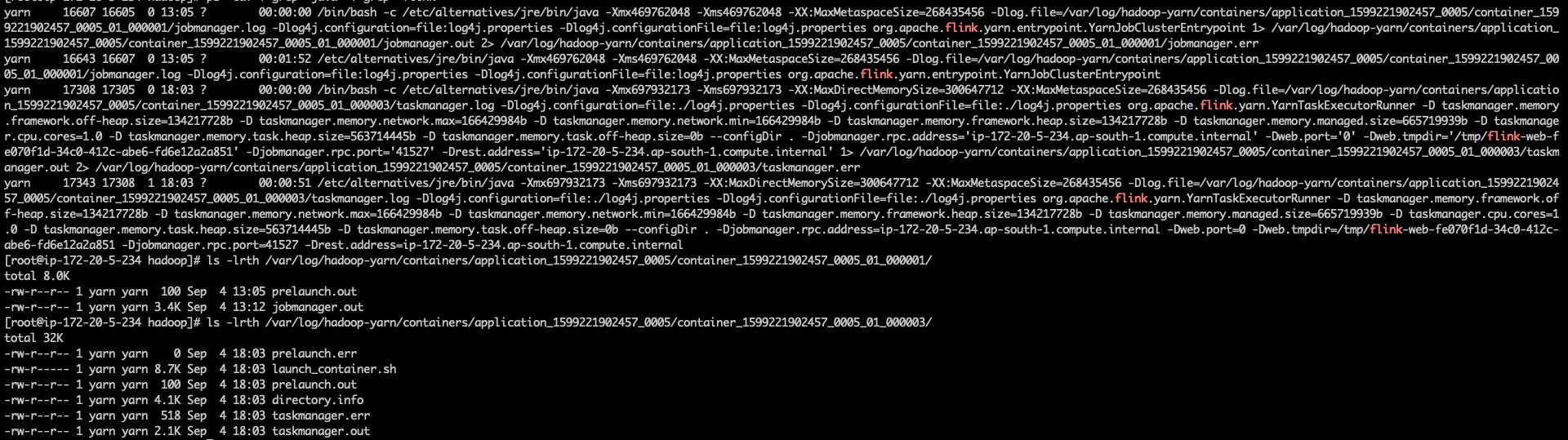

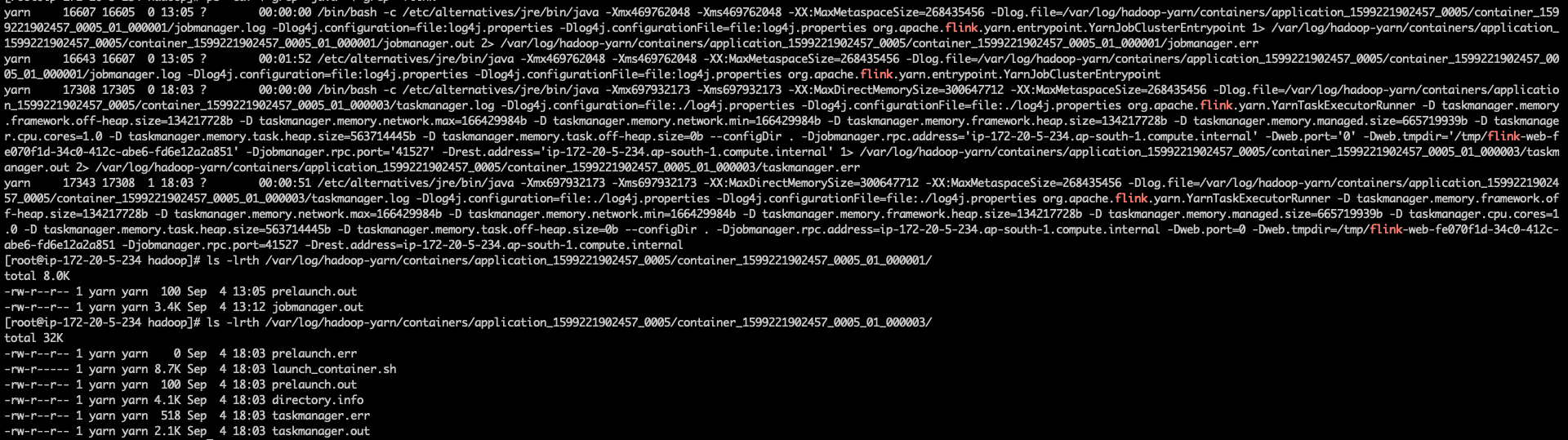

You could also log in to the machine and run 'ps -ef | grep java' to find the command used to launch the taskmanager, as Flink prints the location of 'taskmanager.log' [1] via the property {log.file}.
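The check above can be sketched in Python: given one line of `ps -ef` output, pull out the `-D` system properties and read `log.file`. This is a minimal sketch, not Flink tooling. The helper name `jvm_system_properties`, the sample path, and the presence of `-Dlog4j.configurationFile` on the command line are assumptions for illustration; only the `log.file` property and the `YarnTaskExecutorRunner` class name come from the thread.

```python
import shlex


def jvm_system_properties(command_line):
    """Return the -Dkey=value JVM system properties found in a command line."""
    props = {}
    for token in shlex.split(command_line):
        if token.startswith("-D") and "=" in token:
            key, value = token[2:].split("=", 1)
            props[key] = value
    return props


# Hypothetical command line, shaped like one row of `ps -ef | grep java`.
sample = (
    "/usr/lib/jvm/java/bin/java -Xmx1g "
    "-Dlog.file=/var/log/hadoop-yarn/containers/app_0001/container_0001/taskmanager.log "
    "-Dlog4j.configurationFile=file:./log4j.properties "
    "org.apache.flink.yarn.YarnTaskExecutorRunner"
)

props = jvm_system_properties(sample)
print("log.file =", props.get("log.file"))
```

If `log.file` points at the expected container directory but no file appears there, as reported later in this thread, the logging configuration itself is the next thing to inspect.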

Best,

Yun Tang


The location is correct, but these log files are not being generated.

On Fri, Sep 4, 2020 at 11:00 PM Yun Tang <[hidden email]> wrote:


I am not able to understand what is happening. Please help me resolve this issue so that I can migrate to Flink 1.11.

On Sat, Sep 5, 2020 at 12:41 AM aj <[hidden email]> wrote:


Hi

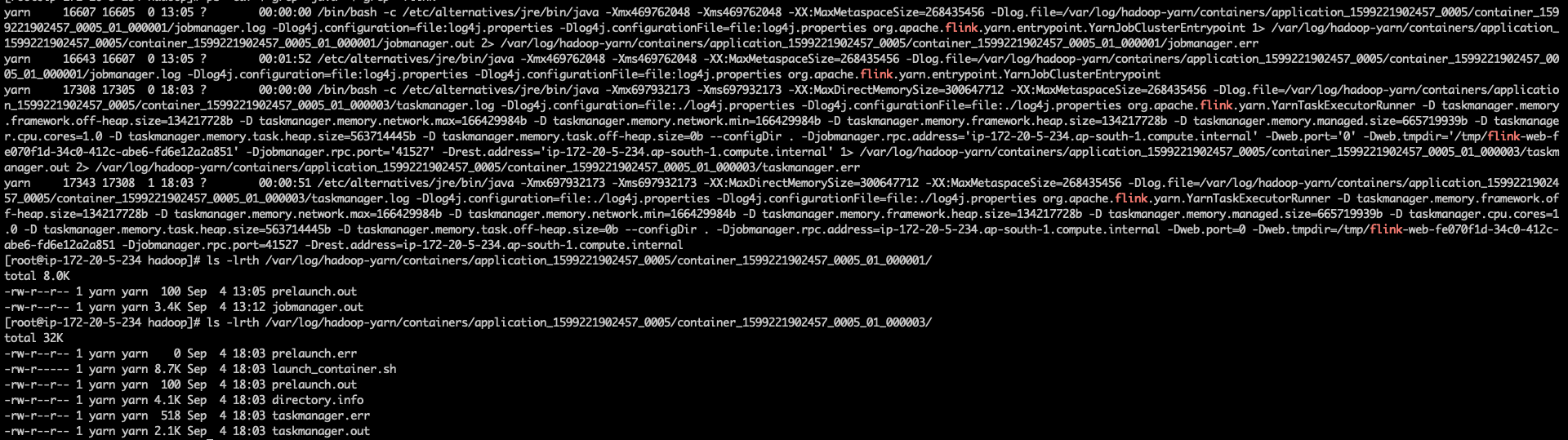

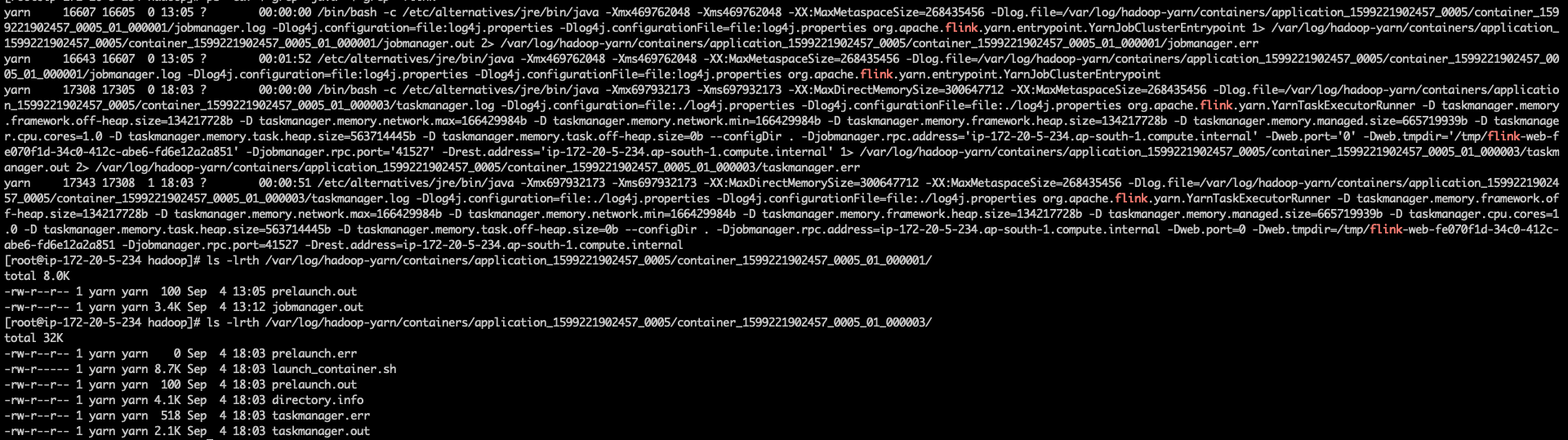

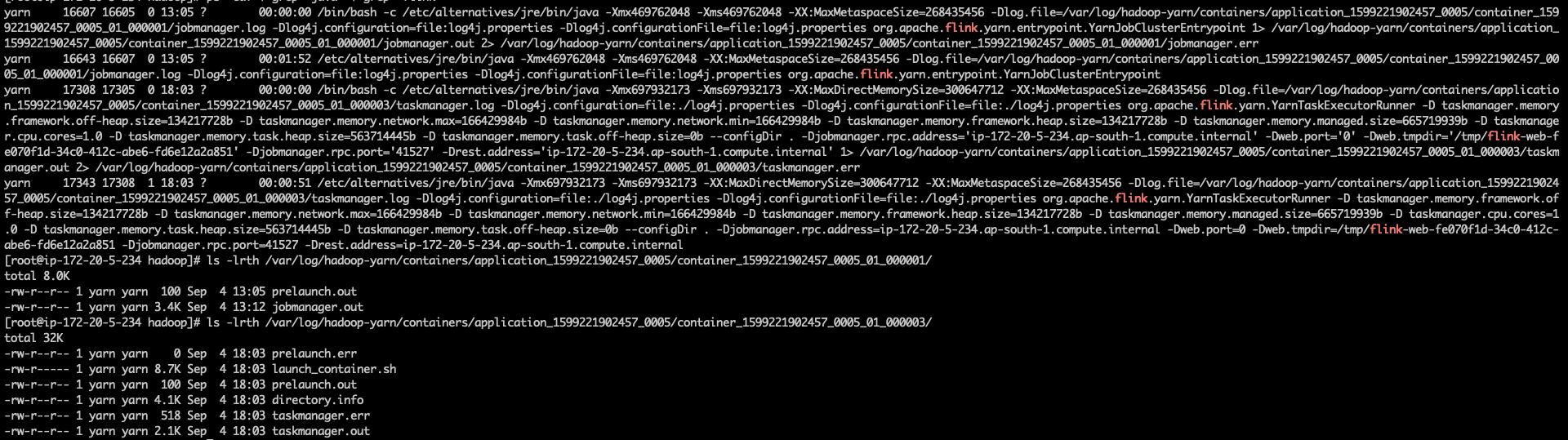

You could check 'launch_container.sh' to see where log4j.properties is located and what it contains, and you could also check 'jobmanager.out' or 'taskmanager.out' to see why the logs are not created.



Hi Anuj,

Could you check whether your jar files contain any log4j properties files? Accidentally including other log4j properties files in the classpath is one of the common causes of log issues.

Thank you~

Xintong Song

On Mon, Sep 7, 2020 at 12:36 PM Yun Tang <[hidden email]> wrote:
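One way to run the jar check suggested above is to list each jar's entries and flag well-known logging configuration file names. The sketch below uses only Python's standard `zipfile` module. The function name `find_logging_configs` and the list of file names are illustrative assumptions, and the demo builds a small in-memory archive rather than reading a real job jar.

```python
import io
import zipfile

# File names commonly used for logging configuration (an illustrative list).
LOG_CONFIG_NAMES = (
    "log4j.properties",
    "log4j2.properties",
    "log4j.xml",
    "log4j2.xml",
    "logback.xml",
)


def find_logging_configs(jar):
    """List jar entries whose file name is a well-known logging config name.

    `jar` may be a path to a jar file or a binary file-like object.
    """
    with zipfile.ZipFile(jar) as archive:
        return [
            name
            for name in archive.namelist()
            if name.rsplit("/", 1)[-1] in LOG_CONFIG_NAMES
        ]


# Demo with a hypothetical in-memory jar that bundles a stray log4j.properties.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as demo_jar:
    demo_jar.writestr("com/example/Job.class", b"")
    demo_jar.writestr("log4j.properties", "log4j.rootLogger=INFO, console\n")

print(find_logging_configs(buf))  # ['log4j.properties']
```

For a real job, pass the path of the job jar, and of each jar in Flink's lib folder, instead of the in-memory buffer.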


[hidden email] I have checked launch_container.sh and it is using the Flink default log4j.properties file. Jobmanager.out prints only the following, even with the DEBUG level enabled:

    WARNING: sun.reflect.Reflection.getCallerClass is not supported. This will impact performance.
    11:49:58.216 [flink-akka.actor.default-dispatcher-15] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Failed to transfer file from TaskExecutor container_1599221902457_0014_01_000002.
    java.util.concurrent.CompletionException: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1700) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) ~[?:?]
        at java.lang.Thread.run(Thread.java:834) ~[?:?]
    Caused by: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        ... 5 more
    11:49:58.226 [flink-akka.actor.default-dispatcher-15] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Unhandled exception.
    org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1700) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) ~[?:?]
        at java.lang.Thread.run(Thread.java:834) ~[?:?]
    11:50:17.256 [flink-akka.actor.default-dispatcher-16] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Failed to transfer file from TaskExecutor container_1599221902457_0014_01_000002.
    java.util.concurrent.CompletionException: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1700) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) ~[?:?]
        at java.lang.Thread.run(Thread.java:834) ~[?:?]
    Caused by: org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        ... 5 more
    11:50:17.257 [flink-akka.actor.default-dispatcher-16] ERROR org.apache.flink.runtime.rest.handler.taskmanager.TaskManagerLogFileHandler - Unhandled exception.
    org.apache.flink.util.FlinkException: The file LOG does not exist on the TaskExecutor.
        at org.apache.flink.runtime.taskexecutor.TaskExecutor.lambda$requestFileUploadByFilePath$25(TaskExecutor.java:1742) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.concurrent.CompletableFuture$AsyncSupply.run(CompletableFuture.java:1700) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128) ~[?:?]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628) ~[?:?]
        at java.lang.Thread.run(Thread.java:834) ~[?:?]

Another thing is that my Prometheus reporter is also giving an error:

    WARNING: sun.reflect.Reflection.getCallerClass is not supported. This will impact performance.
    11:46:04.146 [main] ERROR org.apache.flink.runtime.metrics.ReporterSetup - Could not instantiate metrics reporter prom. Metrics might not be exposed/reported.
    java.lang.RuntimeException: Could not start PrometheusReporter HTTP server on any configured port. Ports: 9250
        at org.apache.flink.metrics.prometheus.PrometheusReporter.open(PrometheusReporter.java:74) ~[flink-metrics-prometheus_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.runtime.metrics.ReporterSetup.createReporterSetup(ReporterSetup.java:130) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.runtime.metrics.ReporterSetup.lambda$setupReporters$1(ReporterSetup.java:239) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.util.Optional.ifPresent(Optional.java:183) ~[?:?]
        at org.apache.flink.runtime.metrics.ReporterSetup.setupReporters(ReporterSetup.java:236) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.runtime.metrics.ReporterSetup.fromConfiguration(ReporterSetup.java:148) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.<init>(TaskManagerRunner.java:143) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.runtime.taskexecutor.TaskManagerRunner.runTaskManager(TaskManagerRunner.java:305) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.yarn.YarnTaskExecutorRunner.lambda$runTaskManagerSecurely$0(YarnTaskExecutorRunner.java:107) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at java.security.AccessController.doPrivileged(Native Method) ~[?:?]
        at javax.security.auth.Subject.doAs(Subject.java:423) ~[?:?]
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1730) ~[hadoop-common-3.2.1-amzn-1.jar:?]
        at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.yarn.YarnTaskExecutorRunner.runTaskManagerSecurely(YarnTaskExecutorRunner.java:106) ~[flink-dist_2.12-1.11.0.jar:1.11.0]
        at org.apache.flink.yarn.YarnTaskExecutorRunner.main(YarnTaskExecutorRunner.java:77) ~[flink-dist_2.12-1.11.0.jar:1.11.0]

Yet I can see that the node is listening on the port:

    netstat -l | grep "9250"
    tcp6 0 0 [::]:9250 [::]:* LISTEN

And when I run this, I get the metrics output:

    flink_jobmanager_Status_JVM_GarbageCollector_G1_Old_Generation_Count{host="ip_172_20_5_234_ap_south_1_compute_internal",} 0.0
    # HELP flink_jobmanager_Status_JVM_Memory_Mapped_Count Count (scope: jobmanager_Status_JVM_Memory_Mapped)
    # TYPE flink_jobmanager_Status_JVM_Memory_Mapped_Count gauge
    flink_jobmanager_Status_JVM_Memory_Mapped_Count{host="ip_172_20_5_234_ap_south_1_compute_internal",} 0.0
    # HELP flink_jobmanager_Status_JVM_Memory_Heap_Committed Committed (scope: jobmanager_Status_JVM_Memory_Heap)
    # TYPE flink_jobmanager_Status_JVM_Memory_Heap_Committed gauge
    flink_jobmanager_Status_JVM_Memory_Heap_Committed{host="ip_172_20_5_234_ap_south_1_compute_internal",} 4.69762048E8
    # HELP flink_jobmanager_job_numberOfInProgressCheckpoints numberOfInProgressCheckpoints (scope: jobmanager_job)
    # TYPE flink_jobmanager_job_numberOfInProgressCheckpoints gauge
    flink_jobmanager_job_numberOfInProgressCheckpoints{job_id="f7e1d9f5ee7637142489b89fbd4381ad",host="ip_172_20_5_234_ap_south_1_compute_internal",job_name="test",} 0.0
    # HELP flink_jobmanager_Status_JVM_Memory_NonHeap_Max Max (scope: jobmanager_Status_JVM_Memory_NonHeap)
    # TYPE flink_jobmanager_Status_JVM_Memory_NonHeap_Max gauge
    flink_jobmanager_Status_JVM_Memory_NonHeap_Max{host="ip_172_20_5_234_ap_south_1_compute_internal",} 7.80140544E8
    # HELP flink_jobmanager_job_restartingTime restartingTime (scope: jobmanager_job)
    # TYPE flink_jobmanager_job_restartingTime gauge
    flink_jobmanager_job_restartingTime{job_id="f7e1d9f5ee7637142489b89fbd4381ad",host="ip_172_20_5_234_ap_south_1_compute_internal",job_name="test",} 0.0

So I do not understand why this error appears in the task manager logs.

@Xintong

> Could you check whether your jar files contain any log4j properties files? Accidentally including other log4j properties files in the classpath is one of the common causes of log issues.

I do not think this is happening, because I am using the same jar that was working on Flink 1.10.

On Mon, Sep 7, 2020 at 12:02 PM Xintong Song <[hidden email]> wrote:


Thanks for all the help. I found the issue and it is resolved now. It was an issue with the EMR cluster:

1. The lib folder has the jars for log4j 2, but the log4j.properties file is in the log4j 1 format.
2. The plugins folders are missing.

I am not sure how these got changed in the EMR cluster's Flink 1.11 version. I found out when I downloaded Flink 1.11 locally and compared the two. I am not sure what they have done, but I really was not expecting it.

On Mon, Sep 7, 2020 at 5:27 PM aj <[hidden email]> wrote:
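The first mismatch described above, log4j 2 jars paired with a log4j 1 style properties file, can be spotted by looking at the key names in log4j.properties. The sketch below is a rough heuristic, not an official tool: it assumes log4j 1 keys start with `log4j.` while log4j 2 properties keys start with prefixes such as `rootLogger`, `appender.` or `logger.`. The function name and both sample snippets are illustrative and are not the files from this cluster.

```python
V2_PREFIXES = ("rootLogger", "appender.", "appenders", "logger.", "loggers", "property.")


def guess_log4j_syntax(properties_text):
    """Guess whether properties text uses log4j 1.x or log4j 2.x key naming."""
    keys = []
    for line in properties_text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")):
            continue  # skip blank lines and comments
        keys.append(line.split("=", 1)[0].strip())

    looks_v1 = any(key.startswith("log4j.") for key in keys)
    looks_v2 = any(key.startswith(V2_PREFIXES) for key in keys)
    if looks_v1 and looks_v2:
        return "mixed"
    if looks_v1:
        return "log4j1"
    if looks_v2:
        return "log4j2"
    return "unknown"


# Illustrative log4j 1.x style configuration.
V1_SAMPLE = """
log4j.rootLogger=INFO, file
log4j.appender.file=org.apache.log4j.FileAppender
log4j.appender.file.file=${log.file}
"""

# Illustrative log4j 2.x properties-format configuration.
V2_SAMPLE = """
rootLogger.level = INFO
rootLogger.appenderRef.file.ref = MainAppender
appender.main.name = MainAppender
appender.main.type = File
appender.main.fileName = ${sys:log.file}
"""

print(guess_log4j_syntax(V1_SAMPLE))
print(guess_log4j_syntax(V2_SAMPLE))
```

A result of `log4j1` for the file that `launch_container.sh` points to, on a cluster whose lib folder ships log4j 2 jars, would match the situation reported here.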


To be honest, my experience with EMR has never been really positive. If you think about it, it is a huge task to guarantee the interoperability of tens of big data frameworks, keep the versions fairly recent, and still backport crucial fixes. AWS is doing a fine job, but I saw issues in pretty much every version, and they are really hard to track down.

I'd recommend going with a Docker-based approach nowadays. Just pick the components that you really need; it is usually much more stable anyway. Plus you know where everything is and can upgrade versions much more easily.

A good starting point is using EKS with Ververica community edition [1]. There is usually no need to manually set up the K8s pods if you go this route.

On Mon, Sep 7, 2020 at 8:15 PM aj <[hidden email]> wrote:

-- Arvid Heise | Senior Java Developer


Hi Fanbin,

You do not have to copy log4j.properties to log4j2.properties. log4j2 uses a different format, so change your log4j.properties file so that it uses the log4j2 format. Please replace your log4j.properties with this default setting for reference:

On Fri, Oct 16, 2020 at 2:50 AM Fanbin Bu <[hidden email]> wrote:
