Hi,

I recently ran into a problem in Flink 1.10.0: when a job fails, Flink does not release the job's resources first.

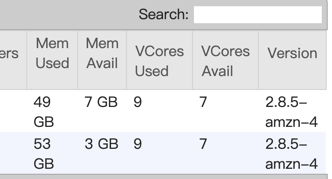

As you can see, I am running Flink on YARN, and it cannot allocate a TaskManager because there is no memory left.

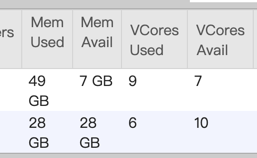

If I cancel the job, memory becomes available in the cluster again.

In 1.8.2, the job restarts normally. Is this a bug?

Thanks.