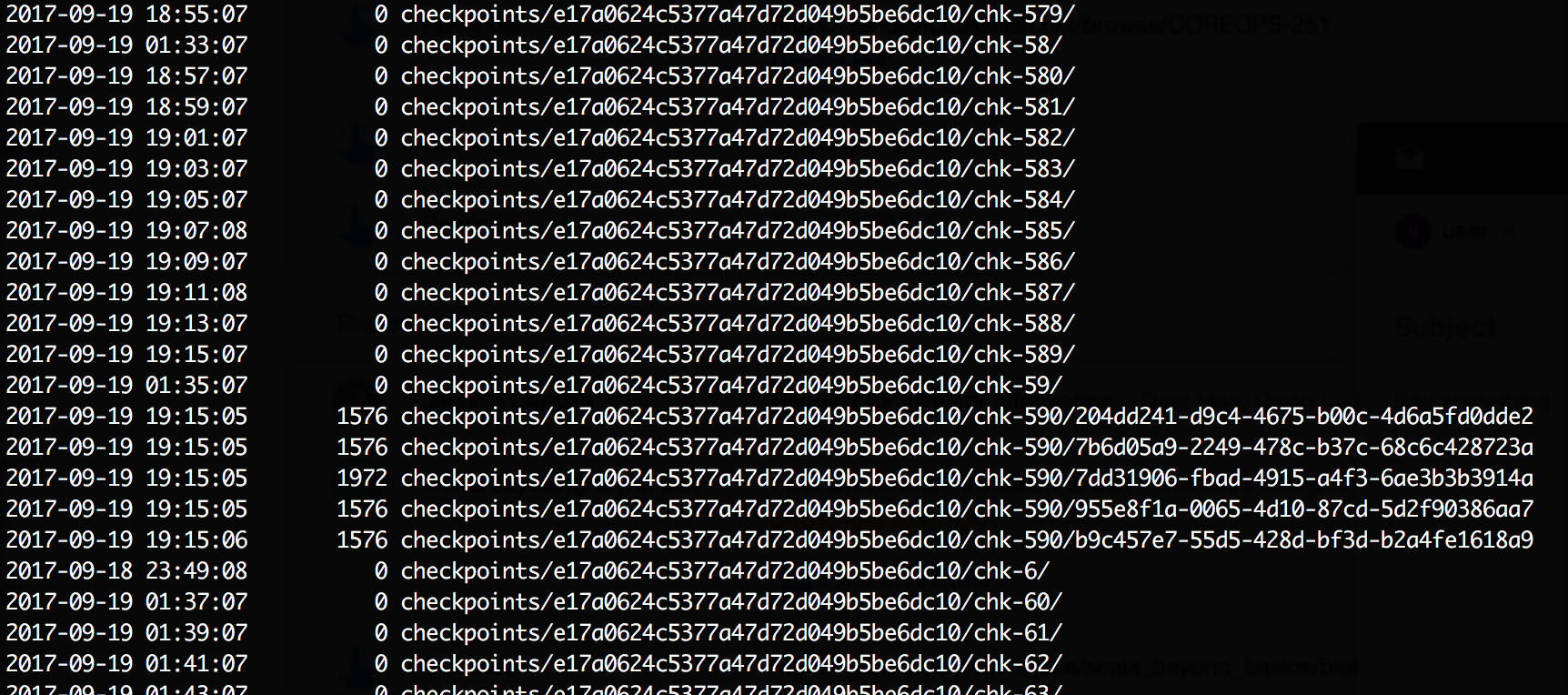

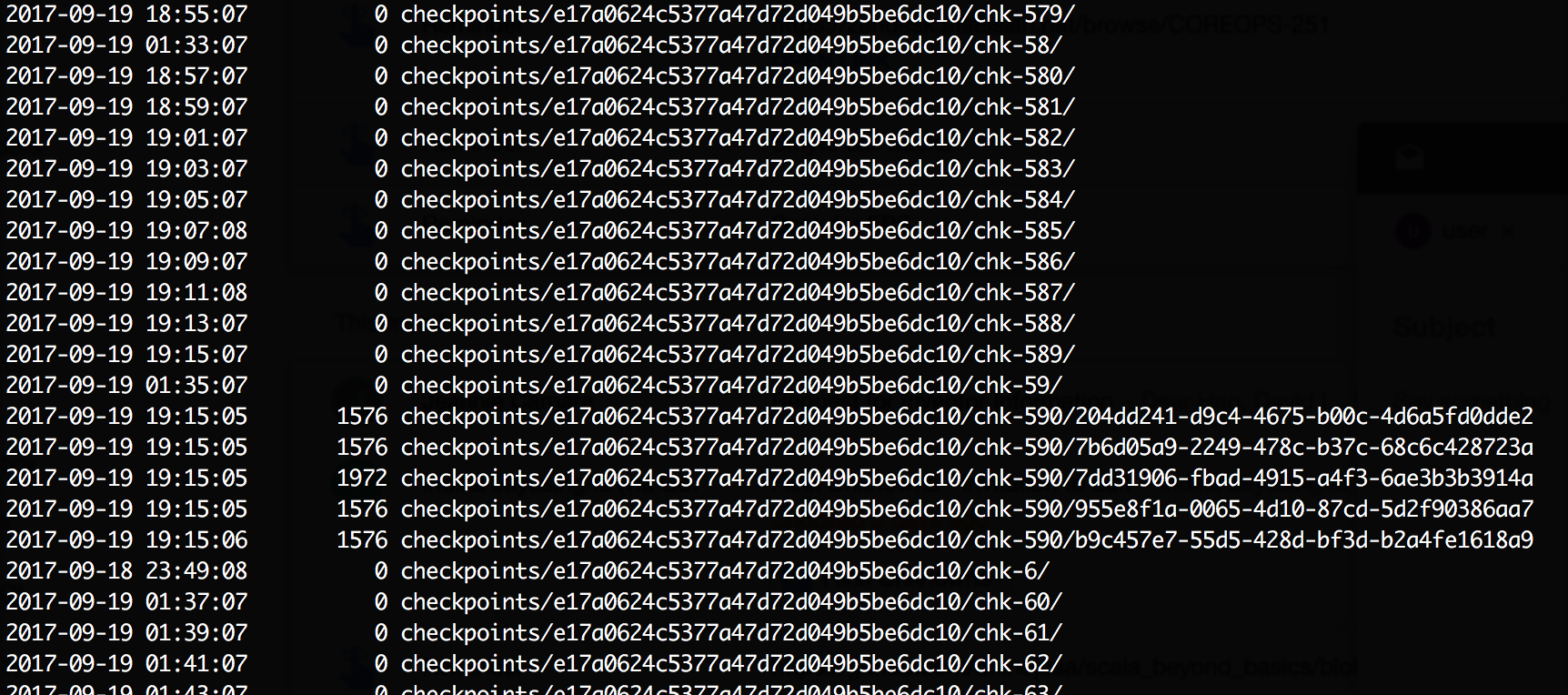

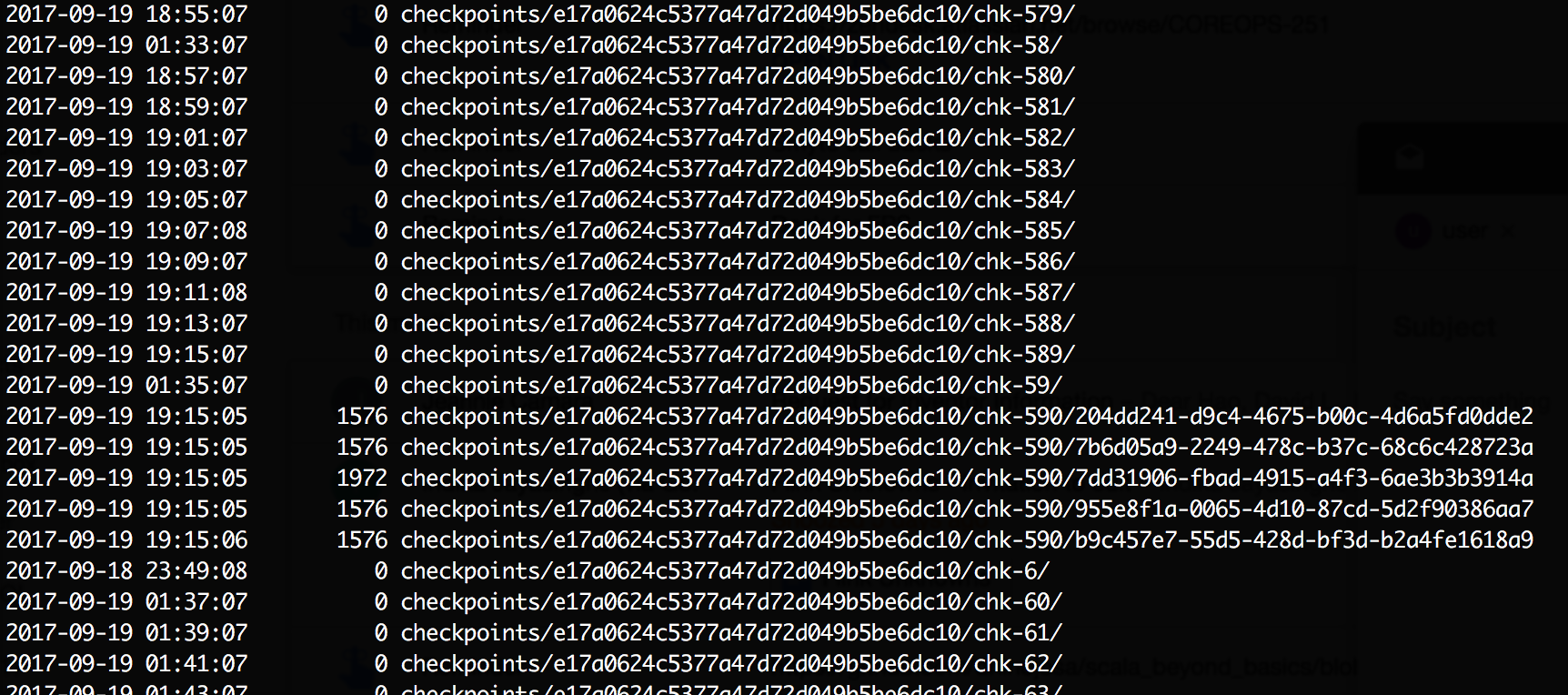

Empty directories left over from checkpointing


Hi, I am using RocksDB and S3 as the storage backend for my checkpoints. Can Flink delete these empty directories automatically, or do I need a background job to do the deletion? I know this has been discussed before, but I have not been able to get a concrete answer yet. Thanks
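A background cleanup job of the kind asked about here could work from a listing of the checkpoint bucket and delete only the directory marker objects that have nothing left under them. This is a minimal sketch under stated assumptions, not a Flink or Hadoop feature: it assumes s3a-style markers are empty objects whose keys end in "/" and s3n-style markers are keys ending in "_$folder$", and `marker_prefix`, `empty_markers`, and `prune_plan` are hypothetical helper names. Listing and deleting objects through a real S3 client is left out, and a real job would also have to skip directories that a running job may still be writing into (for example by only considering sufficiently old entries).

```python
from typing import Iterable, List, Optional

# Assumed marker conventions (not verified here):
# s3a writes an empty "<dir>/" object, s3n writes an empty "<dir>_$folder$" object.
S3N_MARKER_SUFFIX = "_$folder$"


def marker_prefix(key: str) -> Optional[str]:
    """Return the key prefix (ending in '/') that a marker stands for, or None."""
    if key.endswith("/"):
        return key
    if key.endswith(S3N_MARKER_SUFFIX):
        return key[: -len(S3N_MARKER_SUFFIX)] + "/"
    return None


def empty_markers(keys: Iterable[str]) -> List[str]:
    """Return the marker keys that have no other object under their prefix."""
    all_keys = sorted(set(keys))
    empty = []
    for key in all_keys:
        prefix = marker_prefix(key)
        if prefix is None:
            continue  # a regular object, e.g. a state file
        # Any other key under the prefix (a file or a nested marker) means "not empty".
        if not any(k != key and k.startswith(prefix) for k in all_keys):
            empty.append(key)
    return empty


def prune_plan(keys: Iterable[str]) -> List[str]:
    """Collect every marker that becomes empty; children are listed before parents."""
    remaining = set(keys)
    to_delete: List[str] = []
    while True:
        batch = empty_markers(remaining)
        if not batch:
            return to_delete
        to_delete.extend(batch)
        # Pretend the batch was deleted, so parents may become empty on the next pass.
        remaining.difference_update(batch)


if __name__ == "__main__":
    listing = [
        "checkpoints/job-a/",
        "checkpoints/job-a/chk-1/",
        "checkpoints/job-a/chk-2/",
        "checkpoints/job-a/chk-2/state-0",
        "checkpoints/job-b_$folder$",
    ]
    print(prune_plan(listing))
    # ['checkpoints/job-a/chk-1/', 'checkpoints/job-b_$folder$']
```

The repeated passes let a parent marker become deletable once its last empty child is gone. The nested `any` scan is quadratic, which is fine for a sketch but would want a sorted-prefix scan for large buckets.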

Re: Empty directories left over from checkpointing


There are a couple of related JIRAs:

On Tue, Sep 19, 2017 at 12:20 PM, Hao Sun <[hidden email]> wrote:


Thanks Elias! It seems there is no better answer than "do not care about them for now" or deleting them with a background job.

On Tue, Sep 19, 2017 at 4:11 PM Elias Levy <[hidden email]> wrote:


Re: Empty directories left over from checkpointing


Hi,

Best,
Stefan


Re: Empty directories left over from checkpointing


Some updates on this:

Aside from reworking how the S3 directory handling is done, we also looked into supporting S3 differently than we currently do. Currently, support goes strictly through Hadoop's S3 file systems, which we need to change, because we want it to be possible to use Flink without Hadoop dependencies. In the next release, we will have S3 file systems without Hadoop dependencies:

- One implementation wraps and shades a newer version of s3a, for compatibility with the current behavior.
- The second is interesting for this directory problem: it uses Presto's S3 support, which is a bit different from Hadoop's s3n and s3a. It does not create empty directory marker files, so it does not try as hard as s3a and s3n to make S3 look like a file system, but that is actually an advantage for checkpointing. With that implementation, the issue mentioned here should not exist.

Caveat: the new file systems and their aggressive shading still need to be tested at scale, but we are happy to take any feedback on this.

You can use them by simply dropping the respective JARs from "/opt" into "/lib" and using the file system scheme "s3://". The configuration is as in Hadoop/Presto, but you can put the config keys into the Flink configuration; they will be forwarded to the Hadoop configuration.

I hope this makes using S3 a lot easier and more fun...

On Wed, Sep 20, 2017 at 2:49 PM, Stefan Richter <[hidden email]> wrote:
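Why a marker-free file system avoids the leftover directories can be illustrated with a toy in-memory model. This is a hedged sketch, not the real Hadoop or Presto code: `ToyStore`, `MarkerStore`, `MarkerFreeStore`, and `simulate_checkpoint` are hypothetical names, the marker-free variant simply assumes `mkdirs` writes nothing, and the model leaves out whatever directory deletion Flink itself attempts. It only captures the point made in the message above: a marker written by `mkdirs` is a separate object that has to be deleted on its own, whereas a store that never writes markers has nothing to leave behind.

```python
from typing import List, Set


class ToyStore:
    """In-memory stand-in for an S3 bucket: just a set of object keys."""

    def __init__(self) -> None:
        self.keys: Set[str] = set()

    def mkdirs(self, path: str) -> None:
        raise NotImplementedError

    def write(self, path: str) -> None:
        self.keys.add(path)

    def delete(self, path: str) -> None:
        self.keys.discard(path)


class MarkerStore(ToyStore):
    """s3a/s3n-like: a directory is materialized as an empty marker object."""

    def mkdirs(self, path: str) -> None:
        self.keys.add(path.rstrip("/") + "/")


class MarkerFreeStore(ToyStore):
    """Presto-like (assumed): directories exist only as key prefixes."""

    def mkdirs(self, path: str) -> None:
        pass  # nothing is written, so nothing can be left over


def simulate_checkpoint(store: ToyStore) -> List[str]:
    """Create a checkpoint directory, write a state file, then discard the file."""
    store.mkdirs("checkpoints/chk-1")
    store.write("checkpoints/chk-1/state-0")
    store.delete("checkpoints/chk-1/state-0")
    return sorted(store.keys)


if __name__ == "__main__":
    print(simulate_checkpoint(MarkerStore()))      # ['checkpoints/chk-1/']
    print(simulate_checkpoint(MarkerFreeStore()))  # []
```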


Re: Empty directories left over from checkpointing


Stephan,

Thanks for taking care of this. We'll give it a try once 1.4 drops.

On Sat, Oct 14, 2017 at 1:25 PM, Stephan Ewen <[hidden email]> wrote:


Return to (DEPRECATED) Apache Flink User Mailing List archive.
