Compiler error while using 'CsvTableSource'


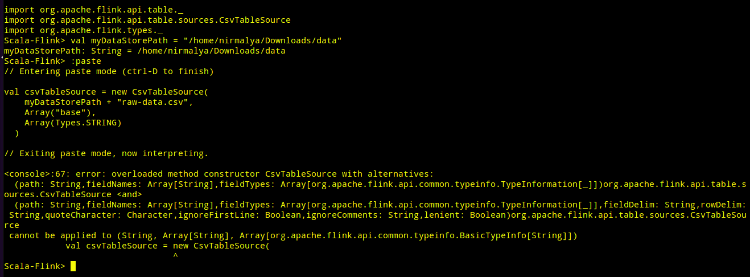

I am using flink-shell, available with flink-1.2-SNAPSHOT.

While loading a CSV file into a CsvTableSource, following the example given in the documentation, I get this error. I am not sure what the reason is. Am I missing an import of an implicit somewhere? Any help is appreciated.

-- Nirmalya

Re: Compiler error while using 'CsvTableSource'


Hi Nirmalya,

could you try casting Types.STRING to an org.apache.flink.api.common.typeinfo.TypeInformation[String]?

Cheers,
Till

On Thu, Feb 2, 2017 at 5:55 PM, nsengupta <[hidden email]> wrote:


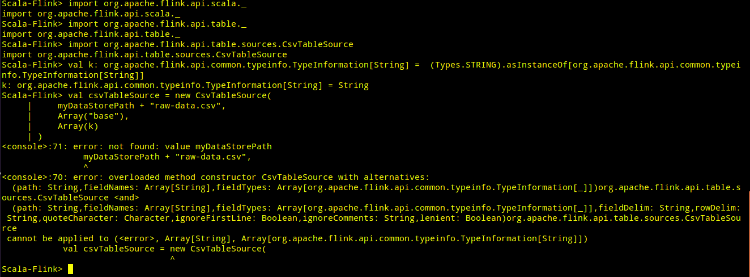

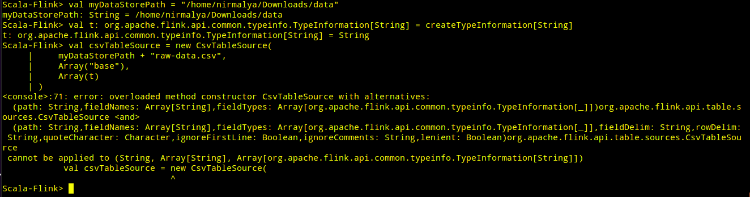

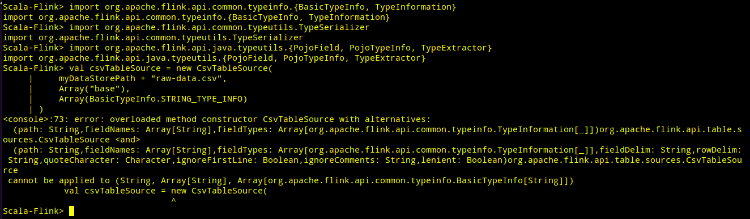

Hello Till,

Many thanks for the quick reply. I have tried to follow your suggestion, with no luck:

Just to give it a shot, I have tried this too (following the Flink documentation):

I took a cursory look at the source code and stumbled upon this file: ./flink-libraries/flink-table/src/test/scala/org/apache/flink/table/utils/CommonTestData.scala

Here, I see that you are using BasicTypeInfo while creating a CsvTableSource, and I have tried that too:

Because I told Maven to skip tests (skipTests) while building the Flink binaries, I don't know whether this particular test case passed. If it did, then I think we can conclude that this is a case of Scala failing to resolve a type, perhaps due to an implicit. If it didn't, then we perhaps have an easier problem to solve. Do you think I should run all the tests in my environment? It may take time, but I can do that if it helps us.

-- Nirmalya


Till,

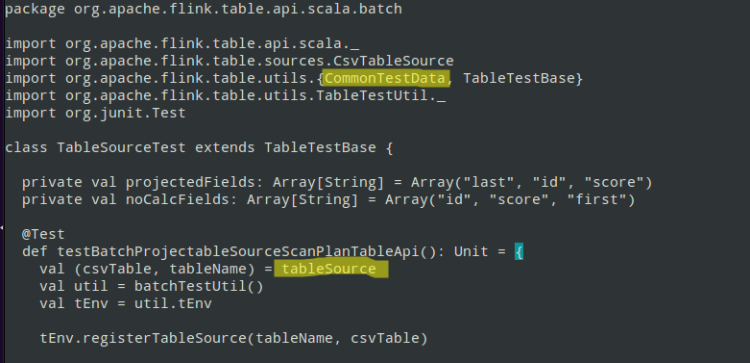

FWIW, I have run the entire test suite for the latest Flink snapshot. Almost all test cases passed, particularly this one:

This case uses a built-in loaded CSV (in org.apache.flink.table.api.scala.batch.TableSourceTest.scala):

Because this test case runs, I presume that the compiler is able to sort out the TypeInformation while building flink-libraries, but while running, it fails to do so for some reason.

-- Nirmalya

Re: Compiler error while using 'CsvTableSource'


This should do the trick.

Cheers,

On Fri, Feb 3, 2017 at 6:39 AM, nsengupta <[hidden email]> wrote:


Till,

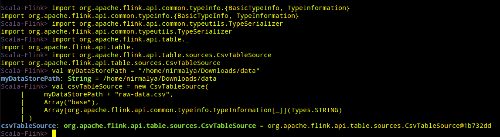

Many thanks. Just to confirm that it is working fine at my end, here's a screenshot.

This is Flink 1.1.4, but Flink 1.2 and Flink 1.3 shouldn't be any problem. It never struck me that the lack of covariance in Scala Arrays was the source of the problem. Bravo!

BTW, I am curious to know how the test cases worked, just to add to my knowledge of Scala. We didn't pass any type hint to the compiler there! Could you please give a hint in a line or two? TIA.
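The snippet Till posted did not survive in this archive, so the following is only a minimal sketch of the array invariance issue mentioned above, not his actual fix. `TypeInfo`, `describe`, and the object name are hypothetical stand-ins (the real Flink class is `TypeInformation`), chosen so the example runs without Flink on the classpath.

```scala
object ArrayInvarianceSketch {
  // Hypothetical stand-in for Flink's TypeInformation[T]; not the real class.
  final case class TypeInfo[T](name: String)

  val StringInfo: TypeInfo[String] = TypeInfo[String]("String")
  val IntInfo: TypeInfo[Int] = TypeInfo[Int]("Int")

  // Hypothetical consumer that, like a table source, accepts mixed field types.
  def describe(fieldTypes: Array[TypeInfo[_]]): String =
    fieldTypes.map(_.name).mkString(", ")

  // Scala arrays are invariant: an Array[TypeInfo[String]] is not an
  // Array[TypeInfo[_]], even though TypeInfo[String] conforms to TypeInfo[_].
  val narrow: Array[TypeInfo[String]] = Array(StringInfo, StringInfo)
  // describe(narrow) // does not compile: type mismatch

  // Stating the element type explicitly produces an array of the wanted type.
  val wide: Array[TypeInfo[_]] = Array[TypeInfo[_]](StringInfo, IntInfo)

  def main(args: Array[String]): Unit =
    println(describe(wide))
}
```

The point of the sketch is only that the element type of an `Array` must match exactly, so an explicit type annotation or type argument on the array is one way to give the compiler the type it expects.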

Re: Compiler error while using 'CsvTableSource'


I think the problem is that there are effectively two constructors with the same signature. One is defined with default arguments, and the other has the same signature as the first when you leave out all default arguments. I assume that this confuses the Scala compiler, and that the call only works if you specify the right types or pass at least one of the parameters that has a default argument.

Cheers,
Till

On Fri, Feb 3, 2017 at 12:49 PM, nsengupta <[hidden email]> wrote:
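The constructor layout Till describes can be sketched roughly as follows. This is an illustration under stated assumptions, not Flink's actual `CsvTableSource`: `Source`, `TypeInfo`, and all parameter names are hypothetical, and the failing call is left commented out because its exact compiler behaviour is not verified here.

```scala
object OverloadedConstructorSketch {
  // Hypothetical stand-in for Flink's TypeInformation[T]; not the real class.
  final case class TypeInfo[T](name: String)

  // Hypothetical source class: the primary constructor has default arguments.
  class Source(
      val path: String,
      val fieldTypes: Array[TypeInfo[_]],
      val delimiter: String = ",",
      val ignoreFirstLine: Boolean = false) {

    // Auxiliary constructor with the same shape as the primary one
    // when every default argument is left out.
    def this(path: String, fieldTypes: Array[TypeInfo[_]]) =
      this(path, fieldTypes, ",", false)
  }

  // An explicitly typed array works with either constructor.
  val fieldTypes: Array[TypeInfo[_]] =
    Array[TypeInfo[_]](TypeInfo[String]("String"), TypeInfo[Int]("Int"))

  // Two arguments: both constructors fit, and the argument types are exact.
  val viaShortForm = new Source("data.csv", fieldTypes)

  // Passing a parameter that has a default leaves only the primary constructor.
  val viaNamedDefault = new Source("data.csv", fieldTypes, delimiter = ";")

  // The kind of call the thread reports as problematic (untyped array literal,
  // no defaulted parameter given); kept commented out, not verified here:
  // new Source("data.csv", Array(TypeInfo[String]("String"), TypeInfo[Int]("Int")))

  def main(args: Array[String]): Unit = {
    println(viaShortForm.delimiter)
    println(viaNamedDefault.delimiter)
  }
}
```

Both working calls follow the two remedies named in the reply: give the array the right type up front, or pass at least one parameter that has a default so that only one constructor fits.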


Thanks, Till, for taking the time to share your understanding.

-- N

On Sun, Feb 5, 2017 at 12:49 AM, Till Rohrmann [via Apache Flink User Mailing List archive.] <[hidden email]> wrote:

Software Technologist

http://www.linkedin.com/in/nirmalyasengupta

"If you have built castles in the air, your work need not be lost. That is where they should be. Now put the foundation under them."

Re: Compiler error while using 'CsvTableSource'


I created an issue to make this a bit more user-friendly in the future.

https://issues.apache.org/jira/browse/FLINK-5714

Timo

On 05/02/17 at 06:08, nsengupta wrote:


Thanks, Timo. Do I need to add anything to the ticket? Please let me know. I will do the needful.

-- N

On Mon, Feb 6, 2017 at 2:25 PM, Timo Walther [via Apache Flink User Mailing List archive.] <[hidden email]> wrote:

