Checkpoints timing out for no apparent reason

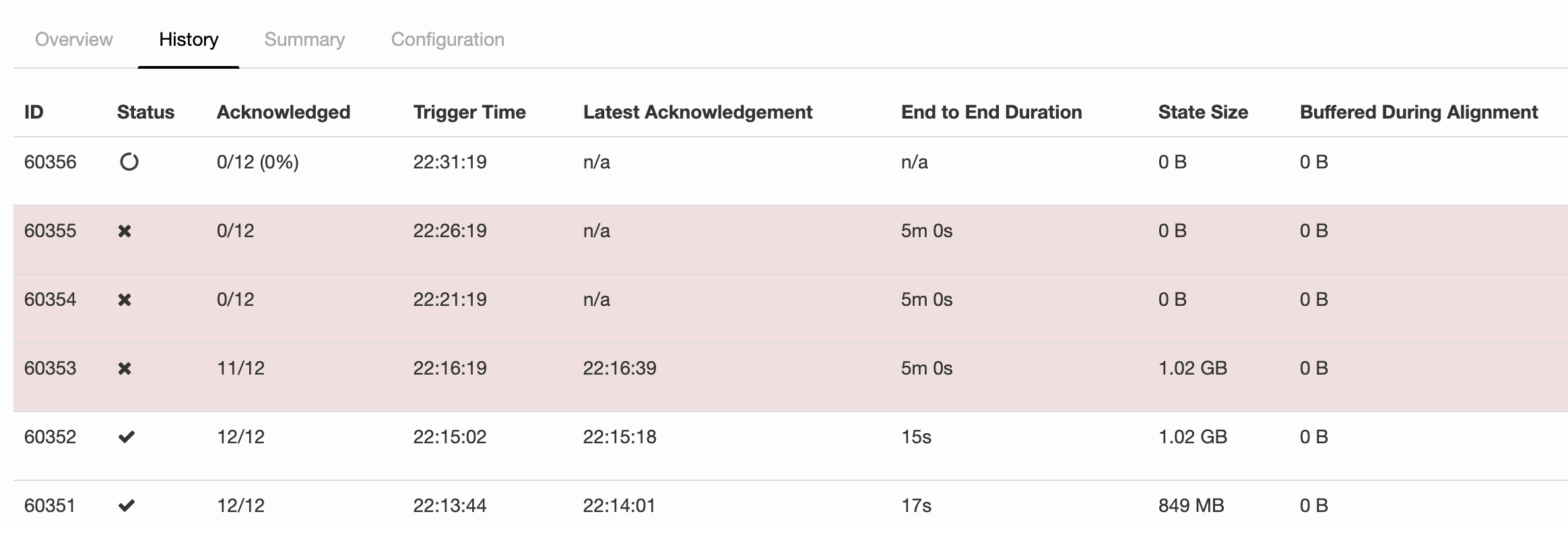

We have an issue with a job that occasionally times out while creating snapshots for no apparent reason.

Details:
- Flink 1.7.2
- Checkpoints are saved to S3 with presto
- Incremental checkpoints are used

What might be the cause of this issue? It feels like some internal S3 client timeout issue, but I didn't find any configuration for such a timeout.

-- Sent from: http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/

Re: Checkpoints timing out for no apparent reason

Hi,

The image did not show.

An incremental checkpoint includes:
1) flushing the memtable to SST files;
2) checkpointing RocksDB;
3) snapshotting the metadata;
4) uploading the needed SST files to remote storage.

The first three steps are in the sync part, and the fourth step is in the async part. Could you please check whether the sync or the async part takes too long? For the sync part, you could check the disk performance during the checkpoint; for the async part, you should check the network performance and the S3 client.

Best,
Congxian

On Wed, Jul 17, 2019 at 4:02 AM spoganshev <[hidden email]> wrote: We have an issue with a job when it occasionally times out while creating
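As a rough illustration of the triage described above, here is a minimal Java sketch. It is not Flink code: `CheckpointPhaseTriage`, `SubtaskTiming`, and `slowerPart` are hypothetical names, and the durations are assumed to be copied by hand from the per-subtask checkpoint statistics. It only compares the slowest sync part with the slowest async part to suggest where to look first.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of Flink: suggests which checkpoint part to
// investigate first, given per-subtask sync/async durations.
final class CheckpointPhaseTriage {

    enum Suspect { DISK, NETWORK_OR_S3_CLIENT }

    // Plain holder for one subtask's durations in milliseconds (assumed to be
    // copied by hand from the checkpoint statistics).
    static final class SubtaskTiming {
        final long syncMillis;
        final long asyncMillis;

        SubtaskTiming(long syncMillis, long asyncMillis) {
            this.syncMillis = syncMillis;
            this.asyncMillis = asyncMillis;
        }
    }

    // Compares the slowest sync part with the slowest async part across subtasks.
    static Suspect slowerPart(List<SubtaskTiming> timings) {
        long maxSync = 0;
        long maxAsync = 0;
        for (SubtaskTiming t : timings) {
            maxSync = Math.max(maxSync, t.syncMillis);
            maxAsync = Math.max(maxAsync, t.asyncMillis);
        }
        // Sync part: memtable flush, RocksDB checkpoint, metadata snapshot (local disk).
        // Async part: upload of SST files to remote storage (network, S3 client).
        return maxSync >= maxAsync ? Suspect.DISK : Suspect.NETWORK_OR_S3_CLIENT;
    }

    public static void main(String[] args) {
        // Made-up numbers for illustration only.
        List<SubtaskTiming> timings = Arrays.asList(
                new SubtaskTiming(120, 95_000),
                new SubtaskTiming(140, 4_000));
        System.out.println(slowerPart(timings)); // prints NETWORK_OR_S3_CLIENT
    }
}
```

A single slow subtask is enough to time out the whole checkpoint, which is why the sketch takes the maximum rather than the average.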

Re: Checkpoints timing out for no apparent reason

The image should be visible now at http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/Checkpoints-timing-out-for-no-apparent-reason-td28793.html#none

It doesn't look like a disk performance or network issue. It feels more like some buffer overflowing, or a timeout due to slightly bigger files being uploaded to S3.

Re: Checkpoints timing out for no apparent reason

Hi Sergei,

If you just want to try increasing the timeouts, you could change the checkpoint timeout with env.getCheckpointConfig().setCheckpointTimeout(...) [1] or the S3 client timeouts (see the presto or hdfs configuration for S3; there are some network timeouts) [2]. Otherwise, it would be easier to investigate the cause of the failures if you provided the JM and TM logs.

Best,

On Thu, Jul 18, 2019 at 5:00 PM spoganshev <[hidden email]> wrote: The image should be visible now at

Re: Checkpoints timing out for no apparent reason

I've looked into this problem a little more, and it looks like it is caused by some problem with the Kinesis sink. There is an exception in the logs at the moment when the job gets restored after being stalled for about 15 minutes:

Encountered an unexpected expired iterator AAAAAAAAAAGzsd7J/muyVo6McROAzdW+UByN+g4ttJjFS/LkswyZHprdlBxsH6B7UI/8DIJu6hj/Vph9OQ6Oz7Rhxg9Dj64w58osOSwf05lX/N+c8EUVRIQY/yZnwjtlmZw1HAKWSBIblfkGIMmmWFPu/UpQqzX7RliA2XWeDvkLAdOcogGmRgceI95rOMEUIWYP7z2PmiQ7TlL4MOG+q/NYEiLgyuoVw7bkm+igE+34caD7peXuZA== for shard StreamShardHandle{streamName='staging-datalake-struct', shard='{ShardId: shardId-000000000005,ParentShardId: shardId-000000000001,HashKeyRange: {StartingHashKey: 255211775190703847597530955573826158592,EndingHashKey: 340282366920938463463374607431768211455},SequenceNumberRange: {StartingSequenceNumber: 49591208977124932291714633368622679061889586376843722834,}}'}; refreshing the iterator ...

It's logged by org.apache.flink.streaming.connectors.kinesis.internals.ShardConsumer

Re: Checkpoints timing out for no apparent reason

Looks like this is the issue: https://issues.apache.org/jira/browse/FLINK-11164

We'll try switching to 1.8 and see if it helps.

Re: Checkpoints timing out for no apparent reason

Switching to 1.8 didn't help. The timeout exception from Kinesis is a consequence, not a cause.
