Checkpoint was declined (tasks not ready)

Checkpoint was declined (tasks not ready)

|

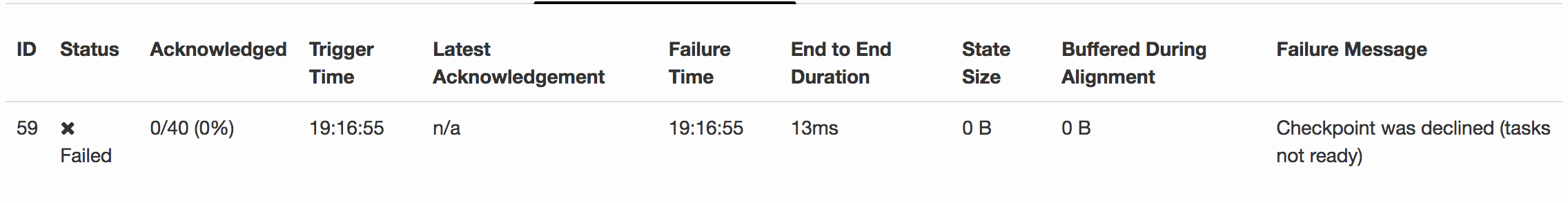

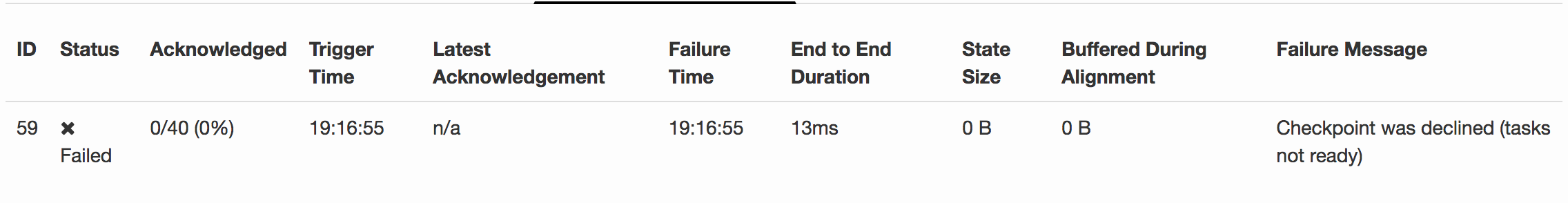

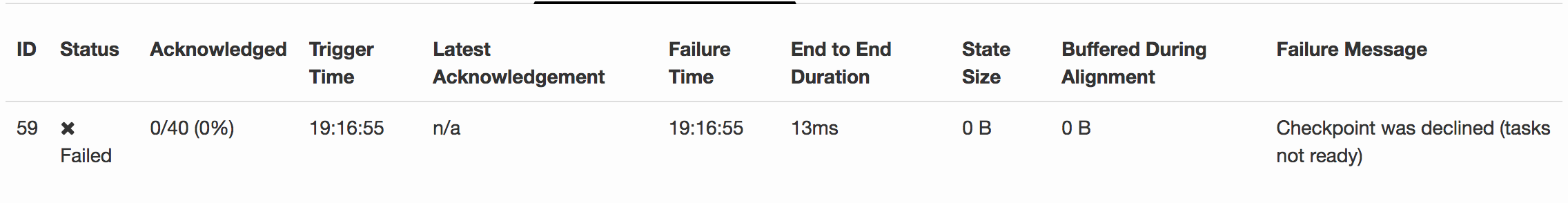

Hi,

I'm noticing a weird issue with our Flink streaming job. We use an async I/O operator that makes an HTTP call, and in certain cases, when the async task times out, it throws an exception that causes the job to restart.

    java.lang.Exception: An async function call terminated with an exception. Failing the AsyncWaitOperator.
        at org.apache.flink.streaming.api.operators.async.Emitter.output(Emitter.java:136)
        at org.apache.flink.streaming.api.operators.async.Emitter.run(Emitter.java:83)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: java.util.concurrent.ExecutionException: java.util.concurrent.TimeoutException: Async function call has timed out.
        at org.apache.flink.runtime.concurrent.impl.FlinkFuture.get(FlinkFuture.java:110)

After the job restarts (we have a fixed restart strategy), we notice that the checkpoints start failing continuously with this message:

    Checkpoint was declined (tasks not ready)

But we can see that the job is running: it is processing data, the accumulators we have are being incremented, and so on. Only checkpointing fails, with the "tasks not ready" message.

I wanted to reach out to the community to see if anyone else has experienced this issue before.

~ Karthik

Re: Checkpoint was declined (tasks not ready)

We are using Flink 1.3.1 in standalone mode with an HA job manager setup.

~ Karthik

On Fri, Oct 6, 2017 at 8:22 PM, Karthik Deivasigamani <[hidden email]> wrote:

Re: Checkpoint was declined (tasks not ready)

As long as this does not appear all the time, but only once in a while, it should not be a problem.

It simply means that this particular checkpoint could not be triggered, because some sources were not ready yet. It should try another checkpoint and then be okay.

On Fri, Oct 6, 2017 at 4:53 PM, Karthik Deivasigamani <[hidden email]> wrote:

Re: Checkpoint was declined (tasks not ready)

|

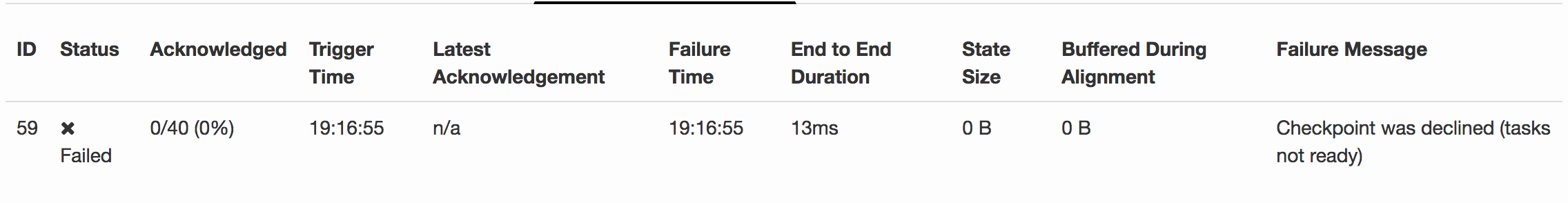

Hi Stephan, Once the job restarts due to an async io operator timeout we notice that its checkpoints never succeed again. But the job is running fine and is processing data.On Mon, Oct 9, 2017 at 3:19 PM, Stephan Ewen <[hidden email]> wrote:

|

Re: Checkpoint was declined (tasks not ready)

We also have a similar problem. It happens really often when we invoke async operators (the ordered one). But we also observe that the job is not starting properly: we don't process any data when such problems appear.

thanks,
maciek

On 09/10/2017 12:10, Karthik Deivasigamani wrote:

Re: Checkpoint was declined (tasks not ready)

It seems that one of the operators is stuck during recovery:

    prio=5 os_prio=0 tid=0x00007f634bb31000 nid=0xd5e in Object.wait() [0x00007f63f13cc000]

On 23/10/2017 13:54, Maciek Próchniak wrote:

Re: Checkpoint was declined (tasks not ready)

|

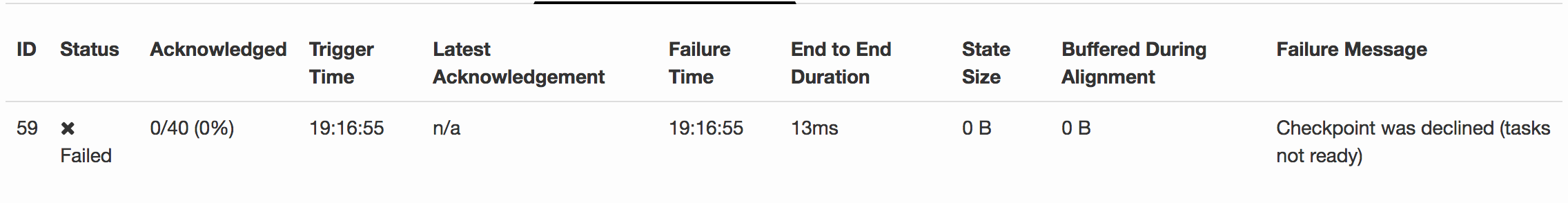

Ok, looks like we've found the cause of this issue. The scenario looks like

this: 1. The queue is full (let's assume that its capacity is N elements) 2. There is some pending element waiting, so the pendingStreamElementQueueEntry field in AsyncWaitOperator is not null and while-loop in addAsyncBufferEntry method is trying to add this element to the queue (but element is not added because queue is full) 3. Now the snapshot is taken - the whole queue of N elements is being written into the ListState in snapshotState method and also (what is more important) this pendingStreamElementQueueEntry is written to this list too. 4. The process is being restarted, so it tries to recover all the elements and put them again into the queue, but the list of recovered elements hold N+1 element and our queue capacity is only N. Process is not started yet, so it can not process any element and this one element is waiting endlessly. But it's never added and the process will never process anything. Deadlock. 5. Trigger is fired and indeed discarded because the process is not running yet. If something is unclear in my description - please let me know. We will also try to reproduce this bug in some unit test and then report Jira issue. -- Sent from: http://apache-flink-user-mailing-list-archive.2336050.n4.nabble.com/ |
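The scenario above can be reproduced in miniature outside Flink. The following is only a sketch under stated assumptions: it uses a plain `java.util.concurrent.ArrayBlockingQueue` as a stand-in for the operator's internal queue, and the class and method names (`RestoreDeadlockSketch`, `snapshot`, `restore`) are hypothetical, not Flink APIs. It also uses a timeout on the insert so that the example terminates, whereas the behaviour described in the thread is an unbounded wait.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class RestoreDeadlockSketch {

    /** Builds the snapshotted state: N queued elements plus the one pending element. */
    static List<String> snapshot(int capacity) {
        List<String> state = new ArrayList<>();
        for (int i = 0; i < capacity; i++) {
            state.add("queued-" + i);
        }
        state.add("pending"); // the extra element that makes the list N+1 long
        return state;
    }

    /**
     * Puts recovered elements back into a bounded queue while nothing consumes from it.
     * Returns how many elements fit before an insert timed out.
     */
    static int restore(BlockingQueue<String> queue, List<String> recovered, long timeoutMillis) {
        int restored = 0;
        try {
            for (String element : recovered) {
                // Assumption: a timeout stands in for the endless wait so the sketch can finish.
                if (!queue.offer(element, timeoutMillis, TimeUnit.MILLISECONDS)) {
                    break; // queue is full and no consumer is running yet
                }
                restored++;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return restored;
    }

    public static void main(String[] args) {
        int capacity = 3;
        List<String> recovered = snapshot(capacity);
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(capacity);
        int restored = restore(queue, recovered, 200);
        System.out.println("recovered=" + recovered.size() + ", restored=" + restored);
    }
}
```

With a capacity of 3, the snapshot holds 4 elements and only 3 of them can be restored; the fourth insert never succeeds because no consumer has been started.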

Re: Checkpoint was declined (tasks not ready)

|

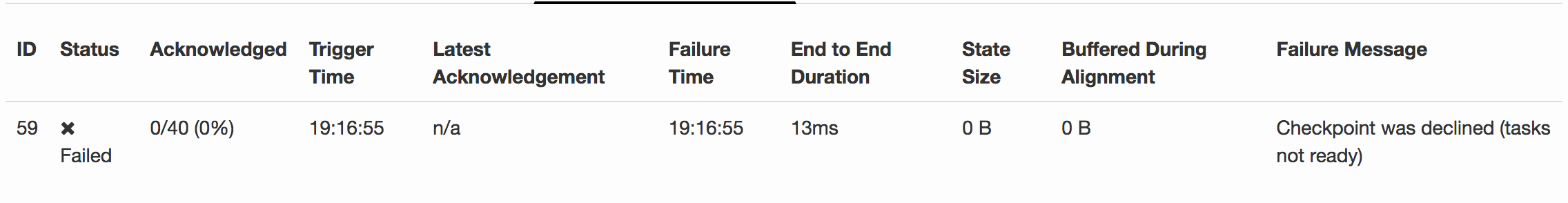

Hi Bartek, I think your explanation of the problem is correct. Thanks a lot for your investigation. What we could do to solve the problem is the following: Either) We start the emitter thread before we restore the elements in the open method. That way the open method won't block forever but only until the first element has been emitted downstream. or) Don't accept a pendingStreamElementQueueEntry by waiting in the processElement function until we have capacity left again in the queue. What do you think? Do you want to contribute the fix for this problem? Cheers, Till On Mon, Oct 23, 2017 at 4:30 PM, bartektartanus <[hidden email]> wrote: Ok, looks like we've found the cause of this issue. The scenario looks like |
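The first option (start the emitter before restoring) can be illustrated with the same kind of stand-in queue. This is a minimal sketch, not the actual Flink patch: `EmitterFirstSketch` and `restoreWithEmitter` are hypothetical names, the "emitter" is just a thread that drains an `ArrayBlockingQueue`, and emitting downstream is modelled as appending to a list.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class EmitterFirstSketch {

    /**
     * Starts a consumer ("emitter") thread first, then restores all recovered
     * elements into a bounded queue. Returns the elements in emission order.
     */
    static List<String> restoreWithEmitter(int capacity, List<String> recovered) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(capacity);
        List<String> emitted = new ArrayList<>();

        Thread emitter = new Thread(() -> {
            try {
                for (int i = 0; i < recovered.size(); i++) {
                    emitted.add(queue.take()); // frees one slot per emitted element
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        emitter.setDaemon(true);
        emitter.start(); // started BEFORE the restore loop below

        try {
            for (String element : recovered) {
                // Blocks only until the emitter has freed a slot, not forever.
                queue.put(element);
            }
            emitter.join(); // also makes the emitter's writes to the list visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<String> recovered = List.of("queued-0", "queued-1", "pending");
        System.out.println(restoreWithEmitter(2, recovered));
    }
}
```

Here the queue capacity is 2 and three elements are recovered, yet the restore completes because the consumer is already running when the third element arrives. The second option would instead keep the snapshot at N elements at most, by not taking a new element until the queue has room.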

Re: Checkpoint was declined (tasks not ready)

I think we could try option number one, as it seems to be easier to implement. Currently I'm cloning the Flink repo to fix this and test that solution with our currently non-working code. Unfortunately, it takes forever to download all the dependencies. Anyway, I hope I will eventually manage to create a pull request (today). Against which branch? Is master ok?

Bartek

Re: Checkpoint was declined (tasks not ready)

Yes, please open the PR against Flink's master branch. You can also ping me once you've opened the PR. Then we can hopefully quickly merge it :-)

Cheers,
Till

On Thu, Oct 26, 2017 at 12:44 PM, bartektartanus <[hidden email]> wrote:

Re: Checkpoint was declined (tasks not ready)

I've created an issue on Jira and prepared a pull request; here's the link: https://github.com/apache/flink/pull/4924

The Travis CI check is not passing, but it looks like it's not my fault :)
